B.4 Stochastic Processes

This appendix will provide a very brief overview of stochastic (or random) processes. If the reader finds extra time, he/she can find many good textbooks about them.

Stochastic or random processes are a powerful formalism for studying signals that incorporate some randomness. While the outcome of a random variable is a number, the outcome of a stochastic process is a time-series, each one called a realization. Hence, when using stochastic processes, it is possible to calculate “time” statistics of a single realization. Besides, one can choose a given time instant and calculate “ensemble” statistics over all realizations at that specific time. These two options may be confusing at first, but are essential. To get intuition on them, the reader is invited to observe the following simple example.

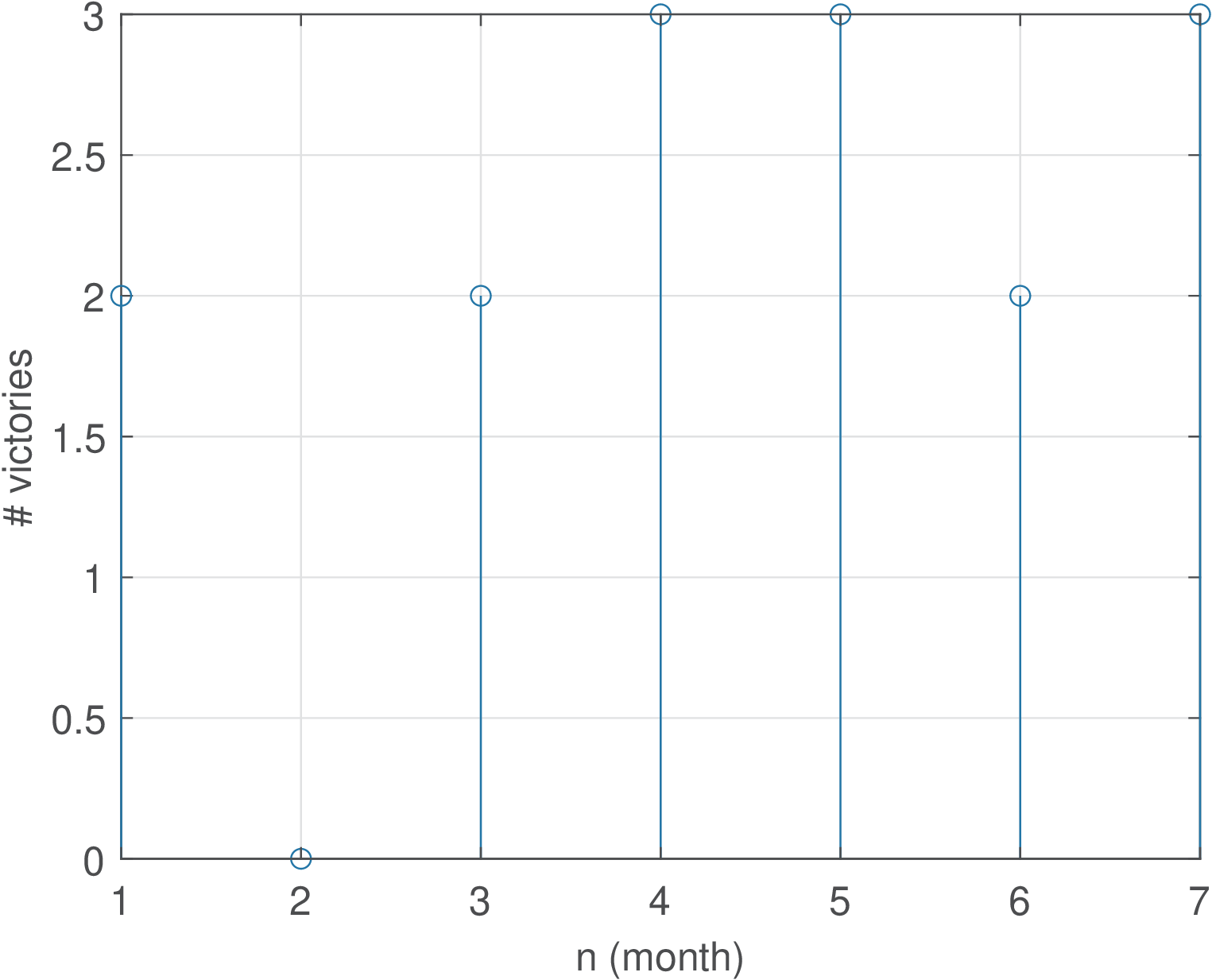

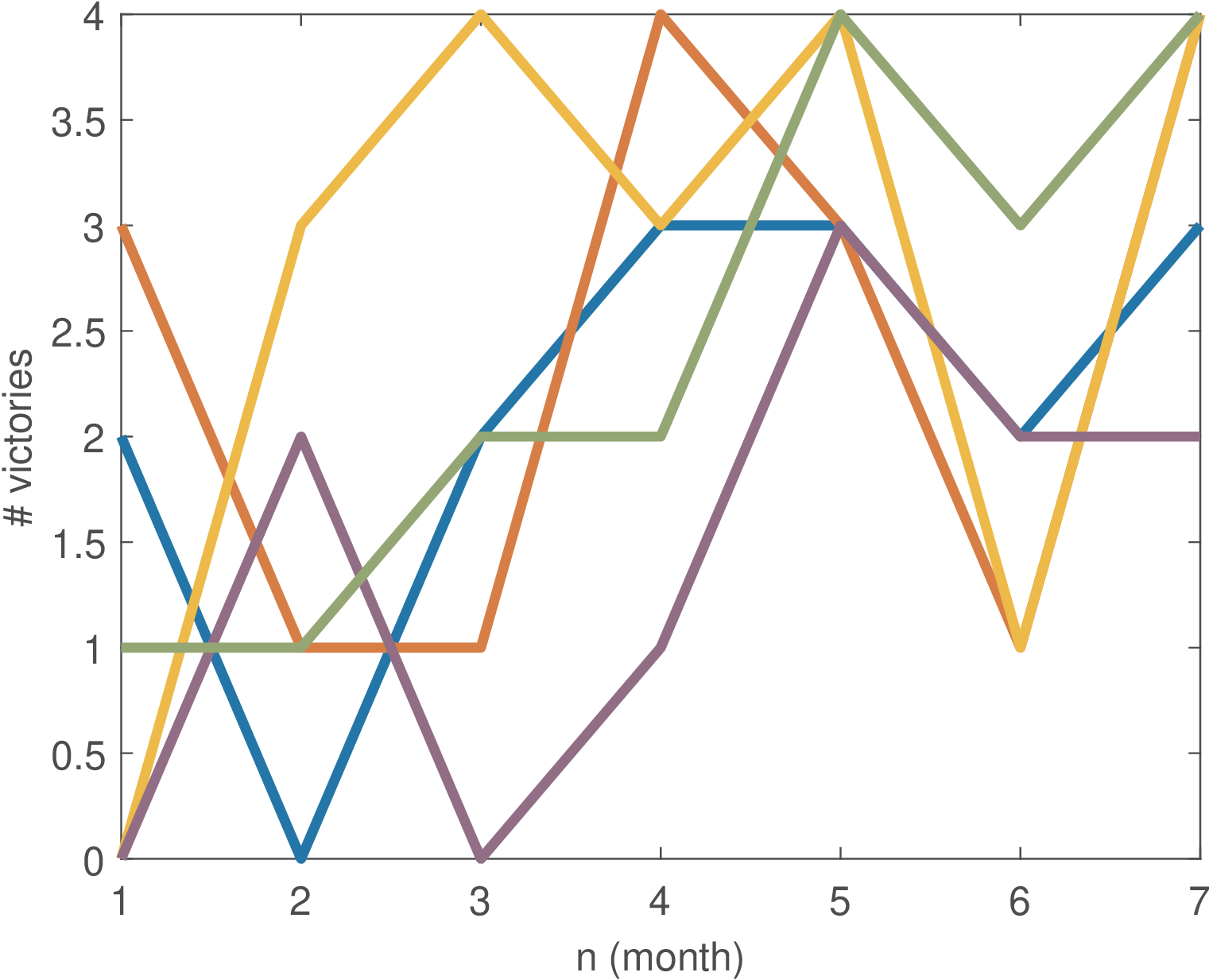

Example B.1. Simple example to explain time and ensemble averages in a stochastic process. Assume a vector [2, 0, 2, 3, 3, 2, 3] where each element represents the outcome of a random variable indicating the number of victories of a football team in four matches (one per week), along a seven-months season (for example, the team won 2 games in the first month and none in the second). One can calculate the average number of victories as , the standard deviation and other moments of a random variable. This vector could be modeled as a finite-duration random signal , with Figure B.3 depicting its graph.

Assume now there are 5 vectors, one for each year. A discrete-time random process can be used to model the data, with each vector (a time series) corresponding to a realization of the random process. For example, following the Matlab convention of organizing different time series in columns (instead of rows), the tabular data below represents the five random signals:

victories = [ 2 3 0 0 1 0 1 3 2 1 2 1 4 0 2 3 4 3 1 2 3 3 4 3 4 2 1 1 2 3 3 4 4 2 4]

Each random signal (column) can be modeled as a realization of a random process. Figure B.4 shows a graphical representation of the data with the five realizations of the example. One should keep in mind that a random process is a model for generating an infinite number of realizations but only 5 are used here for simplicity.

A discrete-time random process will be denoted by and one of its realization as . For the sake of explaining this example, let us define the notation to indicate the -th realization of . Because is a random signal, one can calculate for the given example, and other statistics taken over time. But a random process is a richer model than a random signal and other statistics can be calculated or, equivalently, more questions can be asked.

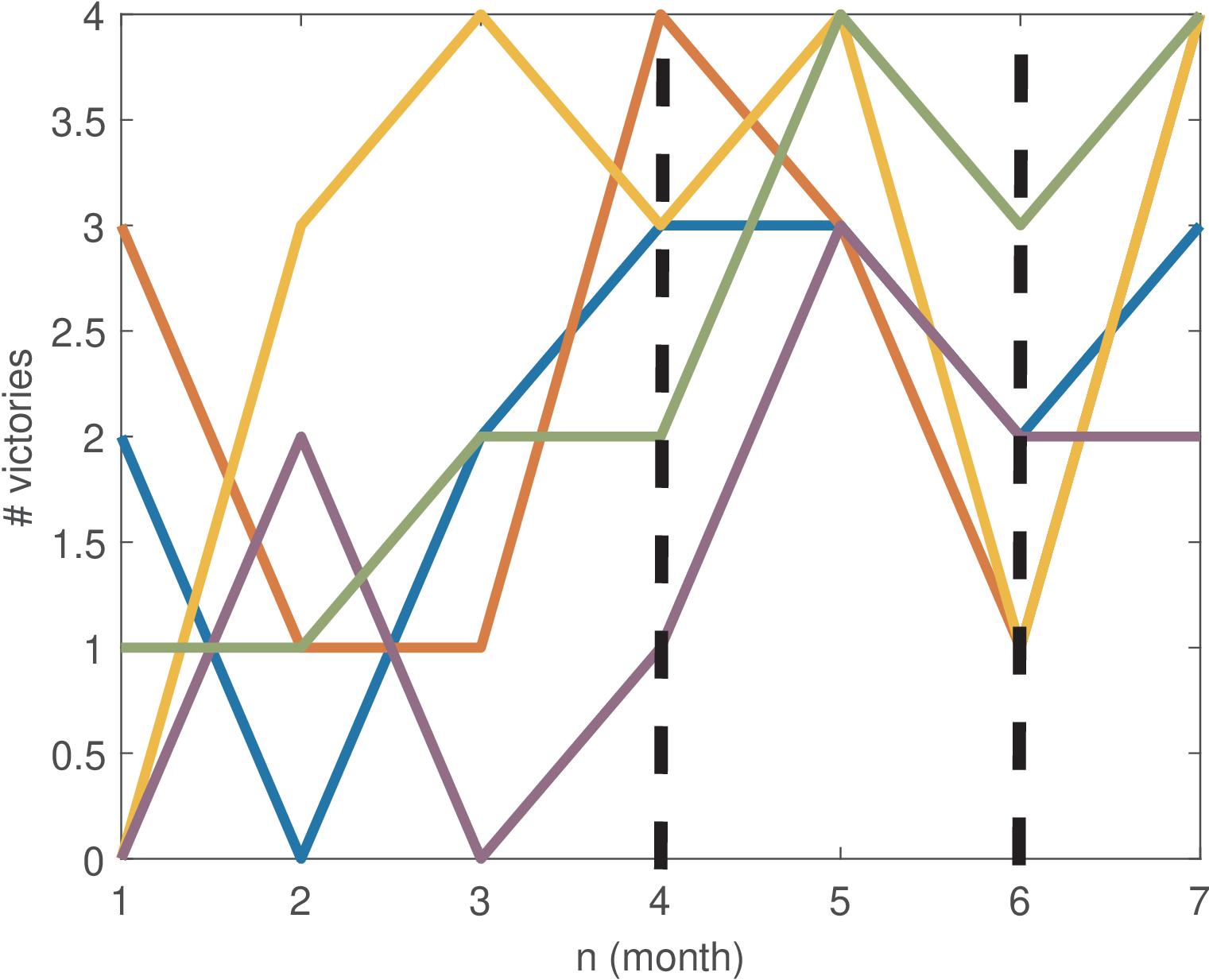

Besides asking for statistics of one realization taken over “time”, it is important to understand that when fixing a given time instant , the values that the realizations assume are also outcomes of a random variable that can be denoted by . In the example, assuming (i.e., assume the fourth month), one can estimate the average . Similarly, . Figure B.5 graphically illustrates the operation of evaluating a random process at these two time instants. Differently than the previous time averages, these “ensemble” statistics are obtained across realizations with fixed time instants.

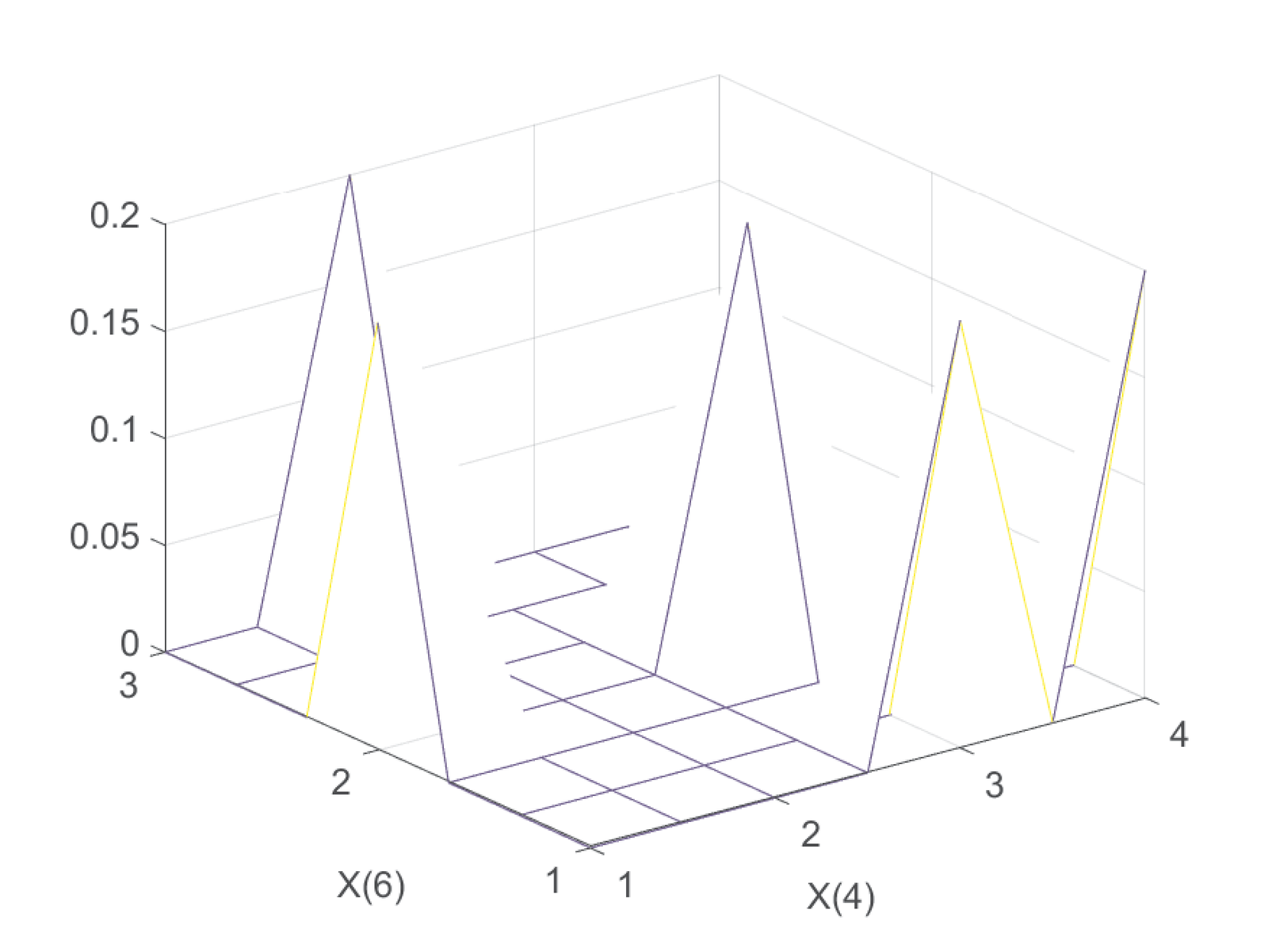

The continuous-time random process is similar to its discrete-time counterpart. Taking two different time values and leads to a pair of random variables, which can then be fully (statistically) characterized by a joint pdf function . This can be extended to three or more time instants (random variables). Back to the discrete-time example, one can use a normalized two-dimensional histogram to estimate the joint PMF of and as illustrated in Figure B.6.

This example aimed at distinguishing time and ensemble averages, which is fundamental to understand stochastic processes.

Correlation Function and Matrix

Second-order moments of a random process involve statistics taken at two distinct time instants. Two of them are the correlation and covariance functions, which are very useful in practice.

As discussed in Section 1.10, the correlation function is an extension of the correlation concept to random signals generated by stochastic processes. The autocorrelation function (ACF)

|

| (B.31) |

is the correlation between random variables and at two different points and in time of the same random process. Its purpose is to determine the strength of relationship between the values of the signal occurring at two different time instants. For simplicity, it is called instead of .

A discrete-time version of Eq. (B.31) is

|

| (B.32) |

where and are two “time” instants.

Specially for discrete-time processes, it is useful to organize autocorrelation values in the form of an autocorrelation matrix.

Example B.2. Calculating and visualizing the autocorrelation matrix (not assuming stationarity). Stationarity is a concept that will be discussed in the sequel (Appendix B.4.0.0). Calculating and visualizing autocorrelation is simpler when the process is stationary. But in this example, this property is not assumed and the autocorrelation is obtained for a general process.

Listing B.1 illustrates the estimation of for a general discrete-time random process.3

1function [Rxx,n1,n2]=ak_correlationEnsemble(X, maxTime) 2% function [Rxx,n1,n2]=ak_correlationEnsemble(X, maxTime) 3%Estimate correlation for non-stationary random processes using 4%ensemble averages (does not assume ergodicity). 5%Inputs: - X, matrix representing discrete-time process realizations 6% Each column is a realization of X. 7% - maxTime, maximum time instant starting from n=1, which 8% corresponds to first row X(1,:). Default: number of rows. 9%Outputs: - Rxx is maxTime x maxtime and Rxx[n1,n2]=E[X[n1] X*[n2]] 10% - n1,n2, lags to properly plot the axes. The values of 11% n1,n2 are varied from 1 to maxTime. 12[numSamples,numRealizations] = size(X); %get number of rows and col. 13if nargin==1 14 maxTime = numSamples; %assume maximum possible value 15end 16Rxx = zeros(maxTime,maxTime); %pre-allocate space 17for n1=1:maxTime 18 for n2=1:maxTime %calculate ensemble averages 19 Rxx(n1,n2)=mean(X(n1,:).*conj(X(n2,:))); %ensemble statistics 20 %that depends on numRealizations stored in X 21 end 22end

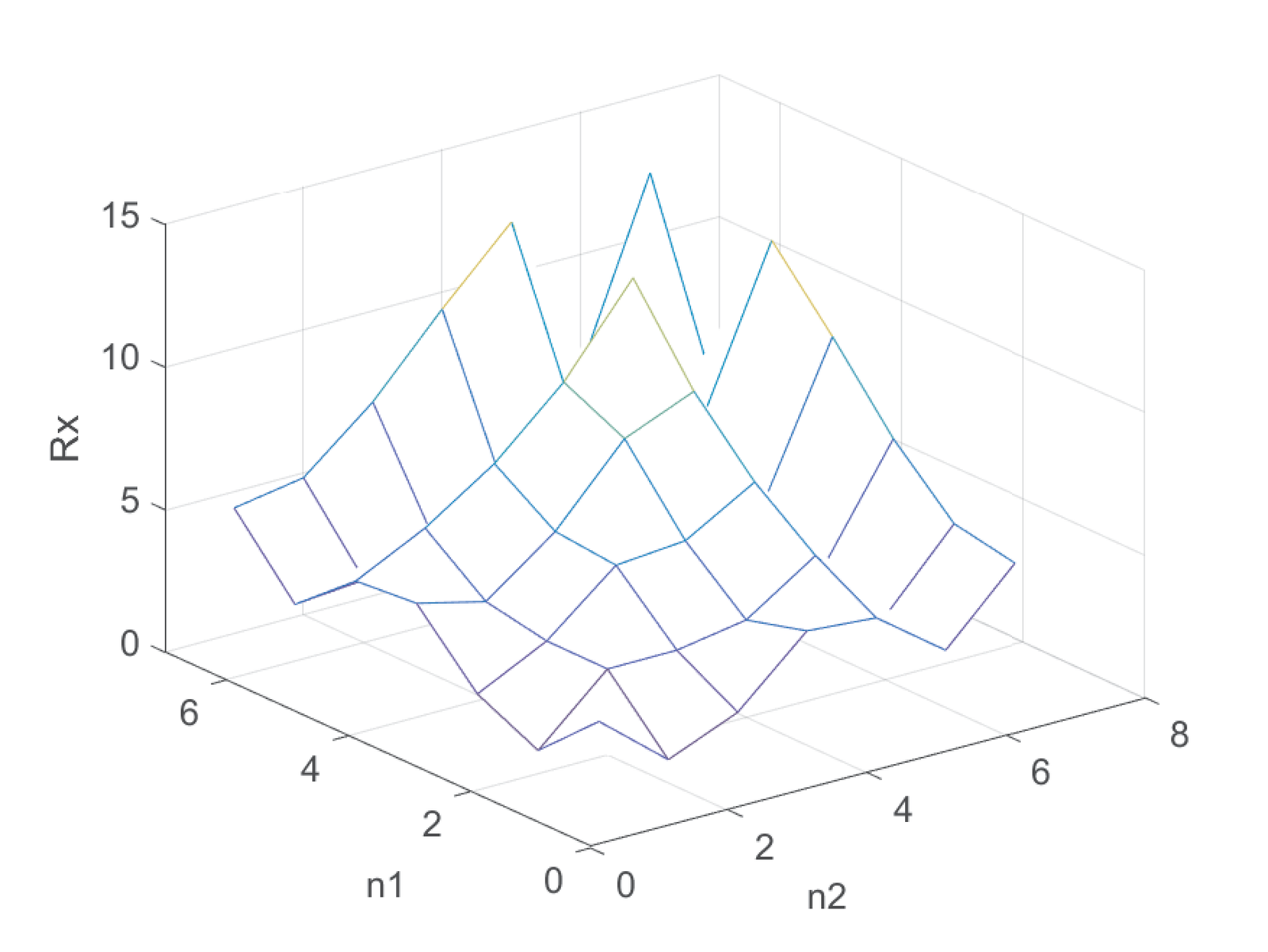

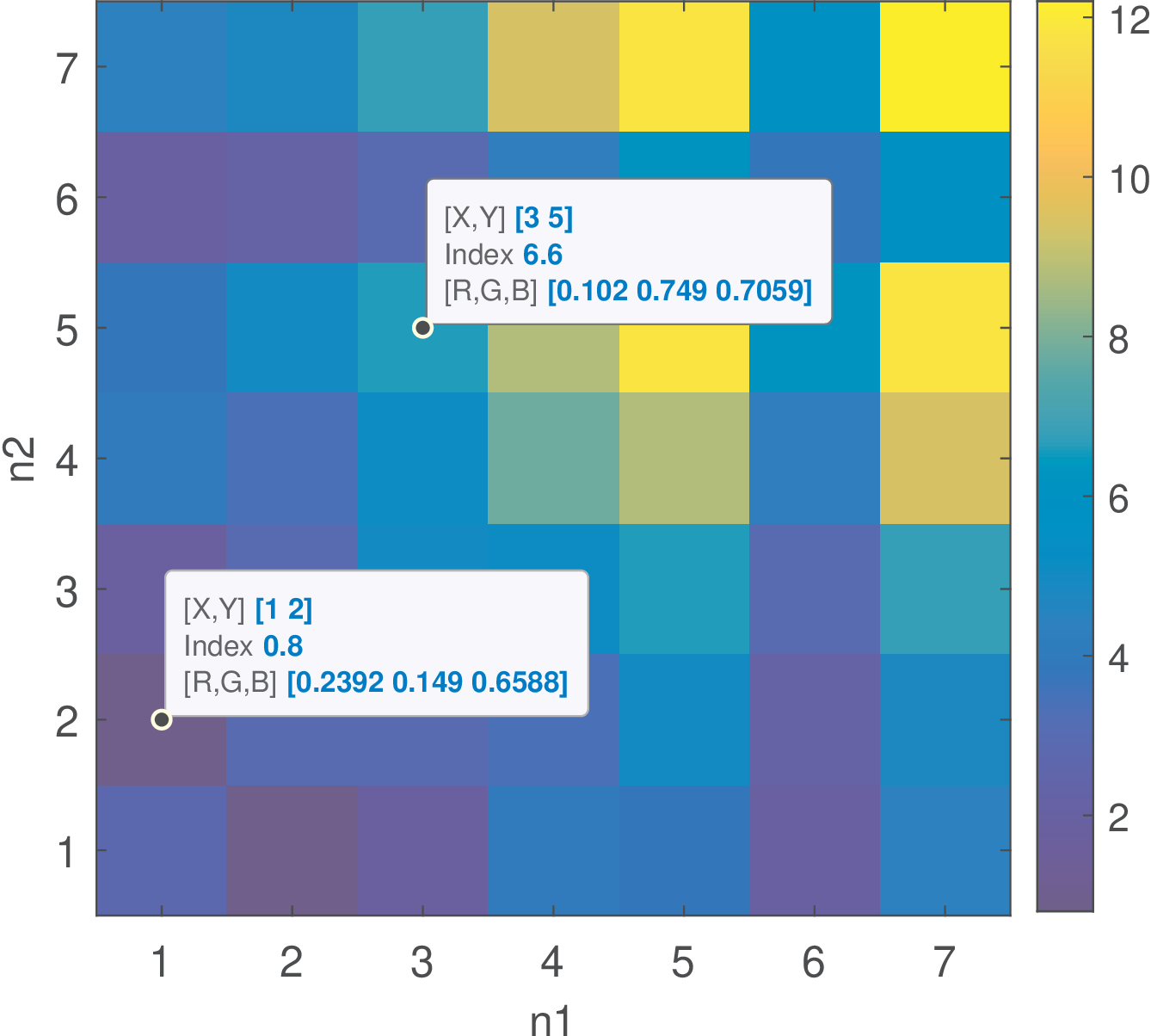

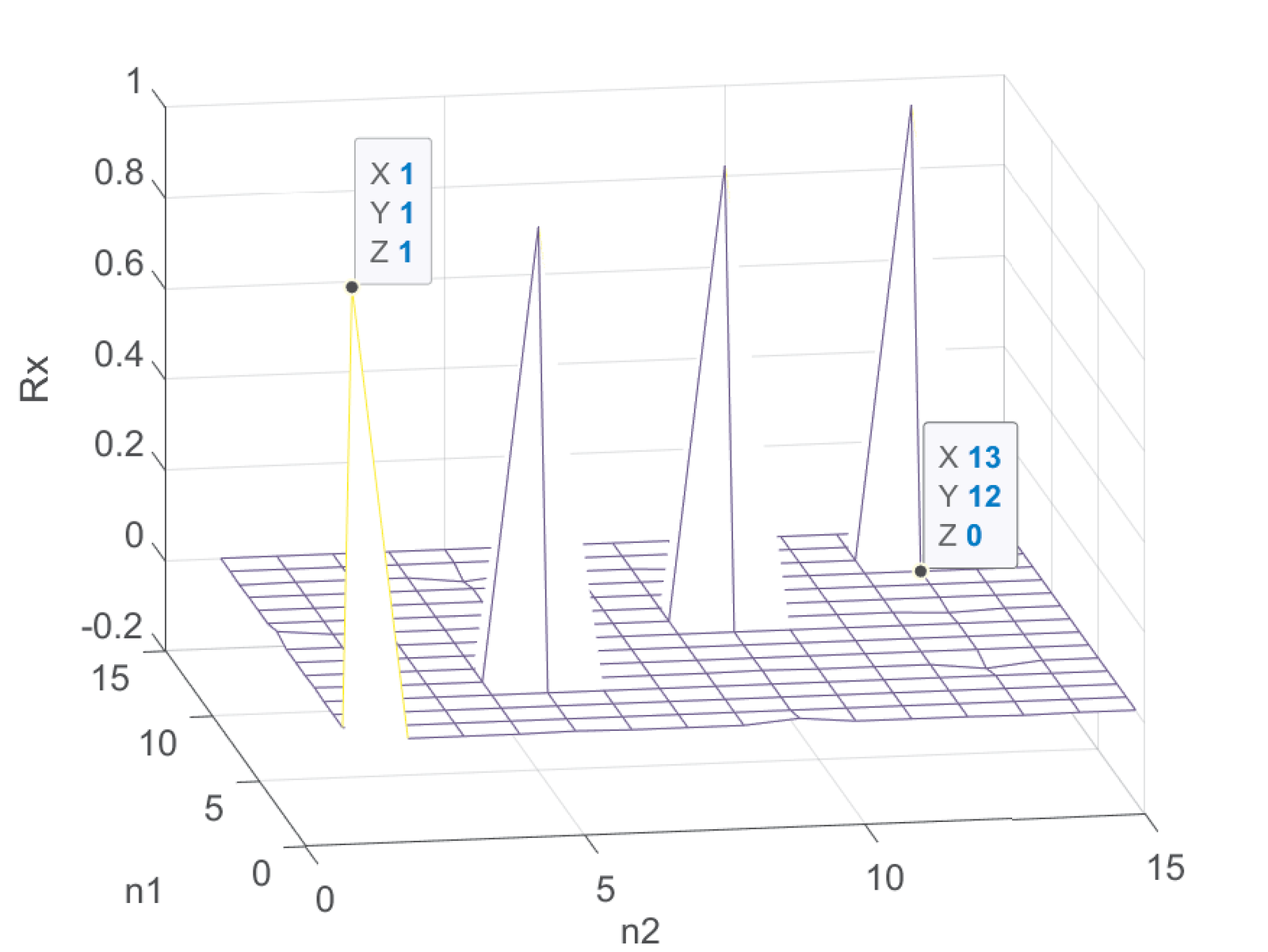

Figure B.7 was created with the command ak_correlationEnsemble(victories) and shows the estimated correlation for the data in Figure B.4 of Example B.1. The data cursor indicates that because for the random variables of the five realizations are . In this case, . Similar calculation leads to because in this case the products victories(1,:).*victories(7,:) are [6, 12, 0, 0, 4], which have an average of 4.4.

Figure B.8 presents an alternative view of Figure B.7, where the values of the z-axis are indicated in color. The two datatips indicate that and (these are the values identified by Index for the adopted colormap, and the associated color is also shown via its RGB triple).

For real-valued processes, the autocorrelation is symmetric, as indicated in Figure B.7 and Figure B.8, while it would be Hermitian for complex-valued processes.

Two time instants: both absolute or one absolute and a relative (lag)

When characterizing second-order moments of a random process, instead of using two absolute time instants, it is sometimes useful to make one instant as a relative “lag” with respect to the other. For example, take two absolute instants and in Eq. (B.31). Alternatively, the same pair of instants can be denoted by considering as the absolute time that provides the reference instant and a lag indicating that the second instant is separated by 3 from the reference.

Using this scheme, Eq. (B.31) can be written as

|

| (B.33) |

Similarly, Eq. (B.32) can be denoted as

|

| (B.34) |

with and , where is the lag in discrete-time.

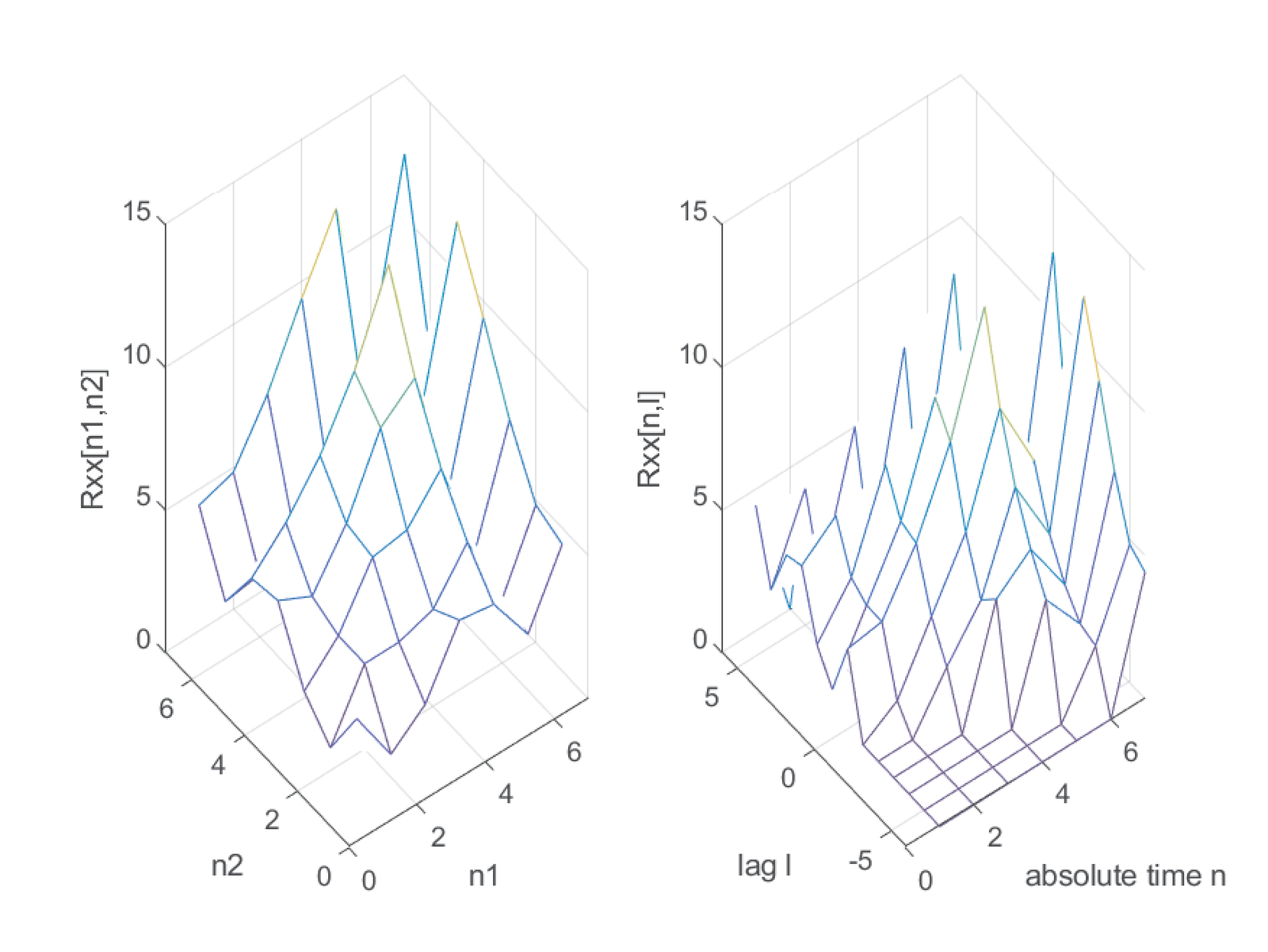

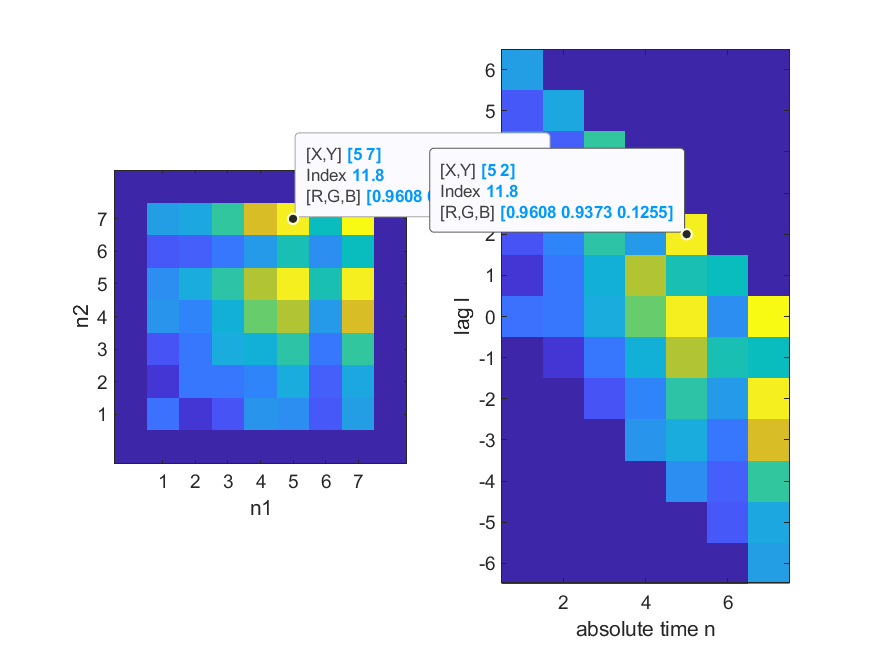

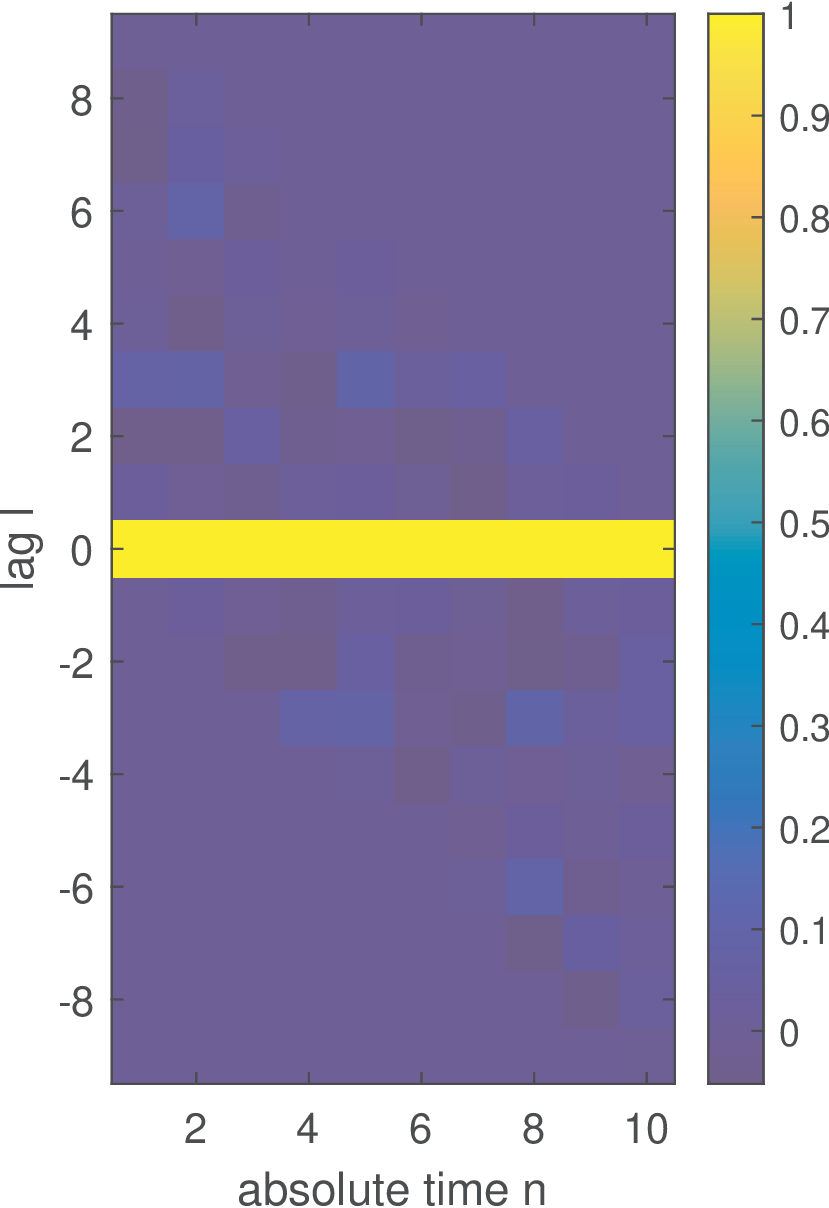

Example B.3. Contrasting two different representations of autocorrelations. Figure B.7 is a graph that corresponds to Eq. (B.32), with two absolute time instants. When using the alternative representation of Eq. (B.34), with a lag, the resulting matrix is obtained by rearranging the elements of the autocorrelation matrix. Figure B.9 compares the two cases. Note that the name autocorrelation matrix is reserved for the one derived from Eq. (B.32) (and Eq. (B.31)) that is, for example, symmetric.

Figure B.10 is an alternative view of Figure B.9, which makes visualization easier. It better shows that Eq. (B.34) leads to shifting the column of the autocorrelation matrix (Figure B.7) corresponding to each value of interest.

Properties such as the symmetry of the autocorrelation matrix may not be so evident from the right-side representation of Figure B.10 as in the left-side representation, but both are useful in distinct situations.

Covariance matrix

Similar to autocorrelation functions, it is possible to define covariance functions that expand the definition for random variables such as Eq. (1.49) and take as arguments two time instants of a random process. For example, for a complex-valued discrete-time process the covariance is

|

| (B.35) |

Stationarity, cyclostationarity and ergodicity

A random process is a powerful model. Hence, an important question is what information is necessary to fully characterize it. Take the matrix in Example B.1: this data does not fully characterize the random process because it consists of only five realizations (tossing a coin five times does not allow to estimate its probability of heads robustly). We would need an infinite number of realizations if we insist in characterizing it in a tabular form. The most convenient alternative is to describe it through pdfs. We should be able to indicate, for all time , the pdf of . Besides, for each time pair , the joint pdf should be known. The same for the pdf of each triple and so on. It can be noted that a general random process requires a considerable amount of statistics to be fully described. So, it is natural to define particular and simpler cases of random processes as discussed in the sequel.

An important property of a random process is the stationarity of a statistics, which means this statistics is time-invariant even though the process is random. More specifically, a process is called -th order stationary if the joint distribution of any set of of its random variables is independent of absolute time values, depending only on relative time.

A first-order stationary process has the same pdf (similar is valid for discrete-time). Consequently, it has a constant mean

|

| (B.36) |

constant variance and other moments such as .

A second-order stationary process has the same joint pdf . Consequently, its autocorrelation is

|

| (B.37) |

where the time difference is called the lag and has the same unit as in . Note that, in general, of Eq. (B.33) depends on two parameters: and . However, for a stationary process, Eq. (B.37) depends only on the lag because, given , is the same for all values of .

Similarly, if a discrete-time process is second-order stationary, Eq. (B.34) can be simplified to

|

| (B.38) |

where is the lag.

A third-order stationary process has joint pdfs that do not depend on absolute time values and so on. A random process is said to be strict-sense stationary (SSS) or simply stationary if any joint pdf is invariant to a translation by a delay . A random process with realizations that consist of i. i. d. random variables (called an i. i. d. process) is SSS. A random process that is not SSS is referred to as a non-stationary process even if some of its statistics have the stationarity property.

The SSS process has very stringent requirements, which are hard to verify. Hence, many real-world signals are modeled as having a weaker form of stationarity called wide-sense stationarity.

Basically, a wide-sense stationary (WSS) process has a mean that does not vary over time and an autocorrelation that does not depend on absolute time, as indicated in Eq. (B.36) and Eq. (B.37), respectively. Broadly speaking, wide-sense theory deals with moments (mean, variance, etc.) while strict-sense deals with probability distributions. Note that SSS implies WSS but the converse is not true in general, with Gaussian processes being a famous exception.

For example, the process corresponding to Figure B.7 is not WSS: its autocorrelation does not depend only on the time difference .

Example B.4. The autocorrelation matrix of a WSS process is Toeplitz. For a WSS process, elements of its autocorrelation matrix depend only on the absolute difference and, therefore, the matrix is Toeplitz. In this example, the function toeplitz.m is used to visualize the corresponding autocorrelation matrix as an image. For example, the command Rx=toeplitz(1:4) generates the following real-valued matrix:

Rx=[1 2 3 4 2 1 2 3 3 2 1 2 4 3 2 1].

Usually, the elements of the main diagonal correspond to .

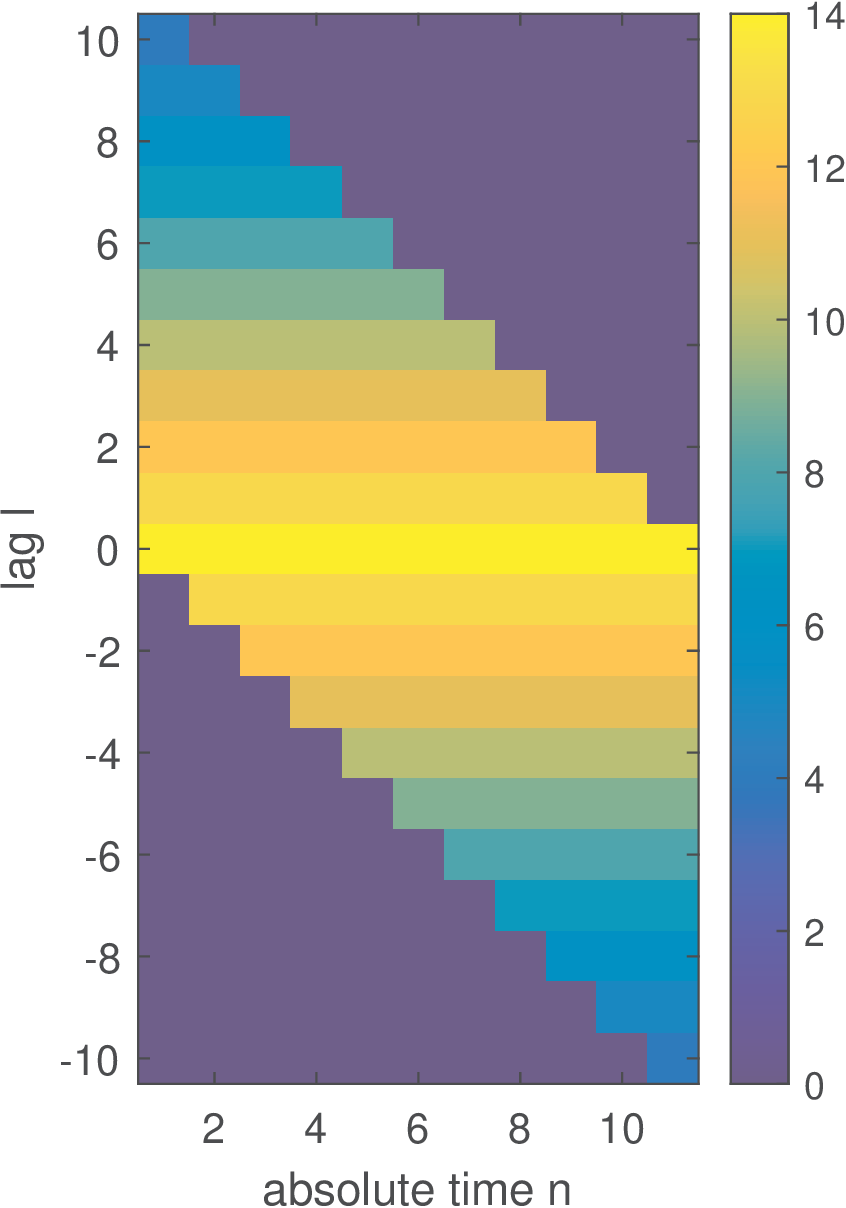

To visualize a larger matrix, Figure B.11 was generated with Rxx=toeplitz(14:-1:4).

Given the redundancy in Figure B.11, one can observe that for a WSS, it suffices to describe for each value of the lag .

A cyclostationary process is a non-stationary process having statistical properties that vary periodically (cyclically) with time. In some sense it is a weaker manifestation of a stationarity property. Cyclostationary processes are further discussed in Appendix B.4.1.

A given statistics (variance, autocorrelation, etc.) of a random process is ergodic if its time average is equal to the ensemble average. For example, an i. i. d. random process is ergodic in the mean.4 And, loosely speaking, a WSS is ergodic in both mean and autocorrelation if its autocovariance decays to zero.5

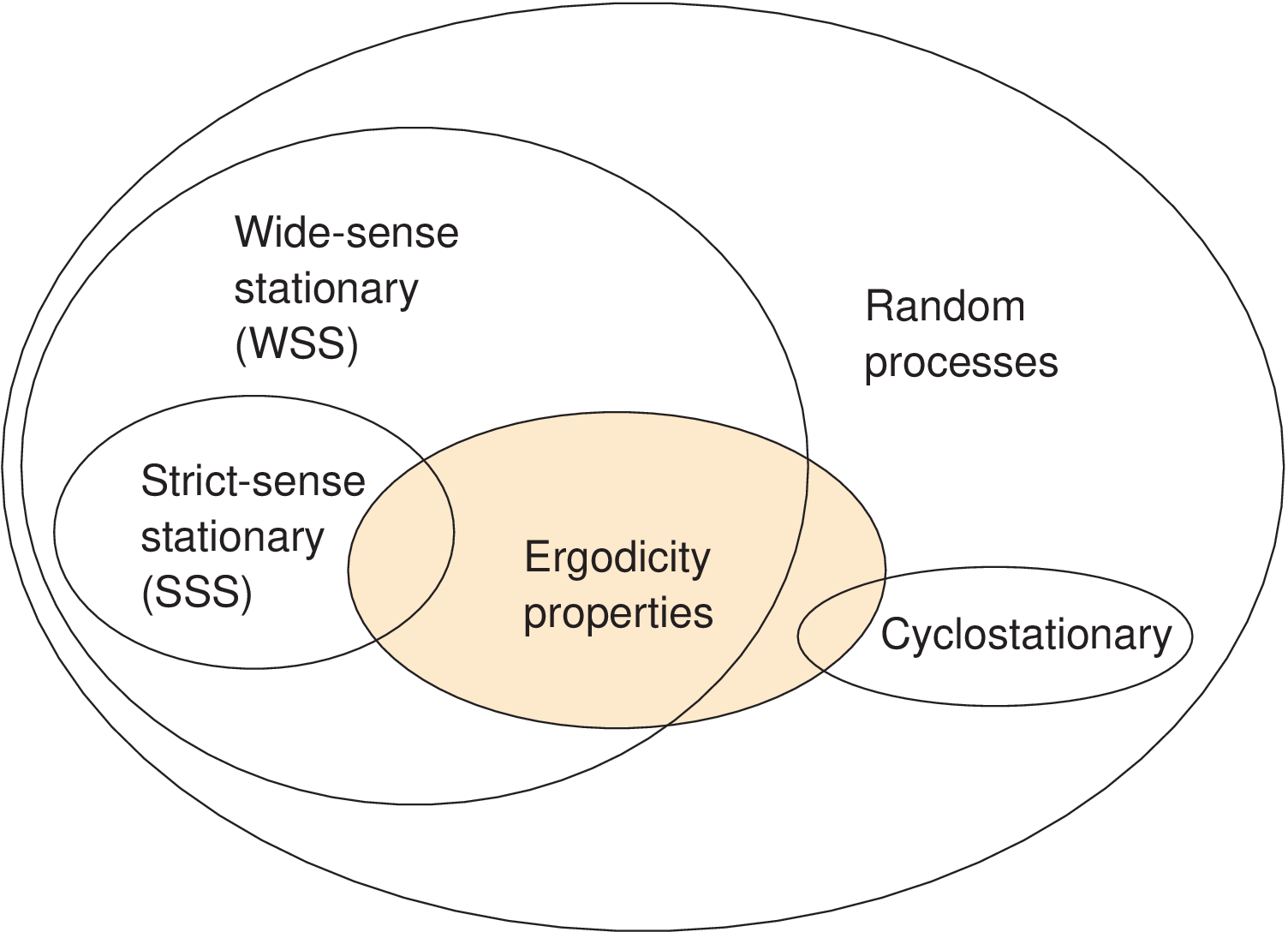

A process could be called “ergodic” if all ensemble and time averages are interchangeable. However, it is more pedagogical to consider ergodicity as a property of specific statistics, and always inform them.6 Even non-stationary or cyclostationary processes can be ergodic in specific statistics such as the mean. Figure B.12 depicts the suggested taxonomy of random processes using a Venn diagram.

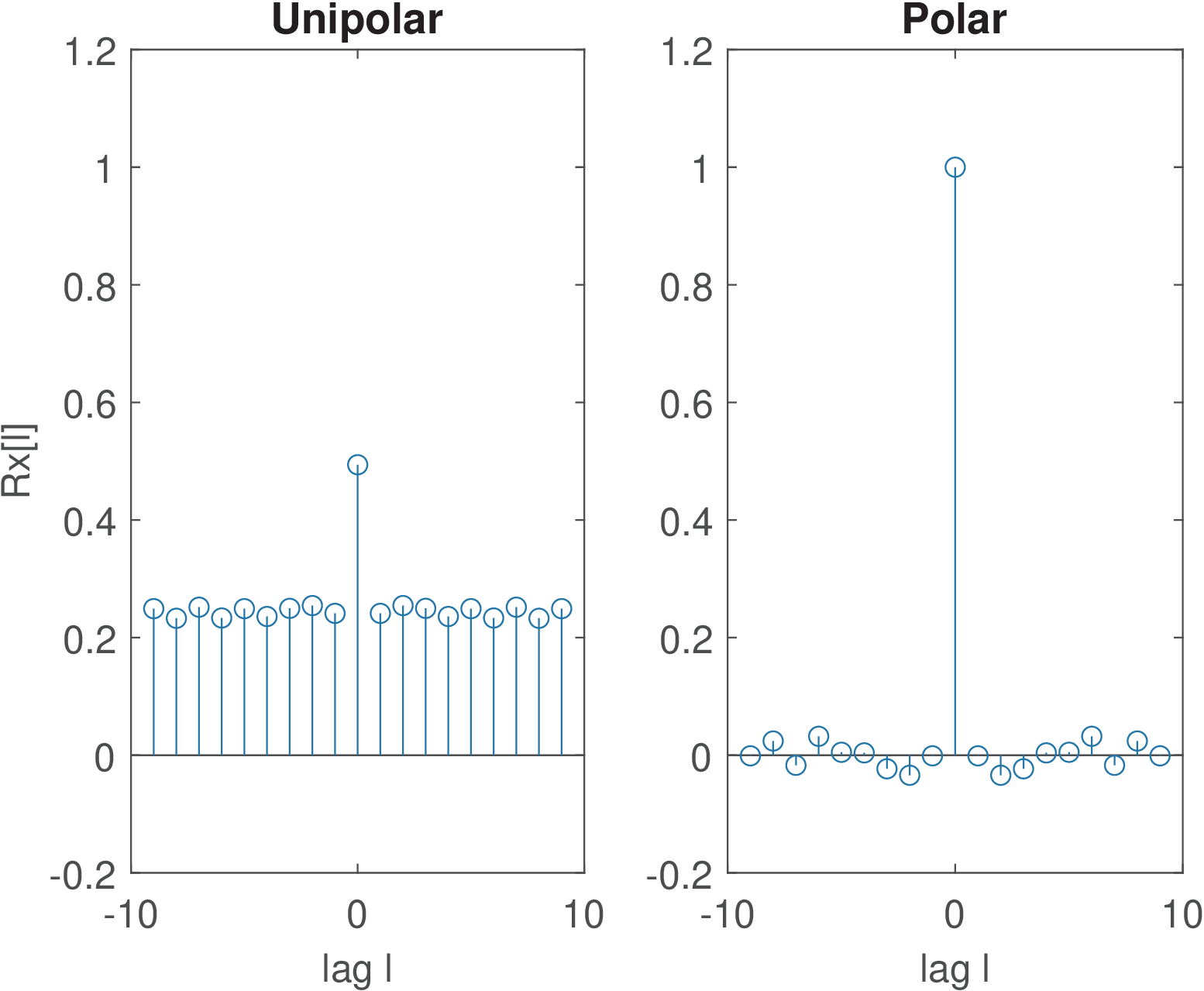

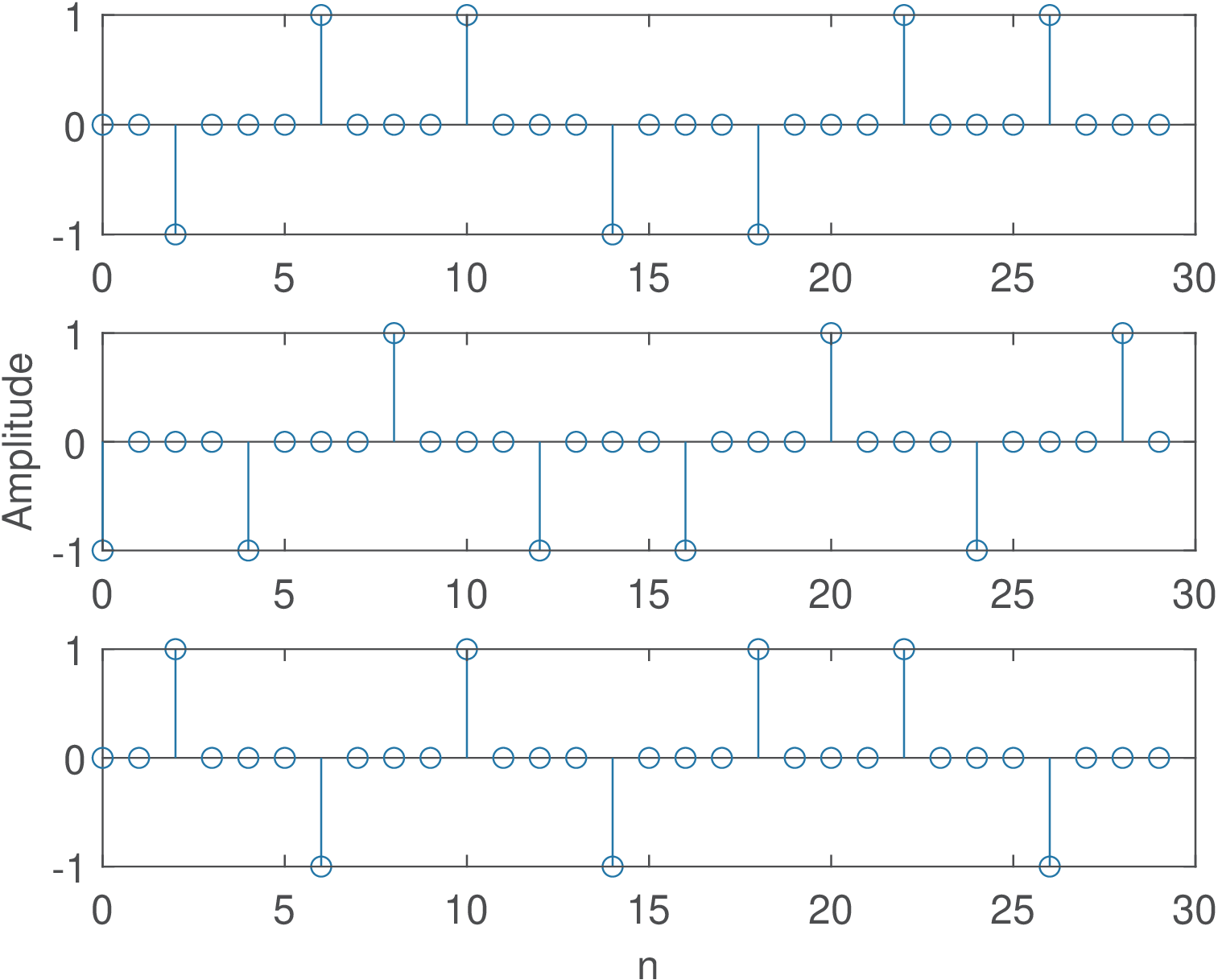

Example B.5. Polar and unipolar bitstreams modeled as WSS processes. This example is based on processes used in digital communications to represent sequences of bits. Note that, after these bit streams are upsampled in order to create line codes, they become cyclostationary processes. This issue is discussed in Appendix B.4.1, while here the sequences are not upsampled.

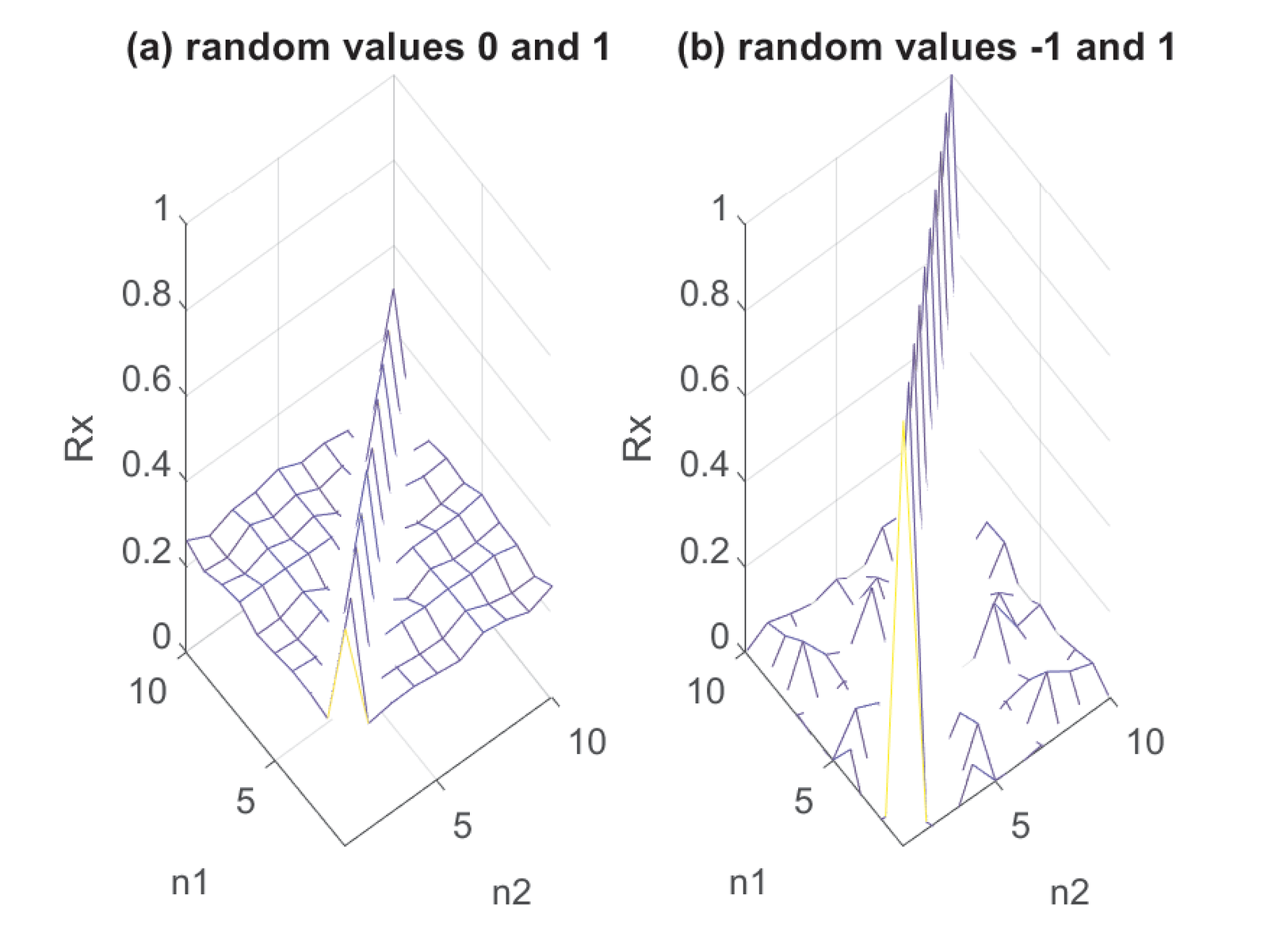

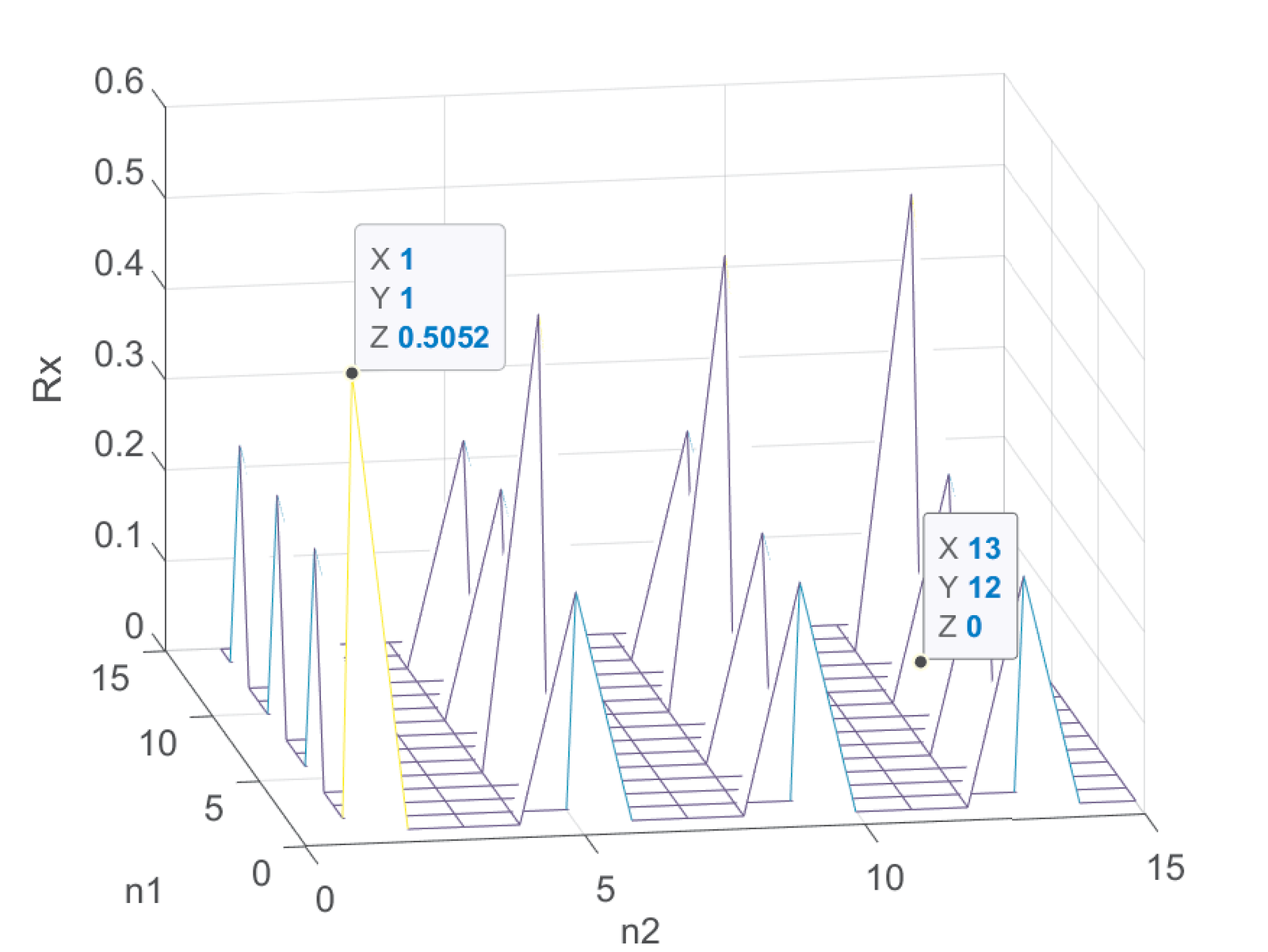

Figure B.13 illustrates two processes for which the main diagonal has the same values. The process (a) (left-most) is called a unipolar line code and consists of sequences with equiprobable values 0 and 1, while for the second process (called polar) the values are and 1.

For the unipolar and polar cases, the theoretical correlations for are 0.5 and 1, respectively. The result is obtained by observing that and for the unipolar case, is 0 or 1. Because the two values are equiprobable, . For the polar case, always and . The values of for are obtained by observing the values of all possible products . For the unipolar case, the possibilities are , , and , all with probability 1/4 each. Hence, for this unipolar example, . For the polar case, the possibilities are , , and , all with probability 1/4 each. Hence, for this polar example, .

In summary, for the polar code for and 0 otherwise. For the unipolar code, for and 0.25 otherwise.

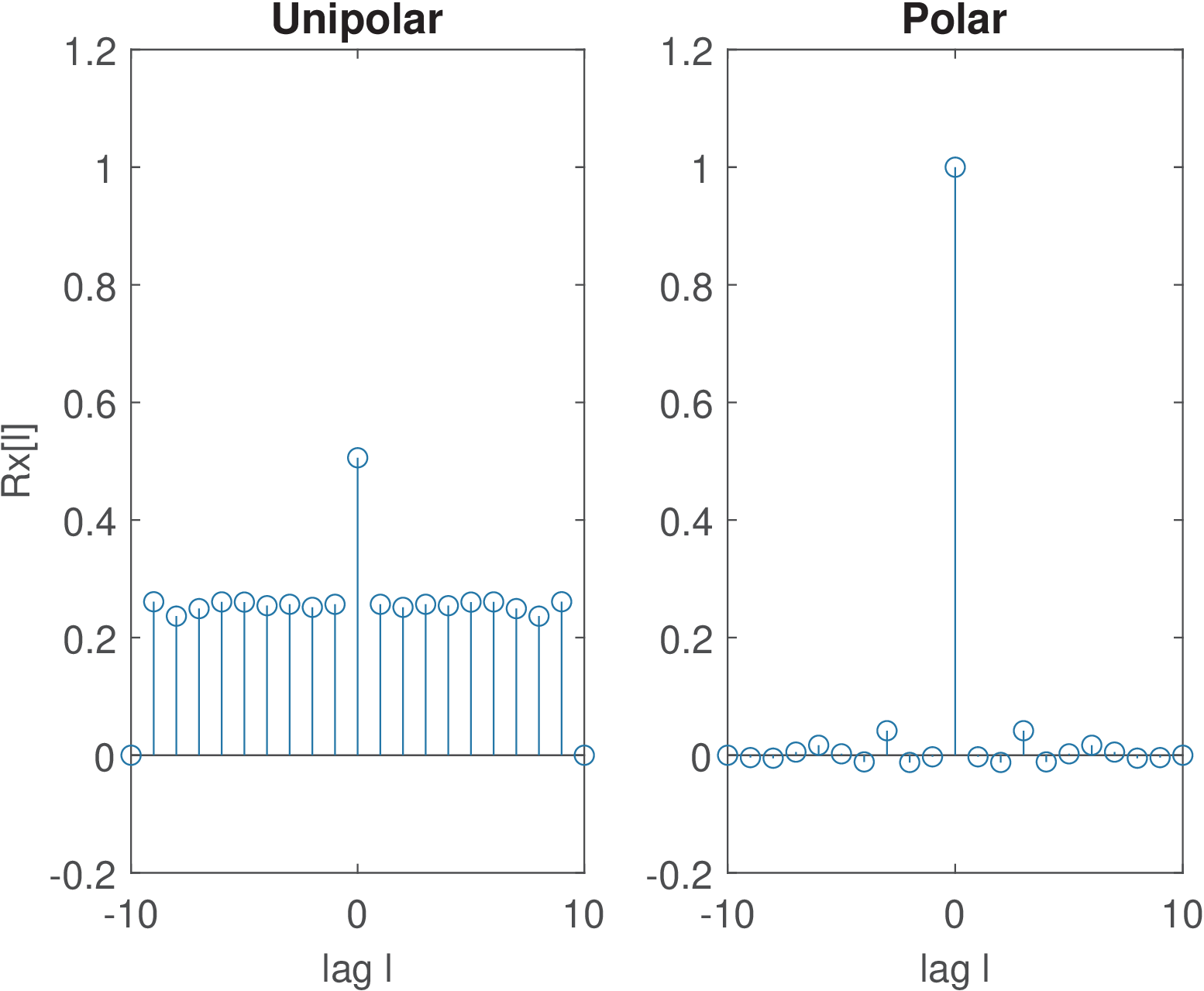

A careful observation of Figure B.13 indicates that two dimensions are not necessary and it suffices to describe as in Eq. (B.34). As discussed previously, in the case of WSS processes, the correlation depends only on the difference between the “time” instants and . Figure B.14 emphasizes this aspect using another representation for the polar case in Figure B.13.

Because the polar and unipolar processes that were used to derive Figure B.13 are WSS, Listing B.2 can be used to take advantage of having depending only on the lag and convert this matrix to an array .

1function [Rxx_tau, lags] = ak_convertToWSSCorrelation(Rxx) 2% function [Rxx_tau,lags]=ak_convertToWSSCorrelation(Rxx) 3%Convert Rxx[n1,n2] into Rxx[k], assuming process is 4%wide-sense stationary (WSS). 5%Input: Rxx -> matrix with Rxx[n1,n2] 6%Outputs: Rxx_tau[lag] and lag=n2-n1. 7[M,N]=size(Rxx); %M is assumed to be equal to N 8lags=-N:N; %lags 9numLags = length(lags); %number of lags 10Rxx_tau = zeros(1,numLags); %pre-allocate space 11for k=1:numLags; 12 lag = lags(k); counter = 0; %initialize 13 for n1=1:N 14 n2=n1+lag; %from: lag = n2 - n1 15 if n2<1 || n2>N %check 16 continue; %skip if out of range 17 end 18 Rxx_tau(k) = Rxx_tau(k) + Rxx(n1,n2); %update 19 counter = counter + 1; %update 20 end 21 if counter ~= 0 22 Rxx_tau(k) = Rxx_tau(k)/counter; %normalize 23 end 24end

Figure B.15 was generated using Listing B.2 for the data in Figure B.13.

If ergodicity can be assumed, a single realization of the process can be used to estimate the ACF. Figure B.16 was generated using the code below, which generates a single realization of each process:

1numSamples=1000; 2Xunipolar=floor(2*rand(1,numSamples)); %r.v. 0 or 1 3[Rxx,lags]=xcorr(Xunipolar,9,'unbiased');%Rxx for unipolar 4subplot(121); stem(lags,Rxx) title('Unipolar'); 5Xpolar=2*[floor(2*rand(1,numSamples))-0.5]; %r.v. -1 or 1 6[Rxx,lags]=xcorr(Xpolar,9,'unbiased'); %Rxx for polar code 7subplot(122); stem(lags,Rxx) title('Polar');

Increasing the number of samples to numSamples=1e6 achieves estimation errors smaller than in Figure B.16.

B.4.1 Cyclostationary random processes

This section aims at providing some intuition about cyclostationarity. More information can be found e. g. in [Gia99, Ant07, Gar91].

A discrete-time process is wide-sense cyclostationary (WSC) if, and only if, exists an integer period such that the mean and correlation are periodic (consequently, the covariance is periodic too), as summarized by:

Note that the correlation is periodic but a cyclostationary process generates realizations that are random and not periodic. To avoid confusion, the period in Eq. (B.39) of a WSC process is referred to as its cycle.

Because WSC are more common than strict-sense cyclostationary processes, the term cyclostationary is assumed here to correspond to a WSC (wide, not strict sense), as adopted in [Gia99], which is the main reference for the discussion presented in the sequel. In order to better understand WSC processes, it is useful to review the concepts of non-stationary processes in Appendix B.4 and get familiar with representations such as the ones in Figure B.9 and Figure B.10. While WSS processes have autocorrelations depending only on one parameter, the lag , WSC correlations depend on two parameters () and this is a key factor for the following discussion.

Note that Eq. (B.39) implies the correlation in Eq. (B.34) is periodic in for each “lag” . In other words, given a lag value , the time-series over the “absolute time instant” is periodic. Hence, it can be represented using a DTFS in which the Fourier coefficients are called cyclic correlations. Therefore, when has a period of samples over , the following Fourier pair can be used:

|

| (B.40) |

is also called time-varying correlation and instantaneous correlation.

As explained in [Gia99], sampling a continuous-time periodic signal may lead to an almost periodic signal for which Eq. (1.21) does not hold. This occurs in the so-called wide-sense almost cyclostationary processes, in which has the following generalized Fourier series:

|

| (B.41) |

where is the set of cycles.

The estimation of both and is often done with FFTs and, consequently, both periodic and almost periodic realizations are treated similarly. Hereafter, the notation is also adopted for .

For a given cycle , the evolution of over the lags can be interpreted over frequency using its DTFT to define the cyclic spectrum:

|

| (B.42) |

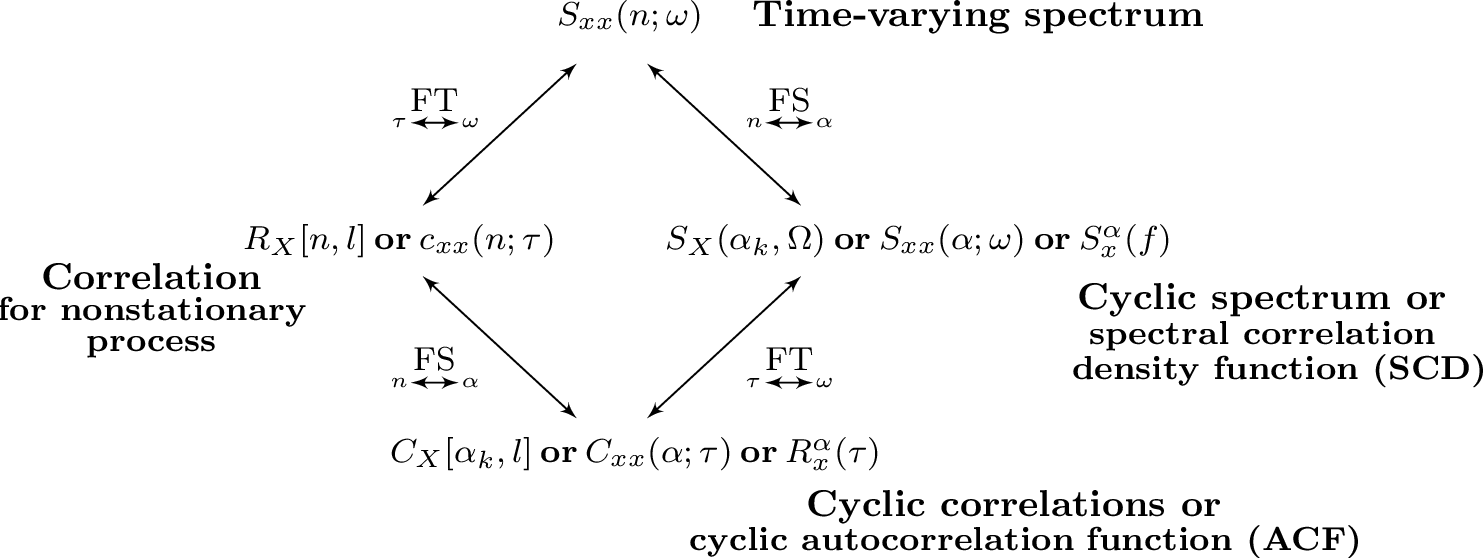

In summary, while stationary discrete-time signals are analyzed using PSDs in frequency-domain () and time-invariant correlations in lag-domain (), non-stationary signals include also the time () and cycle () domains. Figure B.17 illustrates the relations.

As further discussed in Section B.4.3, a cyclostationary process can be converted into a WSS through the “uniform shift” or “uniformly randomizing the phase” [Gia99].

The following example aims at making these concepts more concrete.

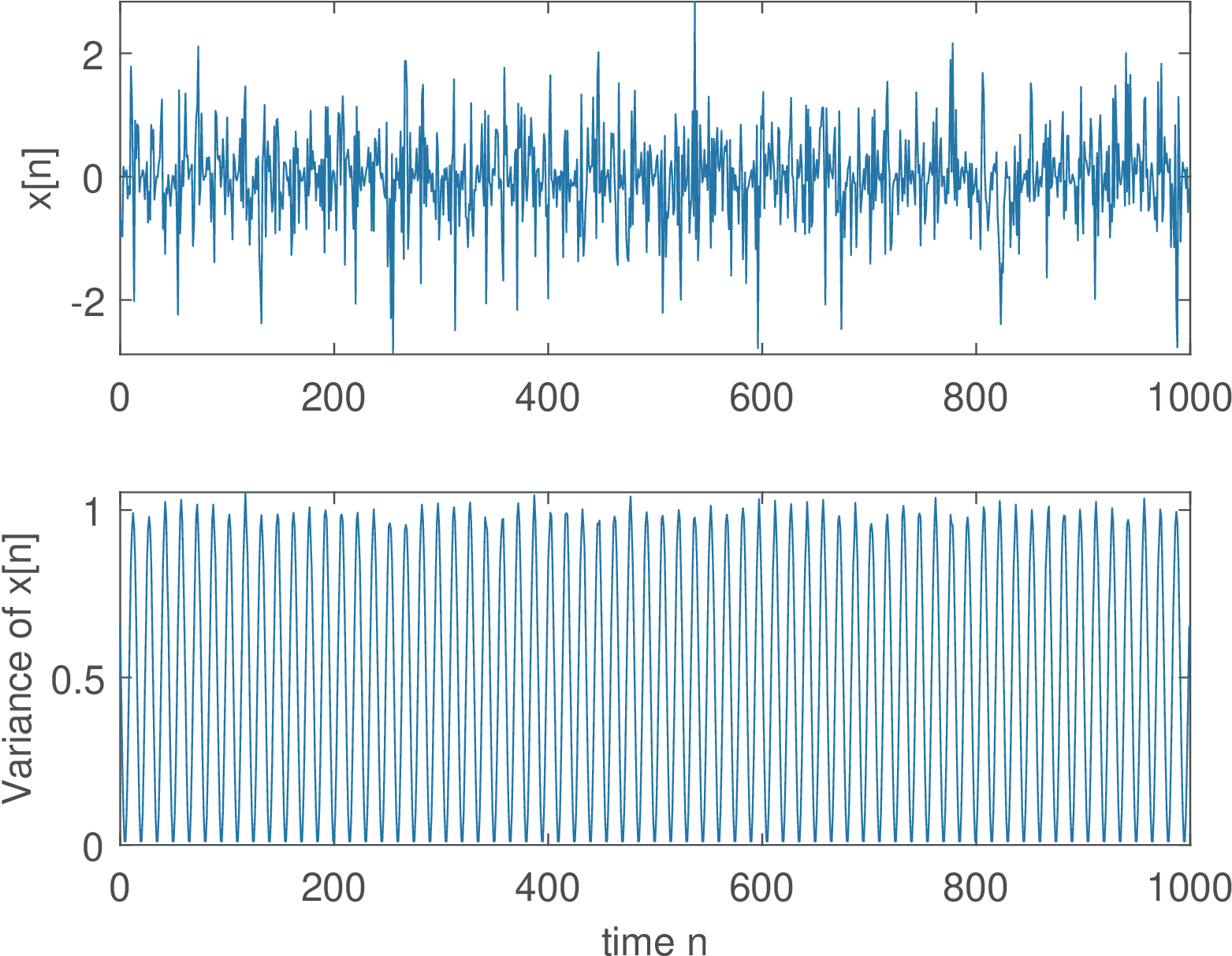

Example B.6. Example: WGN modulated by sinusoid. Assume represents a realization of an i. i. d. white Gaussian noise process with variance . Each realization is modulated by a carrier of frequency and phase (both in radians) leading to7

|

| (B.43) |

The top plot in Figure B.18 illustrates a single realization of , as generated by Listing B.3. It can be seen that the sinusoid is “buried” in noise. All M=5000 realizations are stored in matrix X. The ensemble variance for each “time” instant is shown in the bottom plot of Figure B.18, indicating that the variance has a period (in this case, 15 samples, given that P=30) because it depends on the absolute value of the sinusoid amplitude at instant . This can be also seen from the correlation of over time, as discussed in the sequel.

1M=5000; %number of realizations for ensemble statistics 2N=1000; %number of samples of each realization 3P=30; %sinusoid period (in samples) 4X=zeros(N,M); %pre-allocate space 5Wc=2*pi/P; Phc=pi/5; %carrier frequency and phase, both in radians 6n=0:N-1; carrier=transpose(cos(Wc*n+Phc)); %same for all realizations 7%carrier(carrier>0)=1; carrier(carrier<0)=-1; %if wants square wave 8for m=1:M %for each realization 9 X(:,m)=randn(N,1).*carrier; %WGN modulated by sinusoidal carrier 10end

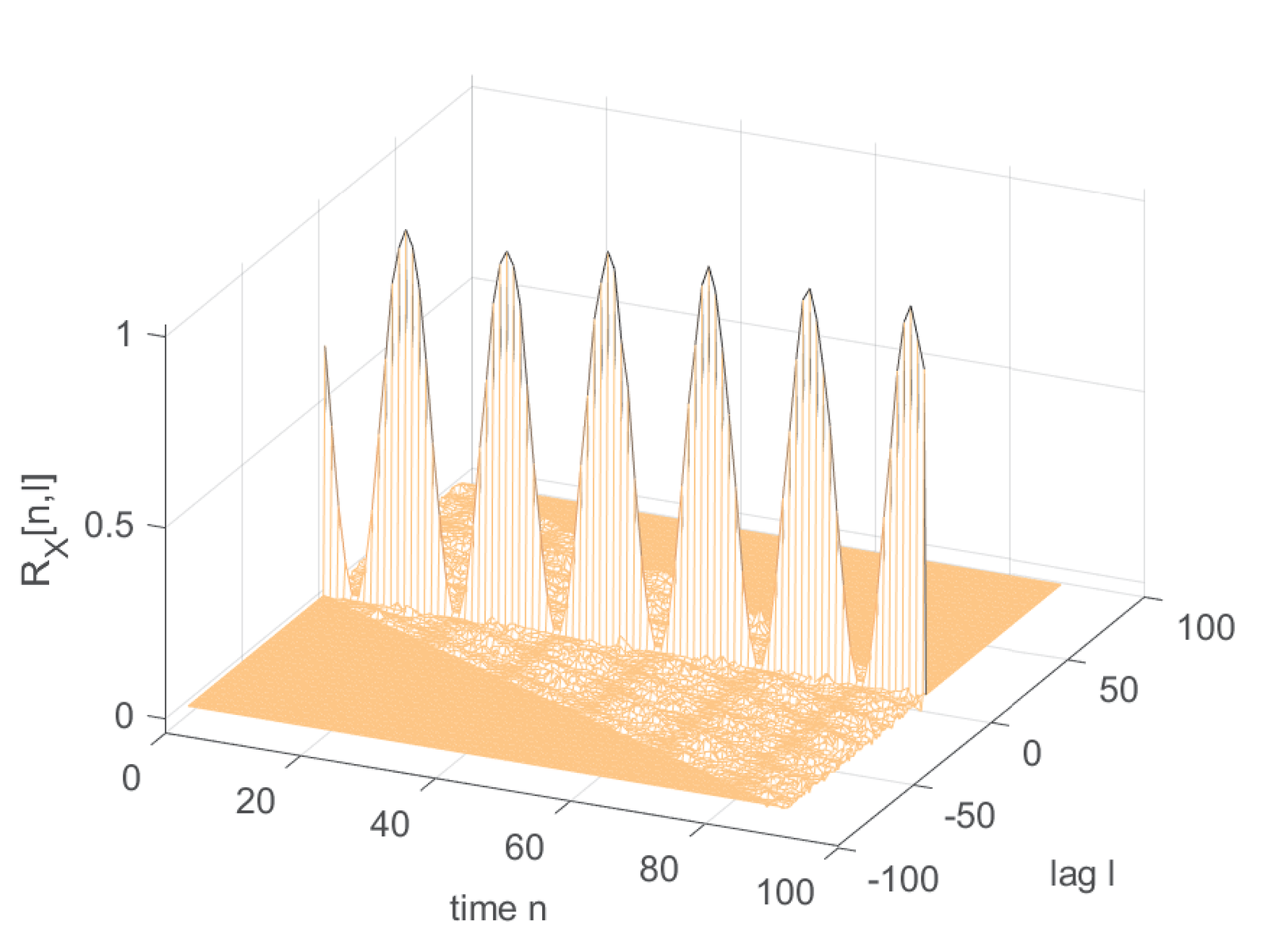

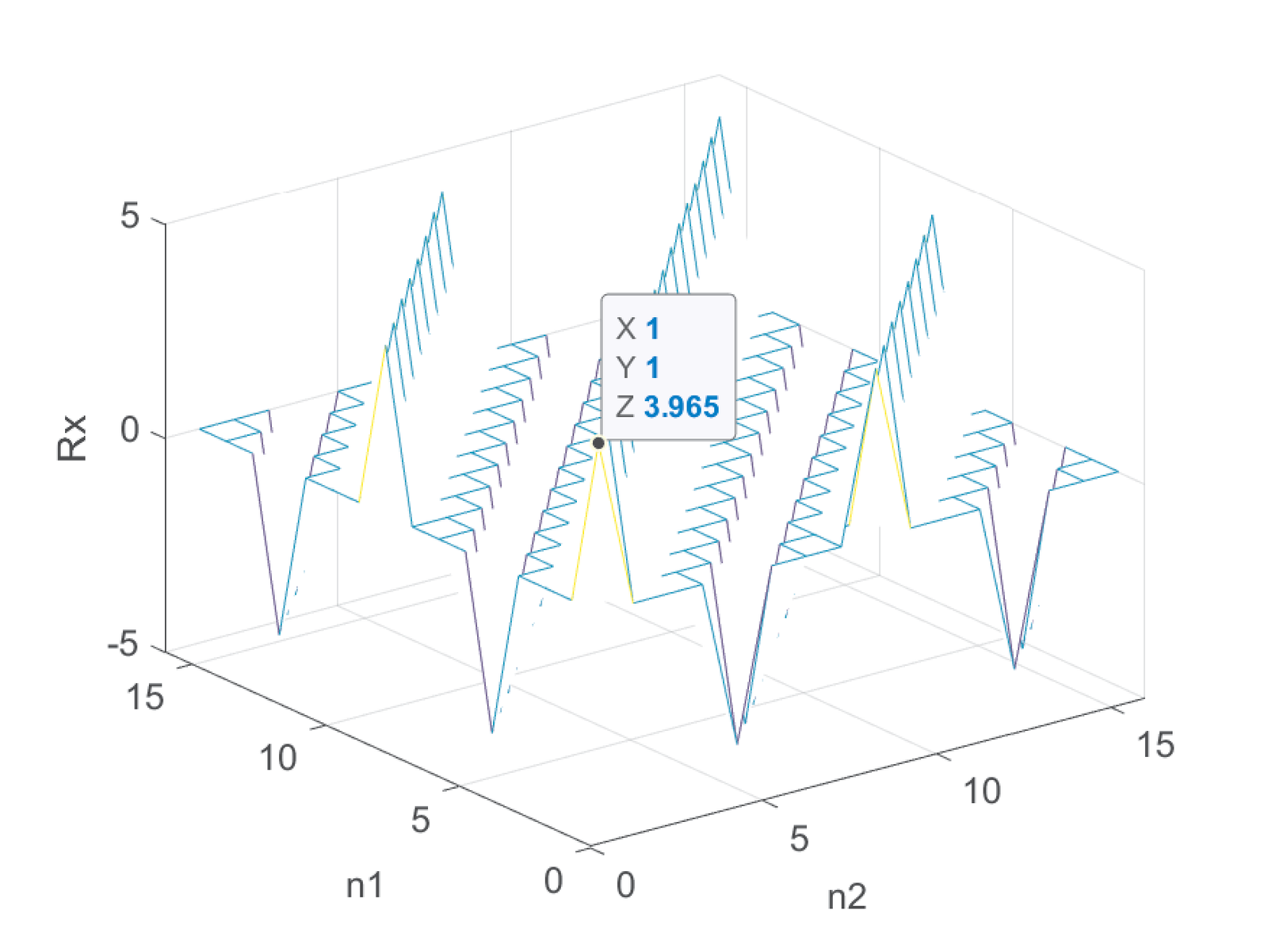

From Eq. (B.34), the correlation of is

which is depicted in Figure B.19(b). The value of is 1 for all when and zero otherwise. Note that Figure B.19(a) is the alternative representation with a pair of absolute time instants .

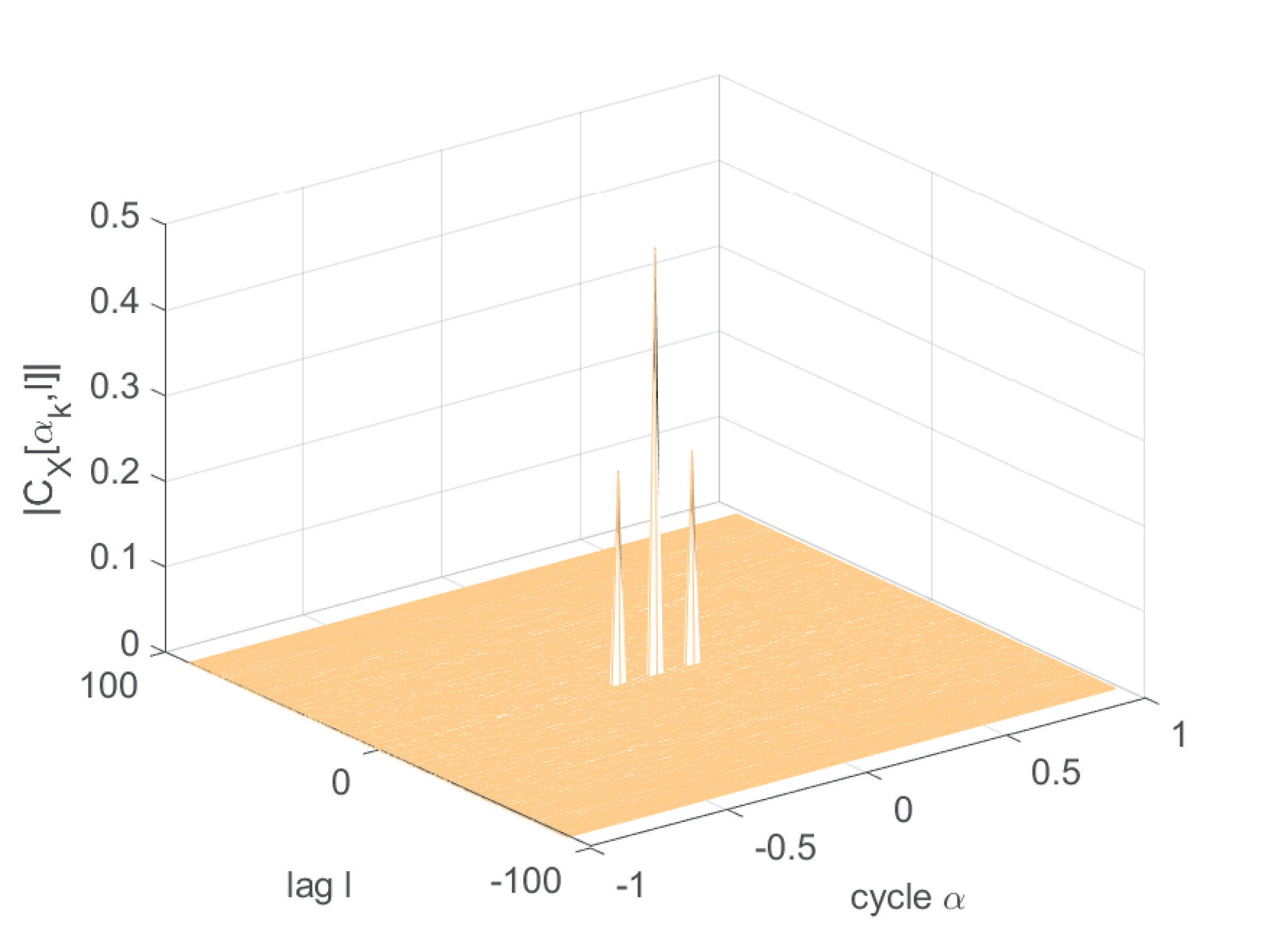

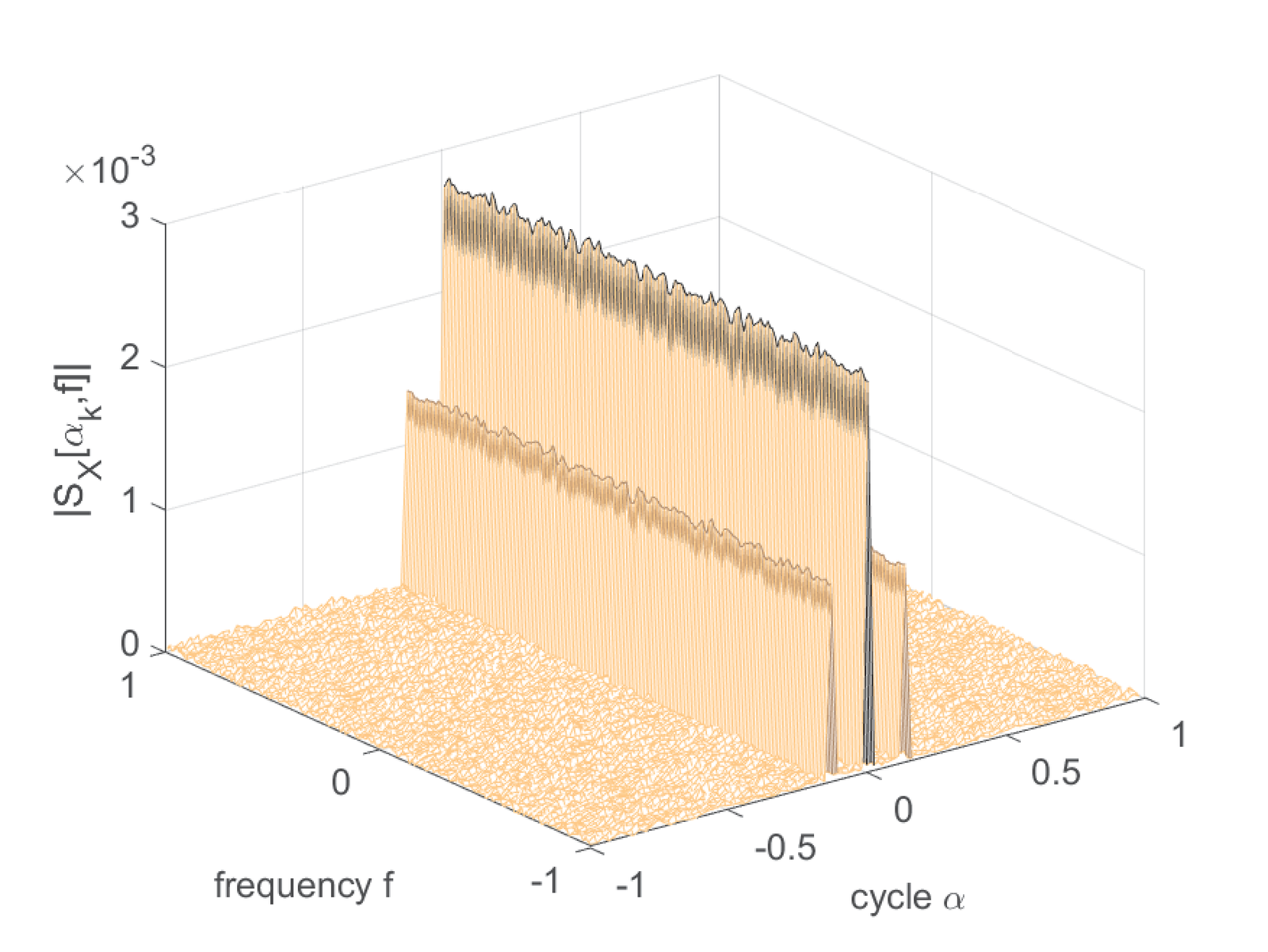

Eq. (B.44) is non-zero only at , where it is a squared cosine . Hence, from Eq. (A.8), its cyclic correlation is . And the cyclic spectrum is obtained by a DTFT for each cycle, which leads to the DC levels in Figure B.19(d).

Eq. (B.43) can be modified to , where is a generic periodic signal. In this case, , where is the Fourier coefficient of , as discussed in [Ant07].

Listing B.4 indicates how the sinusoid frequency and phase can be obtained using the realizations in matrix X created by Listing B.3.

1snip_appprobability_modulatednoise %generate modulated WGN 2alphaFFTLength=3*P; %P is the period, a multiple of P avoids leakage 3[Cxx,alphas,lags] = ak_cyclicCorrelationEnsemble(X, alphaFFTLength); 4onlyPositiveCycles=1; exclude0Alpha=1; %take only alpha>0 in account 5[peakValue,peakAlpha,peakLag] = ak_peakOfCyclicCorrelation(Cxx, ... 6 alphas,lags,exclude0Alpha,onlyPositiveCycles); %get the peak 7WEstimated=peakAlpha/2; %estimated frequency in rad 8phaseEstimated = angle(peakValue)/2; %phase in rad 9phaseOffset=wrapToPi(Phc) - wrapToPi(phaseEstimated); %force [-pi,pi] 10periodEstimated=round(2*pi/WEstimated); %estimate period (samples)

It should be noted that the phase estimation is highly sensitive to the value of alphaFFTLength (relatively, the frequency estimation is more robust). The reader is invited to modify this value and observe the estimation errors. As an example, the following output was obtained with Listing B.4:

Peak value=0.25187*exp(1.2545j) Period: Correct=30, Estimated=30 (samples) Cycle: Correct=0.20944, Estimated=0.20944(rad). Error=2.7756e-17 Phase: Correct=0.62832, Estimated=0.62724(rad). Error=0.0010821

In this example the cyclic correlation was obtained with ensemble statistics. The following discussion concerns estimation using a single realization.

When it is feasible to assume ergodicity in the autocorrelation of a WSC, the estimation can be done with a single realization of samples. In this case and assuming a complex-valued signal, an estimator for the cyclic correlation is:8

|

| (B.45) |

For negative , one can use .

Example B.7. Example: WGN modulated by sinusoid using a single realization. Listing B.5 estimates the cyclic spectrum using a single realization of Example B.6.

1snip_appprobability_modulatednoise %generate modulated WGN 2x=X(:,1); %take one realization 3maxTau=3*P; %maximum lag value (P is the sinusoid period) 4numCycles=4*P; %the cycle resolution is then 2*pi/numCycles 5[Cxx,alphas,lags]=ak_cyclicCorrelation(x,maxTau,numCycles);

The output of Listing B.5 in this case is

Peak value=0.24393*exp(1.3427j) Period: Correct=30, Estimated=30 (samples) Cycle: Correct=0.20944, Estimated=0.20944(rad). Error=0 Phase: Correct=0.62832, Estimated=0.67133(rad). Error=-0.043013

Listing B.5 is similar to Listing B.4, but estimates the cyclic correlation from a single realization instead of ensemble statistics. The main functions for these codes are ak_cyclicCorrelation.m and ak_cyclicCorrelationEnsemble.m, respectively.

In both Listing B.5 and Listing B.4, the duration of the signals was carefully controlled to improve accuracy. More sophisticated algorithms use windowing or estimate the cyclic spectrum directly, via periodograms.

Example B.8. Example: Cyclic spectrum via periodograms. The cyclic spectrum of Eq. (B.42) can be obtained by first estimating the cyclic correlation as in ak_cyclicSpectrum.m, which was used to obtain Figure B.19(d). Alternatively, can be directly estimated via periodograms [Ant07, GG98].

Listing B.6 estimates the cyclic spectrum using a single realization of the modulated WGN in Example B.6.

1P=30; %sinusoid period (in samples) 2N=1e3*P; %number of samples of this realization (force multiple of P) 3Wc=2*pi/P; Phc=pi/5; %carrier frequency and phase, both in radians 4n=0:N-1; carrier=transpose(cos(Wc*n+Phc)); %same for all realizations 5x=randn(N,1).*carrier; %WGN modulated by sinusoidal carrier 6L = length(x); % signal length 7Nw = 128; % window length 8Nv = fix(2/3*Nw); % block overlap 9nfft = 2*Nw; % FFT length 10da = 1/L; % cyclic frequency resolution (normalized freq) 11cycleIndex = round(2*Wc/(2*pi*da)); %find the correct cycle 12a1 = cycleIndex-20; % first cyclic freq. bin to scan (cycle a1*da) 13a2 = cycleIndex+20; % last cyclic freq. bin to scan (cycle a2*da) 14S = zeros(nfft,a2-a1+1); %pre-allocate space 15for k = a1:a2; % Loop over all chose cyclic frequencies 16 spectralObject = cps_w(x,x,k/L,nfft,Nv,Nw,'sym'); %cycle k/L 17 S(:,k-a1+1) = spectralObject.S; %extract S(alpha,f) from object 18end 19alphas = 2*pi*da*(a1:a2); %get all cycles in rad 20sumMagOverFreq=sum(abs(S)); %for each cycle, sum all magnitudes 21indMax=find(sumMagOverFreq == max(sumMagOverFreq),1); 22WEstimated=alphas(indMax)/2; %estimated frequency from cycle of peak 23periodEstimated=round(2*pi/WEstimated); %period in samples 24phaseEstimated=mean(angle(S(:,indMax)))/2;%half averaged peak's phase

Listing B.6 is based on the third-party function cps_w.m,9 which allows calculating for specific values of . This is handy when there is previous knowledge about the cycles of interest.

The output of Listing B.6 is:

Period: Correct=30, Estimated=30 (samples) Cycle: Correct=0.20944, Estimated=0.20944(rad). Error=-2.7756e-17 Phase: Correct=0.62832, Estimated=0.64059(rad). Error=-0.012276

Note that this relatively good result was obtained with N chosen as a multiple of P and, consequently, the sinusoid frequency Wc is a multiple of the cycle resolution da. Changing N from to thousand samples led to:

Period: Correct=30, Estimated=30 (samples) Cycle: Correct=0.20944, Estimated=0.20947(rad). Error=-2.618e-05 Phase: Correct=0.62832, Estimated=0.10469(rad). Error=0.52363

which has an significantly increased phase estimation error. Practical issues when estimating cyclostationary statistics are discussed, for example, in [Ant07].

B.4.2 Two examples of cyclostationary signals

Cyclostationarity is discussed here in the context of digital communications. More specifically, two signals of interest with periodic statistics are:

|

| (B.46) |

which are generated, respectively, by the following processes:

- the output of an upsampler when its input is a discrete-time WSS process;

- the sampled signal obtained by periodic sampling of a continuous-time WSS process.

The upsampler of the former case is discussed in Section 3.17, and its block diagram is repeated here for convenience:

The first goal of this discussion is to indicate that is WSC (wide-sense cyclostationary) while is WSS.

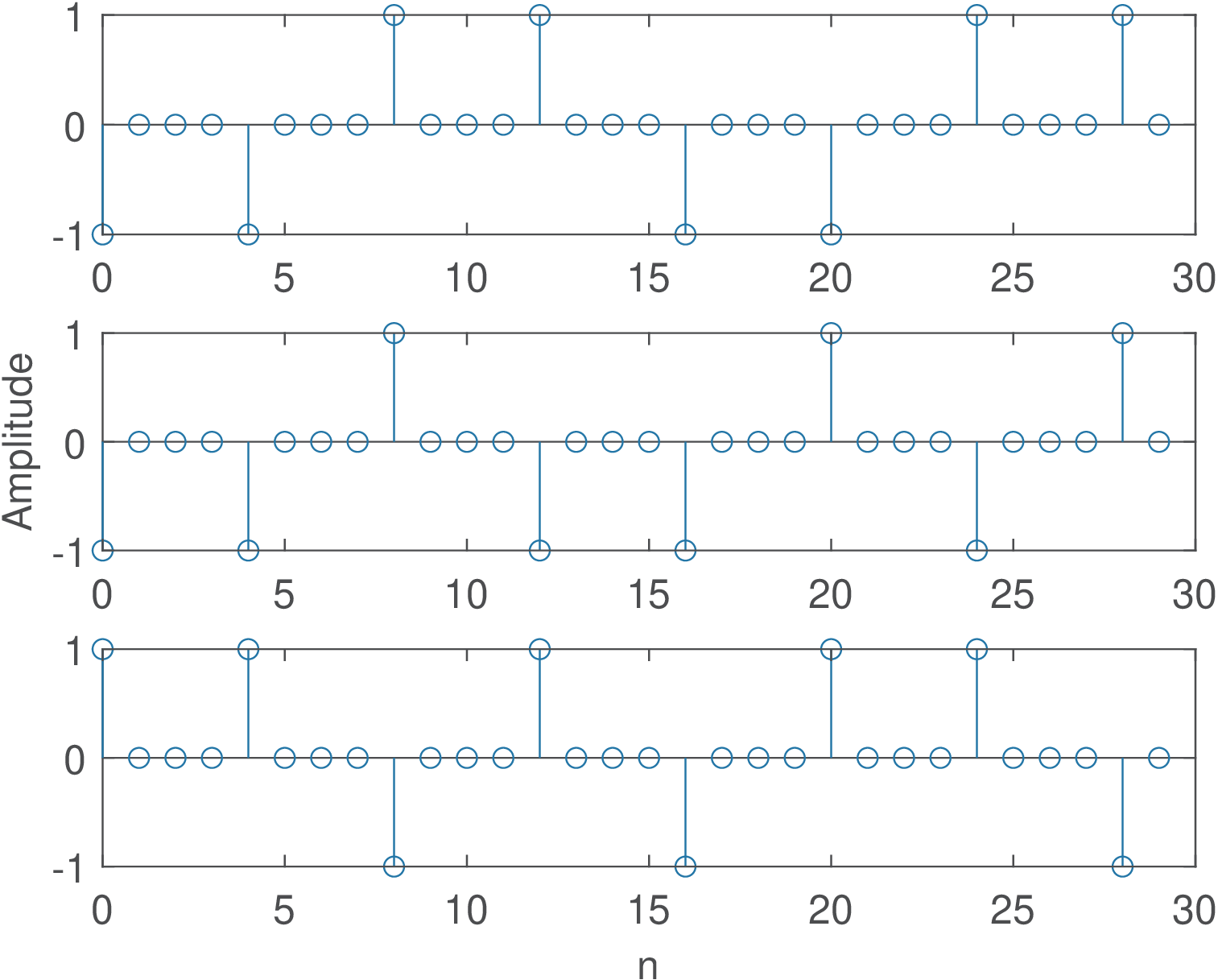

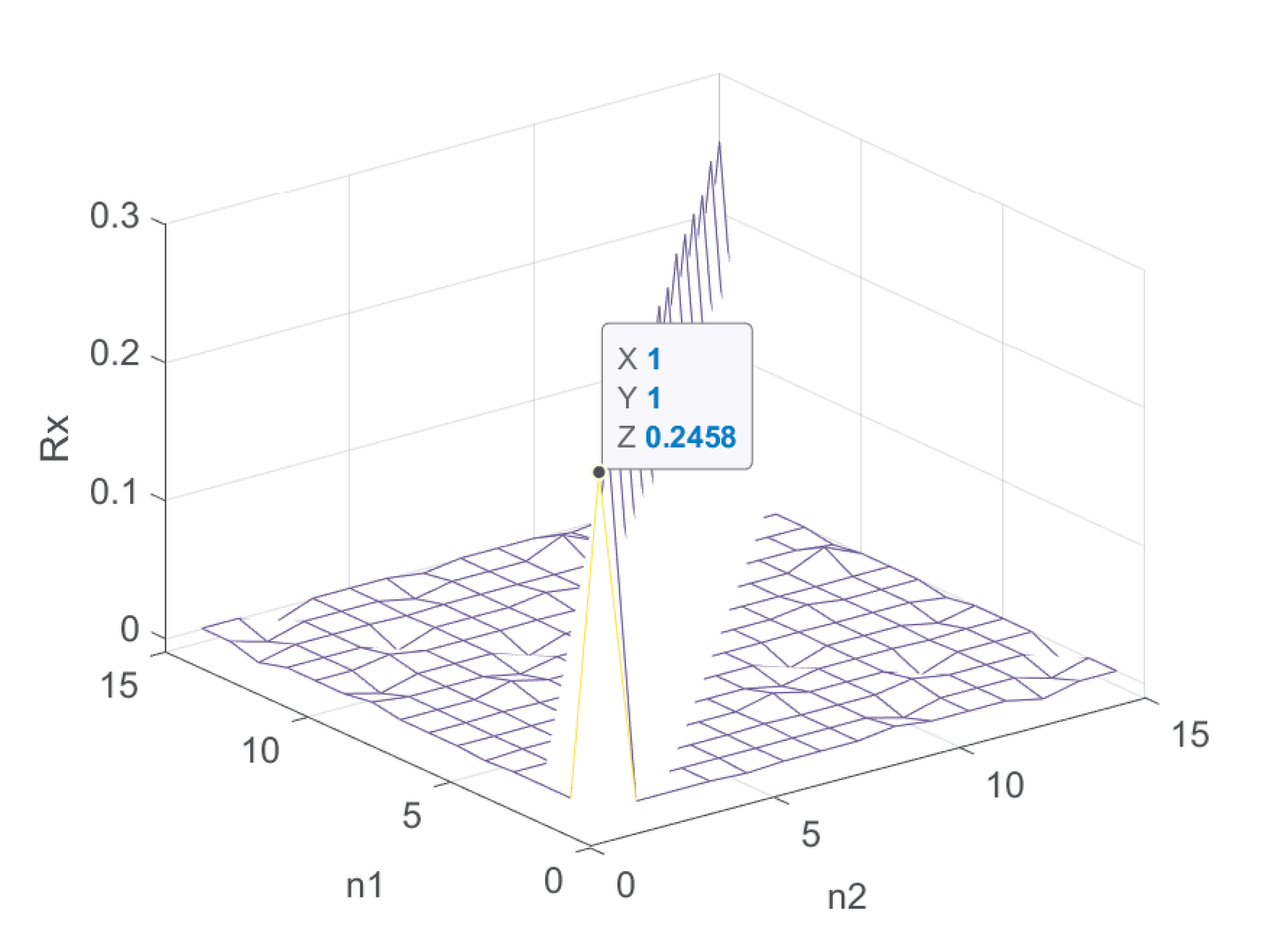

Example B.5 discusses that the input is WSS and presents the corresponding autocorrelations for a polar and unipolar binary signal. But, as informed in Section 3.17, the upsampling by is not a time-invariant operation and the output process cannot be assumed WSS in spite of the input being WSS. This fact can be confirmed by observing Figure B.20 and Figure B.21. Figure B.20 shows that the amplitudes of the upsampled signal obtained from a polar signal with are always zero at times that are not a multiple of . The corresponding autocorrelation in Figure B.21 presents cyclostationarity.

Figure B.21 was generated by Listing B.7. It can be seen that, different from Figure B.11, Figure B.21 cannot be represented by a one-dimensional function of the lag because the corresponding process is WSC, not WSS.

1numRealizations = 500; numSamples = 4000; %500 waveforms 2X=2*[floor(2*rand(numSamples,numRealizations))-0.5]; 3L=4; %oversampling (upsampling in this context) factor 4Xu=zeros(L*numSamples,numRealizations);%pre-allocate space 5Xu(1:L:end,:)=X; %generate upsampled realizations 6[Rx,n1,n2] = ak_correlationEnsemble(Xu,15); %estimate correlation 7mesh(n1,n2,Rx); %3-d plot

Similar to Figure B.21, Figure B.22 corresponds to the autocorrelation of an upsampled signal obtained from a unipolar signal with . In both cases the output processes are not WSS. For example, and in spite of both pairs corresponding to a lag .

The reason for not being WSS is that all realizations have well-defined time instants in which they have value zero and time instants in which there is randomness (the value may not be zero). In other words, the randomness is mixed along time with a deterministic behavior of zero values. For example, at there is randomness, but all realizations have zero amplitude at . At the randomness appears again and so on. Note that the statistics are periodic and the random process is cyclostationary.

B.4.3 Converting a WSC into WSS by randomizing the phase

A WSC can be converted into a WSS by using the trick of “uniformly randomizing the phase”. The following discussion, assumes the two signals and from Eq. (B.46), both representing realizations of WSC processes. The upsampled discrete-time signal is discussed first, and then the results are adapted to the WSC process associated to .

The procedure of “uniformly randomizing the phase” means that is randomly shifted to create a new signal , where is a uniformly-distributed discrete random variable assuming integer values from 0 to (periodic signals require another choice of values for and are discussed later). The process is illustrated as

|

| (B.47) |

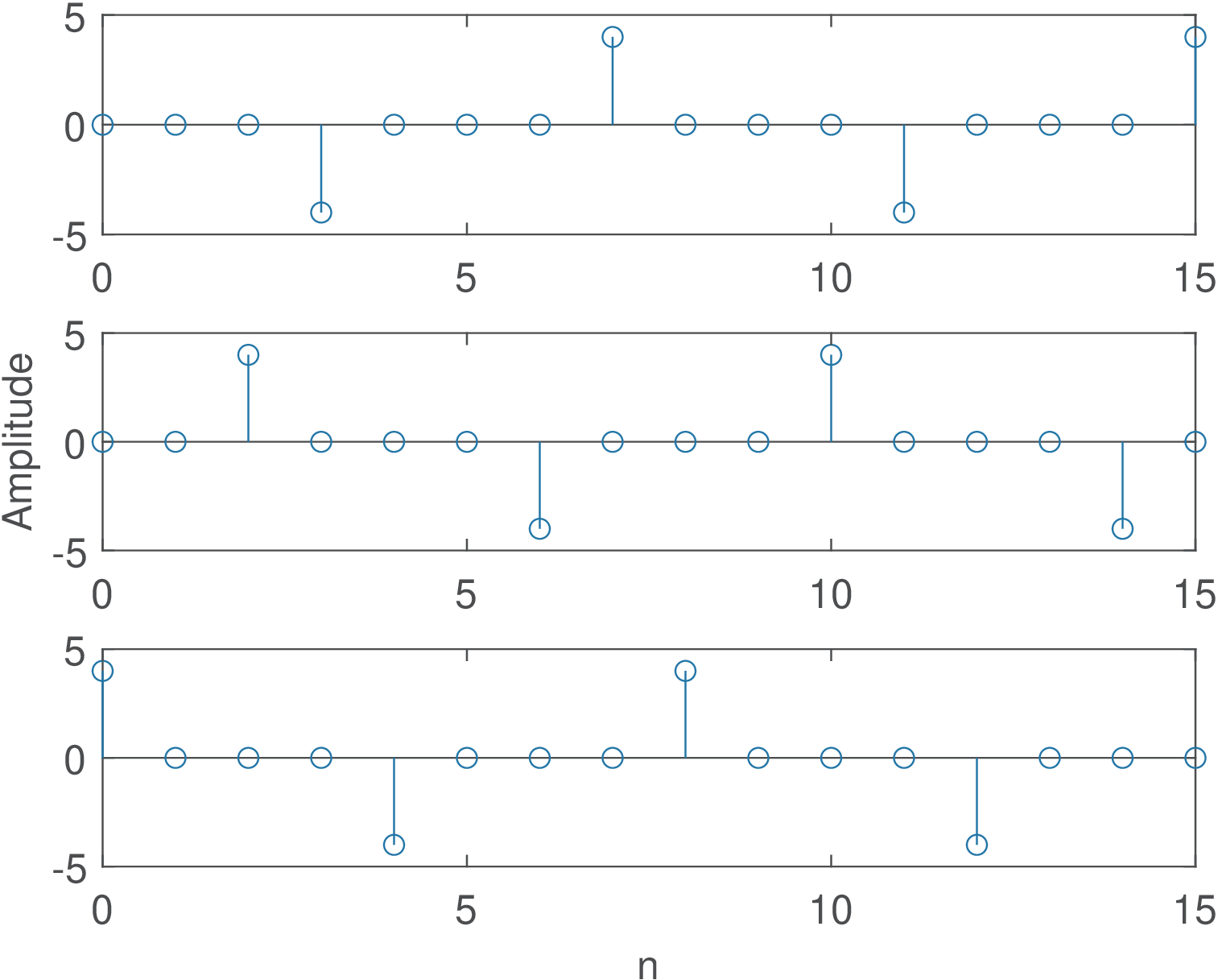

Example B.9. Conversion of polar WSC process into WSS. Based on Block (B.47), Figure B.23 shows three realizations of for a polar10 signal with . The new process with realizations represented by is WSS because the randomness is now spread over time.

Figure B.24 shows the ACF for the same process that generated the realizations in Figure B.23. The random shift turned into a WSS process. Because the product is zero for several pairs of realizations, the original value (obtained for the signal ) decreases to when is considered.

Example B.10. Conversion of upsampled sinusoids into WSS. Another example is useful to illustrate the concept of randomizing the phase. This time the signal is a deterministic sinusoid of period equal to samples. This signal is upsampled by and randomly shifted. In this case, the uniformly distributed r.v. assumes integer values in the range (see ak_upsampleRandomShift.m for an explanation). Some realizations of the resulting random process are depicted in Figure B.25.

Figure B.26 illustrates the correlation of the WSS process corresponding to Figure B.25. Note that the autocorrelation values (recall Eq. (1.60)) of the original sinusoid were reduced by a factor of 2 in Figure B.26. For example, of the original cosine was reduced to .

In fact, from Eq. (3.99) and taking in account that the random shift will add parcels equal to zero (decreasing the autocorrelation values by ), the autocorrelations of and in Block (B.47) are related by

that can be written mathematically as:

|

| (B.48) |

The following example illustrates the use of Eq. (B.48).

Example B.11. Conversion of an upsampled MA(1) process into WSS. Assume the MA(1) process of Example 4.27 is driven by WGN with power and uses the FIR filter . The MA(1) is then upsampled by a factor of and becomes a WSC process. The processing of Block (B.47) is then used to convert it to a WSS. The goal here is to confirm Eq. (B.48).

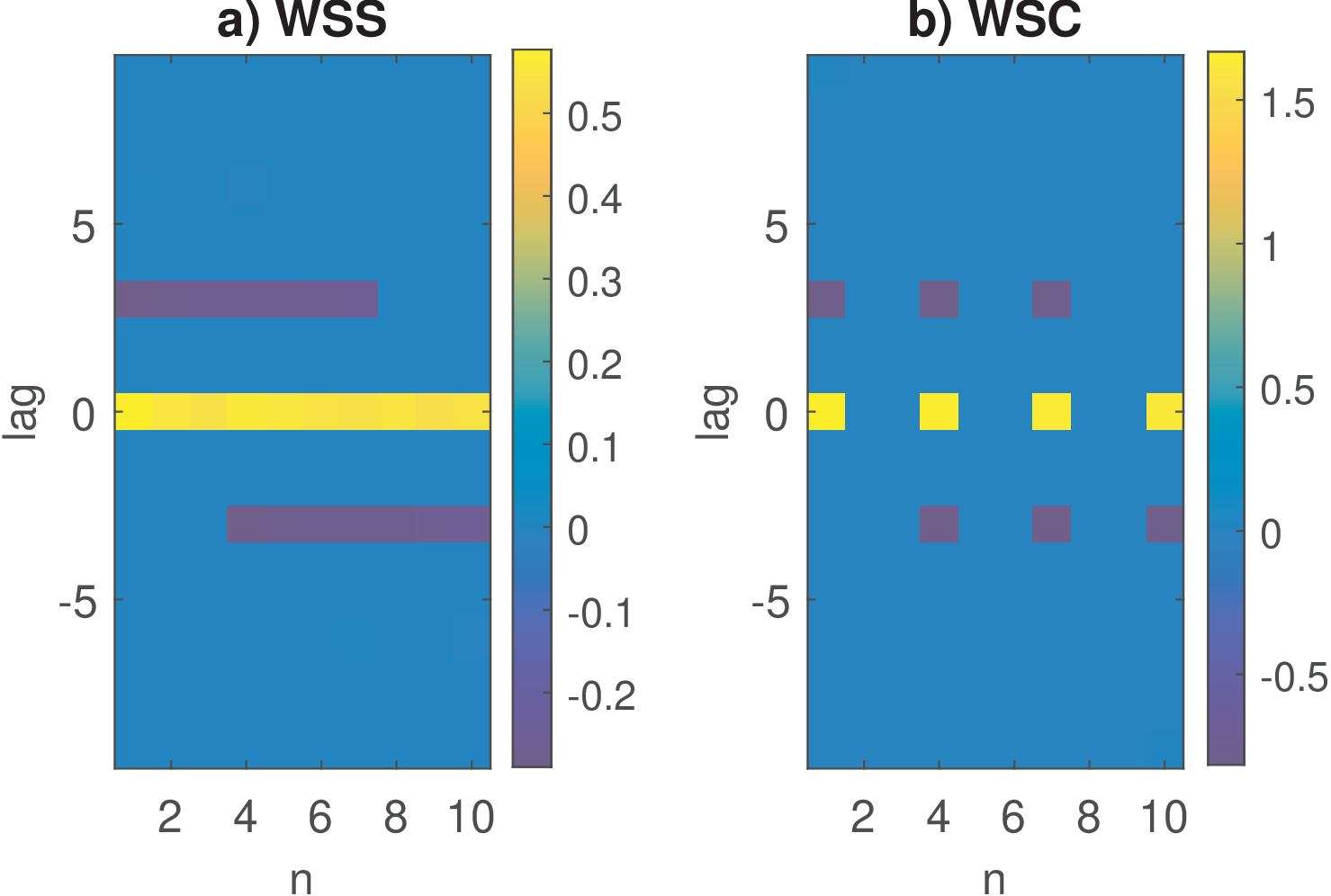

In this case, from Eq. (4.65), the input to the upsampler has autocorrelation . From Eq. (B.48), the autocorrelation of the phase randomizer block has autocorrelation . This can be confirmed with Listing B.8 that generates Figure B.27 (which can then be analyzed using a data cursor).

1B=[1 -0.8]; %Generate MA(1) process with these FIR coefficients 2L=3; %upsampling factor (to upsample the MA(1) process) 3numRealizations = 20000; %number of sequences (realizations) 4numSamples = 300; %number of samples 5%matrix X represents the input random process, add L extra samples 6Z=randn(numSamples+L,numRealizations); %white Gaussian noise 7X=filter(B,1,Z); %implement filtering by LTI system 8X(1:2*length(B),:)=[]; %take out filter transient 9Xup_shifted=ak_upsampleRandomShift(X, L); %upsample & random shift 10[Rxx,n1,n2] = ak_correlationEnsemble(Xup_shifted,10); %get Rxx 11subplot(121), ak_correlationMatrixAsLags(Rxx); %plot as n times lag 12ylabel('lag'); xlabel('n'); colorbar; title('a) WSS') 13Xup = zeros(L*size(X,1),numRealizations); %initialize with zeros 14Xup(1:L:end,:)=X; %effectively upsample 15subplot(122) %now the autocorrelation of the cyclostationary process 16ak_correlationMatrixAsLags(ak_correlationEnsemble(Xup,10)); %plot Rxx 17ylabel('lag'); xlabel('n'); title('b) WSC'); colorbar

Figure B.27 illustrates that the upsampling creates zeros in the autocorrelation over the lag dimension and, that the phase randomization made the value of the autocorrelation independent of the absolute time , as required by a WSS.

Via the phase randomization, dealing with a WSC process has been circumvented, and one can obtain the PSD of in Block (B.47) by calculating the DTFT of both sides of Eq. (B.48):

|

| (B.49) |

Observe that the PSD of is simply obtained via the DTFT of , i. e.,

|

| (B.50) |

such that Eq. (B.49) can be written as

|

| (B.51) |

The second case of a WSC listed in the beginning of this section occurs when a sampled signal is obtained by periodic sampling of a continuous-time WSS process. Similar to Block (B.47), a continuous-time randomization with a uniformly distributed phase can be represented by

|

| (B.52) |

and it turns the sampled signal into a realization of a WSS process.

The PSD of is given by11

|

| (B.53) |

which is similar to Eq. (B.49) and also has the PSD of (not ) provided by Eq. (B.50).

Note that due to the D/C conversion in Block (B.52), is mapped to continuous-time in rad/s using the relation . In this specific case, , which leads to:

Hence, Eq. (B.53) can be written as

|

| (B.54) |

or, alternatively, with in Hz:

|

| (B.55) |

From Eq. (B.50), note that is periodic, as it is a version of with a scaled abscissa. Consequently, are also periodic, in spite of the notation using the independent variables and .