A.19 Probability and Stochastic Processes

This appendix provides a very brief overview of key topics. Readers who want more depth can find many good textbooks on probability and stochastic (or random) processes.

A.19.1 Joint and conditional probability

If two events $A$ and $B$ are statistically independent, their joint probability is the product of their individual probabilities: $P(A,B) = P(A)P(B)$. For instance, the probability of obtaining two heads when tossing a fair coin twice is $P(A,B) = 1/4$, given that $P(A) = P(B) = 1/2$. Given that the event $B$ is tossing the coin for the second time, we can say that the conditional probability of $B$ given that the first tossing event $A$ occurred is $P(B|A) = P(B) = 1/2$ because $A$ does not influence $B$.

In general, the conditional probability is obtained by its definition: $P(B|A) = P(A,B)/P(A)$. Writing this equation as $P(A,B) = P(A)P(B|A)$, one can imagine a causal relation between $A$ and $B$, with $A$ occurring with probability $P(A)$, before $B$, and then $B$ occurring with probability $P(B|A)$ given that $A$ happened.

Starting from the joint probability $P(A,B)$, one can instead pick $B$ as the first event that happens and write $P(A,B) = P(B)P(A|B)$. Combining the two alternatives of writing $P(A,B)$, i.e., $P(A)P(B|A) = P(B)P(A|B)$, leads to

$$P(A|B) = \frac{P(B|A)\,P(A)}{P(B)} \tag{A.65}$$

which is called Bayes' rule (see, e.g., [urlBMpro]).
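The relations among joint probability, conditional probability and Bayes' rule can be checked numerically. The sketch below assumes a hypothetical experiment that is not in the text: one roll of a fair six-sided die, with event `A` meaning "the result is even" and event `B` meaning "the result is greater than 3". Exact fractions are used so that both sides of Bayes' rule can be compared without rounding.

```python
from fractions import Fraction

# Hypothetical experiment (an assumption for illustration): one roll of a fair die.
outcomes = range(1, 7)
A = {o for o in outcomes if o % 2 == 0}  # even result: {2, 4, 6}
B = {o for o in outcomes if o > 3}       # result greater than 3: {4, 5, 6}


def prob(event):
    """Probability of an event when the six faces are equally likely."""
    return Fraction(len(event), 6)


p_A, p_B = prob(A), prob(B)
p_AB = prob(A & B)            # joint probability P(A,B)
p_B_given_A = p_AB / p_A      # definition of conditional probability
p_A_given_B = p_AB / p_B

# Bayes' rule: P(A|B) = P(B|A) P(A) / P(B)
bayes = p_B_given_A * p_A / p_B
print(p_A_given_B, bayes)     # 2/3 2/3
print(p_AB == p_A * p_B)      # False: these two events are not independent
```

In this example the joint probability is 1/3 while the product of the individual probabilities is 1/4, so the events are dependent, and Bayes' rule still holds.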

A.19.2 Random variables

First note that the outcome of a probabilistic experiment need not be a number; it can be any element of a set of possible outcomes. For example, the outcome when a coin is tossed can be “heads” or “tails”. Basically, random variables allow us to map any probabilistic event into numbers, which are then conveniently manipulated using mathematical operations such as integrals and derivatives. A source of confusion is that, strictly, a random variable (e.g., $X$ or $Y$) is a function. More specifically, a random variable (r.v.) is a function that associates a unique numerical value with every outcome of an experiment (the value of a r.v. can be a complex number, a vector, etc., but here it is assumed to be a real number). In math, a function output is often represented as $y = f(x)$. When dealing with a r.v., instead of adopting separate symbols as in $y = f(x)$, both the random variable (equivalent to the function $f$) and its output value (equivalent to $y$) are represented by a single letter (e.g., $X$ or $Y$).

There are two types of r.v.: discrete and continuous. Hence, a r.v. has either an associated probability distribution (discrete r.v.) or probability density function (continuous r.v.).

Assume a discrete r.v. $X$ and a continuous r.v. $Y$. While the former is typically described by a probability mass function (pmf), the latter can be described by a probability density function (pdf).

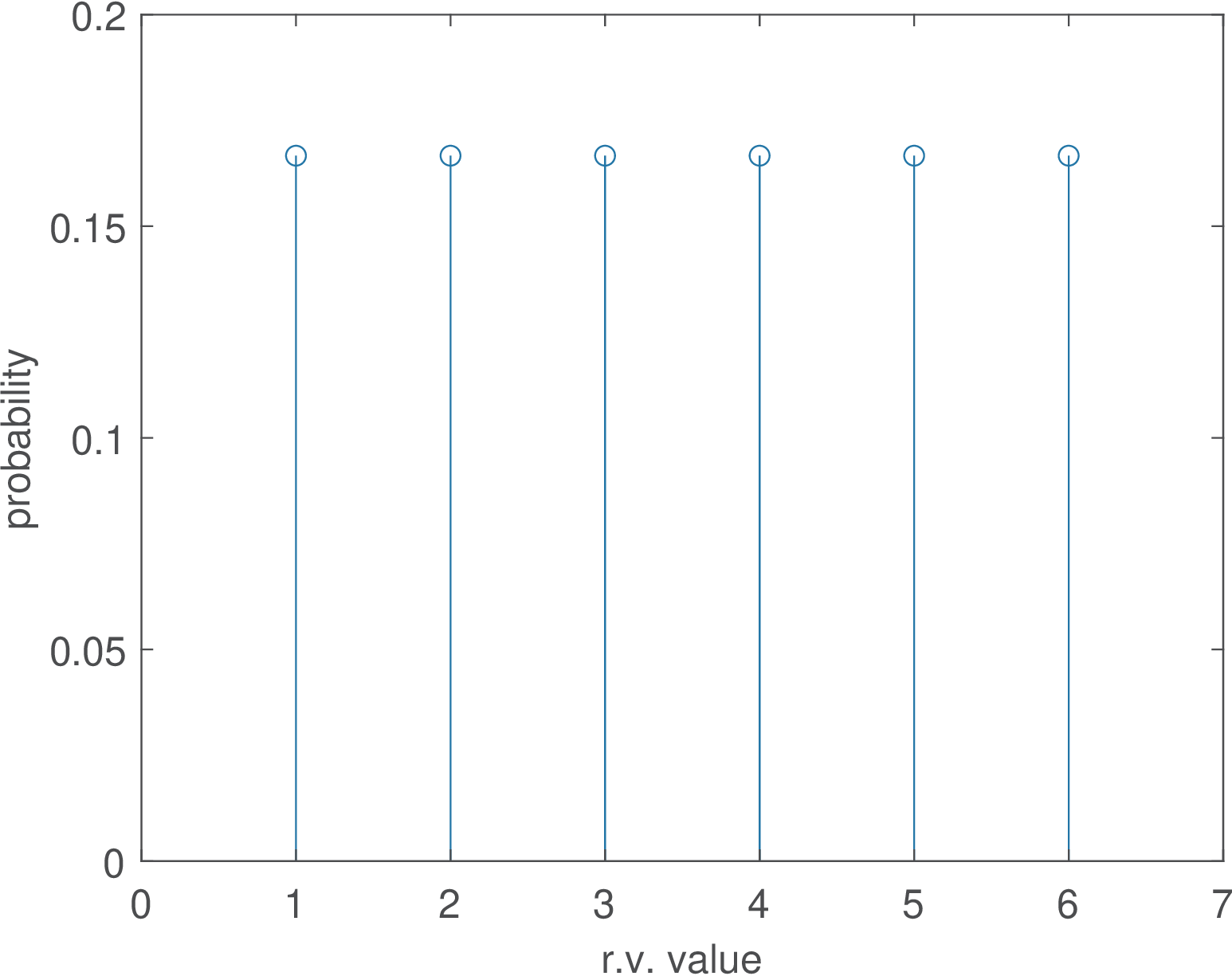

Say that $X$ represents the outcome of rolling a die. Its pmf is shown in Figure A.7 and indicates that each face of a fair die has a probability of $1/6$.

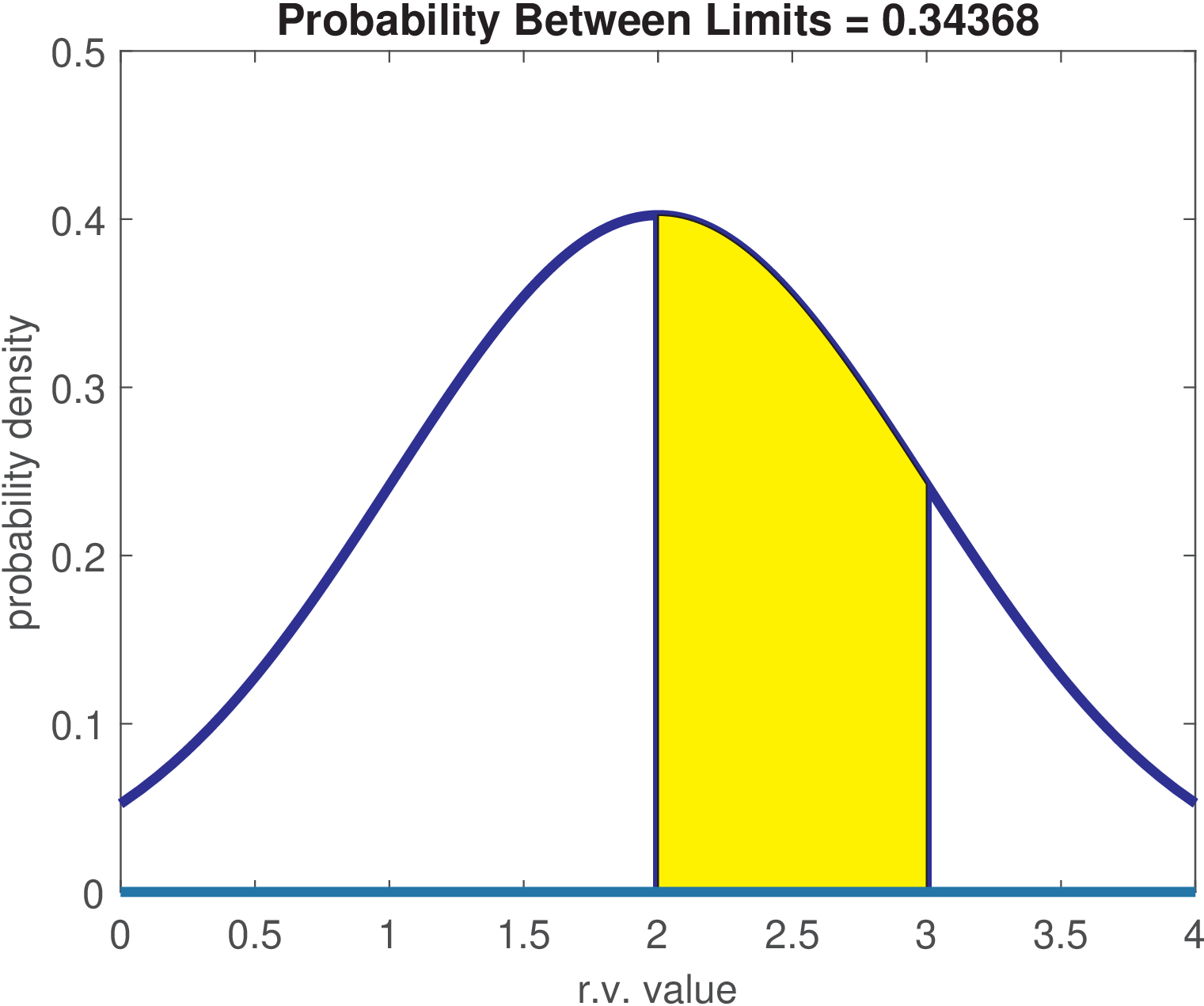

Now consider that $Y$ represents the amplitude of a Gaussian noise source with mean 2 and variance equal to 1. Its pdf $f_Y(y)$ is shown in Figure A.8. A common mistake is to assign a non-zero probability to a specific value of a continuous r.v. by reading it off the density function. For example, it is wrong to say that the probability of $Y = 2$ is $f_Y(2) = 1/\sqrt{2\pi} \approx 0.4$, in spite of this being the value of the function. The function represents a density, and the correct answer is that the probability of $Y = 2$, or any other point, is 0. One can extract a probability from a pdf only by integrating it over a non-zero range of its abscissa. For example, over the range [2, 3] the probability is approximately 0.34, as indicated by the shaded area in Figure A.8.
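The difference between a density value and a probability can be verified with a few lines of code. The sketch below uses the Gaussian with mean 2 and variance 1 from the text; the function names are illustrative, and the probability over [2, 3] is obtained from the Gaussian cumulative distribution written with the standard error function.

```python
import math

MEAN, STD = 2.0, 1.0  # Gaussian noise with mean 2 and variance 1


def gaussian_pdf(y, mean=MEAN, std=STD):
    """Value of the Gaussian density at y (a density, not a probability)."""
    return math.exp(-0.5 * ((y - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def gaussian_cdf(y, mean=MEAN, std=STD):
    """Probability that the Gaussian r.v. is less than or equal to y."""
    return 0.5 * (1.0 + math.erf((y - mean) / (std * math.sqrt(2))))


density_at_2 = gaussian_pdf(2.0)                      # height of the pdf at its peak
prob_2_to_3 = gaussian_cdf(3.0) - gaussian_cdf(2.0)   # area under the pdf over [2, 3]
print(round(density_at_2, 4), round(prob_2_to_3, 4))  # 0.3989 0.3413
```

The first number is the height of the curve at 2, while the second is the area between 2 and 3, which is the only one of the two that is a probability.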

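When dealing with ratios of pdfs it is possible to have the abscissa range cancel out. For example, if a discrete binary r.v. $C$ is used to represent two classes (A and B, for example), and each class has a pdf associated with it ($f(x|A)$ and $f(x|B)$, respectively), Bayes' rule states

$$P(A|x) = \frac{f(x|A)\,\Delta x\,P(A)}{f(x|A)\,\Delta x\,P(A) + f(x|B)\,\Delta x\,P(B)} = \frac{f(x|A)\,P(A)}{f(x|A)\,P(A) + f(x|B)\,P(B)} \tag{A.66}$$

In this case, the abscissa range $\Delta x$ cancels because it appears in both the numerator and the denominator.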
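The cancellation of the abscissa range can be observed numerically. The sketch below is only an illustration under assumptions that are not in the text: the two class-conditional pdfs are taken as unit-variance Gaussians with means 0 (class A) and 2 (class B), and the priors are 0.5 each. The posterior probability of class A is computed with two very different interval widths `dx` and the result does not change.

```python
import math


def gaussian_pdf(x, mean, std=1.0):
    """Gaussian density, used here as a hypothetical class-conditional pdf."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def posterior_A(x, dx, prior_A=0.5, prior_B=0.5):
    """Posterior probability of class A given an observation near x."""
    # Probability of observing a value in a small interval of width dx around x.
    like_A = gaussian_pdf(x, mean=0.0) * dx  # assumed pdf of class A
    like_B = gaussian_pdf(x, mean=2.0) * dx  # assumed pdf of class B
    return like_A * prior_A / (like_A * prior_A + like_B * prior_B)


# The interval width appears in numerator and denominator, so it cancels.
print(posterior_A(0.3, dx=1e-3), posterior_A(0.3, dx=1e-6))
```

At the midpoint between the two assumed means (`x = 1.0`) both classes are equally likely, so the posterior is 0.5 regardless of `dx`.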

A.19.3 Expected value

The expected value operator is the most common mathematical formalism for calculating an average (or mean).

The expected value is a linear operator (see Appendix A.27.1 for more information about linearity), such that

$$E[aX + bY] = a\,E[X] + b\,E[Y] \tag{A.67}$$

for any constants $a$ and $b$ and random variables $X$ and $Y$.
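Linearity also holds for averages computed from samples, which gives a quick way to see the property in action. The sketch below uses hypothetical sample vectors `x` and `y` and constants `a` and `b` chosen only for illustration.

```python
def sample_mean(values):
    """Conventional average of a list of numbers."""
    return sum(values) / len(values)


# Hypothetical realizations and constants (assumptions for illustration).
x = [1.0, 2.0, 3.0, 4.0]
y = [0.0, 2.0, 0.0, 2.0]
a, b = 3.0, -2.0

# Average of the combined samples a*x + b*y ...
left = sample_mean([a * xi + b * yi for xi, yi in zip(x, y)])
# ... equals the same combination of the individual averages.
right = a * sample_mean(x) + b * sample_mean(y)
print(left, right)  # 5.5 5.5
```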

The expected value of a random variable $X$ can be estimated as a typical average when $X$ can be represented by a finite-dimension vector. For example, if $\mathbf{x}$ is a vector with $N$ random samples from $X$, the expected value $E[X]$ can be estimated as $E[X] \approx \bar{x}$, where $\bar{x} = \frac{1}{N}\sum_{n=1}^{N} x_n$ is the conventional mean value.

If one has realizations of a random variable $X$, for instance organized as a vector $\mathbf{x}$, and is looking for the expected value $E[g(X)]$ of a function $g(X)$, it is possible to apply the function to each realization (value of $\mathbf{x}$) and then take their average. For example, assume $g(X) = X^2$ and one is interested in estimating $E[X^2]$ based on $N$ realizations $\mathbf{x} = [x_1, \ldots, x_N]$ of $X$. In this case, applying $g$ to the elements of $\mathbf{x}$ leads to the vector $[x_1^2, \ldots, x_N^2]$, and the average of this vector is the estimate of $E[X^2]$.

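As another example, consider that the mean $\mu = E[X]$ is known (or has been previously estimated), and one is interested in estimating the variance $E[(X-\mu)^2]$. In this case, $g(X) = (X-\mu)^2$ and, if the realizations are still $\mathbf{x}$, which has an average $\bar{x}$ used as $\mu$, applying $g$ leads to the vector $[(x_1-\mu)^2, \ldots, (x_N-\mu)^2]$. In this case the estimated variance is the mean value of this vector.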
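Both estimates can be reproduced with a short script: apply the function to each realization and then average. The realizations in `x` below are hypothetical values chosen for illustration and are not the ones used in the text.

```python
def sample_mean(values):
    """Conventional average of a list of numbers."""
    return sum(values) / len(values)


x = [2.0, 4.0, 4.0, 2.0]  # hypothetical realizations of X (an assumption)

# Estimate E[X^2]: square each realization, then average.
second_moment = sample_mean([v ** 2 for v in x])

# Estimate the variance: subtract the (estimated) mean, square, then average.
mu = sample_mean(x)
variance = sample_mean([(v - mu) ** 2 for v in x])

print(second_moment, mu, variance)  # 10.0 3.0 1.0
```

For these values the estimated variance also equals the estimated second moment minus the squared mean, 10 - 9 = 1.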

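The variance is often denoted as $\sigma^2$, and can be written as

$$\sigma^2 = E[(X-\mu)^2] = E[X^2] - \mu^2 \tag{A.68}$$

which is often interpreted as the mean of the square minus the square of the mean.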

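When a discrete random variable $X$ has $M$ distinct values, its mean can be estimated with

$$E[X] \approx \sum_{i=1}^{M} p_i v_i \tag{A.69}$$

where $p_i$ is the probability of the $i$-th possible value $v_i$. For instance, suppose the $N$ realizations in $\mathbf{x}$ have only $M = 2$ distinct values, $v_1$ and $v_2$, each one with estimated probability $p_i = N_i/N$, where $N_i$ is the number of times $v_i$ occurs in $\mathbf{x}$. Hence,

$$E[X] \approx p_1 v_1 + p_2 v_2.$$

The same result could be obtained by directly taking the mean value of the elements with

$$\bar{x} = \frac{1}{N}\sum_{n=1}^{N} x_n.$$

Note that $x_n$ corresponds to the $n$-th element in $\mathbf{x}$, while $v_i$ is the $i$-th distinct value of $X$.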
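The equivalence between the probability-weighted sum over distinct values and the direct average over all elements can be checked as follows. The realizations below are hypothetical, and exact fractions are used so that the two results can be compared without rounding.

```python
from collections import Counter
from fractions import Fraction

x = [2, 4, 4, 2, 4, 4]  # hypothetical realizations with two distinct values
N = len(x)

# Estimate the probability of each distinct value by its relative frequency.
counts = Counter(x)
pmf = {value: Fraction(count, N) for value, count in counts.items()}

# Mean as a probability-weighted sum over the distinct values.
weighted_mean = sum(p * value for value, p in pmf.items())

# Mean taken directly over all N elements.
direct_mean = Fraction(sum(x), N)

print(pmf[2], pmf[4], weighted_mean, direct_mean)  # 1/3 2/3 10/3 10/3
```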

When $X$ is a continuous random variable, instead of Eq. (A.69) one has its continuous version:

$$E[X] = \int_{-\infty}^{\infty} x\, f_X(x)\, dx$$

where $f_X(x)$ is the probability density function of $X$. In this case, for each value of $x$, the role of the probability $p_i$ in the discrete r.v. case is played by the value $f_X(x)\,dx$, which corresponds to a “weight” that depends on the likelihood of the specific value $x$.

In order to find $E[g(X)]$ for a given function $g(\cdot)$ one can use:

$$E[g(X)] = \int_{-\infty}^{\infty} g(x)\, f_X(x)\, dx \tag{A.70}$$
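The integral for the expected value of a function of a continuous r.v. can be approximated numerically. The sketch below is one possible approach, not the only one: it uses a simple midpoint sum, takes the pdf to be the Gaussian with mean 2 and variance 1 used earlier in this appendix, and truncates the integration range to ten standard deviations around the mean, where the remaining tail area is negligible. The function names are illustrative.

```python
import math


def gaussian_pdf(x, mean=2.0, std=1.0):
    """Gaussian density with mean 2 and variance 1."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def expected_value(g, pdf, lo, hi, steps=20000):
    """Approximate the integral of g(x) * pdf(x) over [lo, hi] with a midpoint sum."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * dx       # midpoint of the k-th subinterval
        total += g(x) * pdf(x) * dx   # g(x) weighted by pdf(x) dx
    return total


mean_est = expected_value(lambda x: x, gaussian_pdf, -8.0, 12.0)
second_moment_est = expected_value(lambda x: x ** 2, gaussian_pdf, -8.0, 12.0)
print(mean_est, second_moment_est)  # close to 2 and 5
```

The second moment is close to 5 because, for this pdf, it equals the variance plus the squared mean, 1 + 4.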

A.19.4 Orthogonal versus uncorrelated

Two random variables $X$ and $Y$ are said to be orthogonal to each other if

$$E[XY] = 0.$$

They are said to be uncorrelated with each other if

$$E[(X - \mu_X)(Y - \mu_Y)] = 0,$$

where $\mu_X = E[X]$ and $\mu_Y = E[Y]$. The above condition is equivalent to

$$E[XY] = E[X]\,E[Y].$$

Note that if one or both of $X$ and $Y$ have zero mean, then the orthogonal and uncorrelated conditions are equivalent.
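The distinction between the two conditions can be illustrated with sample averages. In the sketch below the sample vectors are hypothetical: the zero-mean pair is both orthogonal and uncorrelated, while adding a constant to each vector keeps the pair uncorrelated but makes it non-orthogonal.

```python
def sample_mean(values):
    """Conventional average of a list of numbers."""
    return sum(values) / len(values)


def correlation(x, y):
    """Sample estimate of E[XY]; zero means orthogonal."""
    return sample_mean([xi * yi for xi, yi in zip(x, y)])


def covariance(x, y):
    """Sample estimate of E[(X - mean_X)(Y - mean_Y)]; zero means uncorrelated."""
    mx, my = sample_mean(x), sample_mean(y)
    return sample_mean([(xi - mx) * (yi - my) for xi, yi in zip(x, y)])


# Hypothetical zero-mean samples: orthogonal and uncorrelated.
x = [-1.0, 1.0, -1.0, 1.0]
y = [-1.0, -1.0, 1.0, 1.0]
print(correlation(x, y), covariance(x, y))            # 0.0 0.0

# Same samples with non-zero means: still uncorrelated, no longer orthogonal.
x_shift = [v + 3.0 for v in x]
y_shift = [v + 2.0 for v in y]
print(correlation(x_shift, y_shift), covariance(x_shift, y_shift))  # 6.0 0.0
```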

A.19.5 PDF of a sum of two independent random variables

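If $X$ and $Y$ are independent, $Z = X + Y$ implies $f_Z(z) = f_X(z) * f_Y(z)$, where $*$ denotes convolution. For example, the sum of a bipolar signal ($-5$ and $+5$ V with 0.5 of probability each) with pdf $f_X(x) = 0.5\,\delta(x+5) + 0.5\,\delta(x-5)$ and additive white Gaussian noise (AWGN) with pdf $f_Y(y)$ is a good example because the result is the Gaussian scaled by 0.5 and shifted to the position of each of the original impulses, at $-5$ and 5, i.e., $f_Z(z) = 0.5\,f_Y(z+5) + 0.5\,f_Y(z-5)$.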
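The bipolar signal plus noise example can be checked by simulation. The sketch below assumes a noise standard deviation of 1, which the text does not specify, and compares two estimates of the probability that the sum falls between 4 and 6: one from integrating the pdf formed by two scaled and shifted Gaussians, and one from counting simulated samples. The seed and the number of samples are arbitrary choices.

```python
import math
import random

SIGMA = 1.0  # assumed noise standard deviation (not given in the text)


def gaussian_pdf(v, std=SIGMA):
    """Zero-mean Gaussian density of the noise."""
    return math.exp(-0.5 * (v / std) ** 2) / (std * math.sqrt(2 * math.pi))


def pdf_z(z):
    """pdf of the sum: the noise pdf scaled by 0.5 and shifted to -5 and +5."""
    return 0.5 * gaussian_pdf(z + 5.0) + 0.5 * gaussian_pdf(z - 5.0)


def prob_from_pdf(lo, hi, steps=2000):
    """Integrate pdf_z over [lo, hi] with a midpoint sum."""
    dz = (hi - lo) / steps
    return sum(pdf_z(lo + (k + 0.5) * dz) for k in range(steps)) * dz


# Simulate the sum of a bipolar symbol and an independent noise sample.
rng = random.Random(0)
num_samples = 100_000
z = [rng.choice((-5.0, 5.0)) + rng.gauss(0.0, SIGMA) for _ in range(num_samples)]

prob_simulated = sum(4.0 < v < 6.0 for v in z) / num_samples
prob_analytic = prob_from_pdf(4.0, 6.0)
print(prob_analytic, prob_simulated)
```

The two printed values should agree to within the statistical fluctuation of the simulation, which supports the scaled and shifted Gaussian shape of the resulting pdf.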