1.6 Signal Categorization

This section discusses important categories of signals and their distinctive properties.

1.6.1 Even and odd signals

A signal is called even if , and odd if . The definitions are also valid for a continuous-time signal and, in general, for any function . An even function has the property that , while an odd function has the property that . For instance, the cosine is an even function while the sine is an odd function.

Interestingly, any function can be decomposed into even and odd parts, such that . The two component parts can be obtained as

Similarly, any signal (or ) can be obtained as the sum of an even and odd parcels, which can be found as follows:

|

| (1.14) |

and

|

| (1.15) |

respectively, such that .

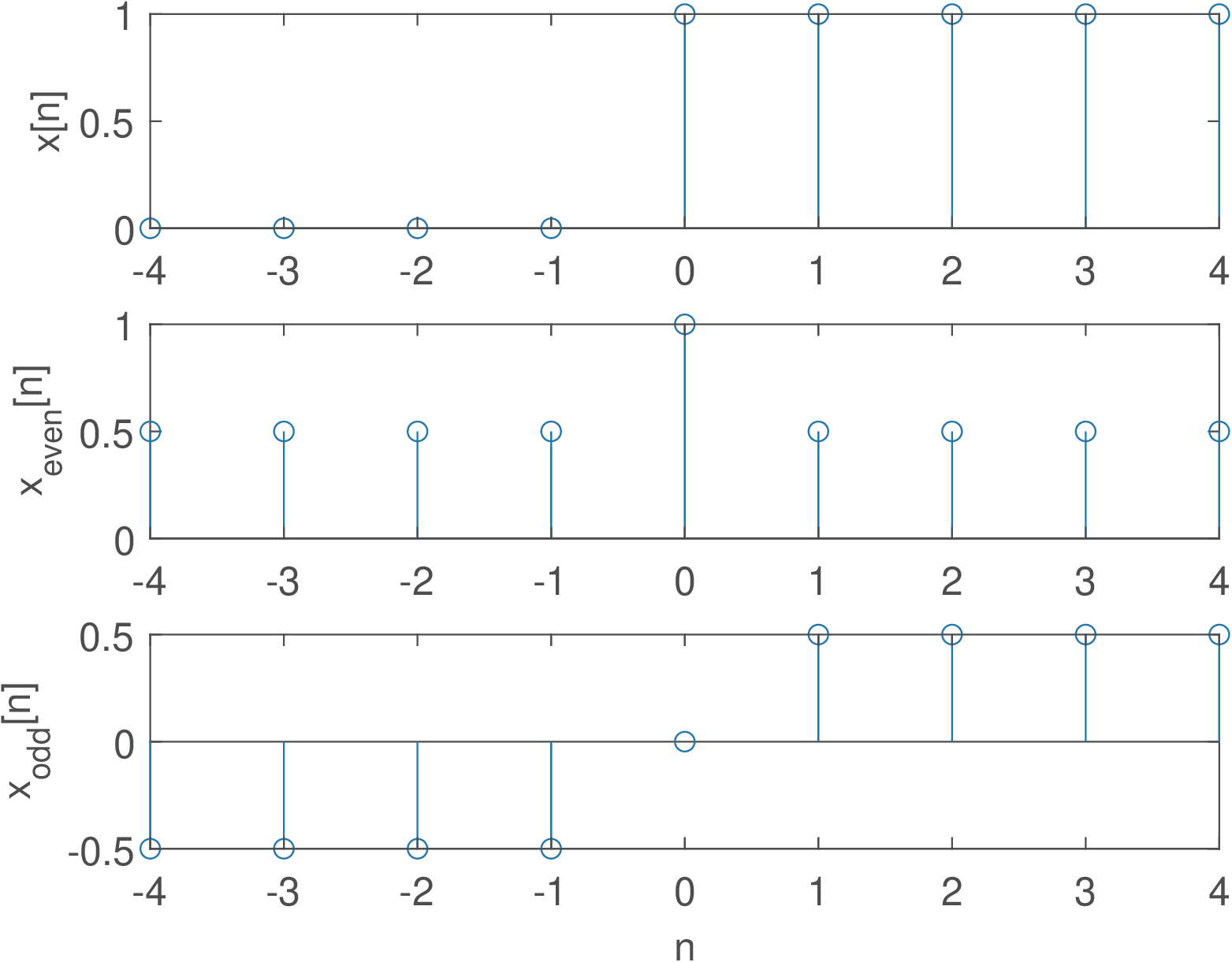

For example, assume the unit step function . Its even and odd parts are and , respectively.

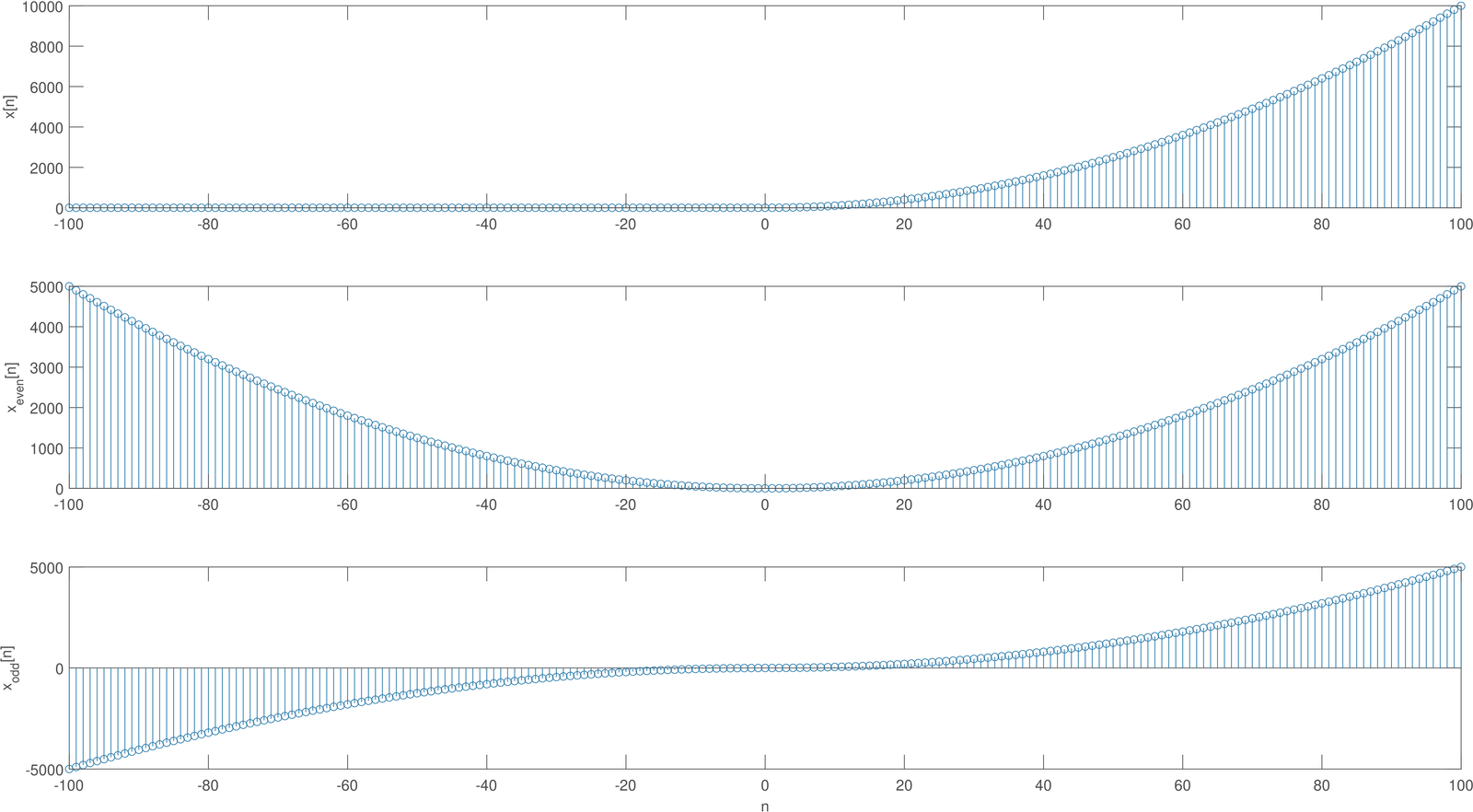

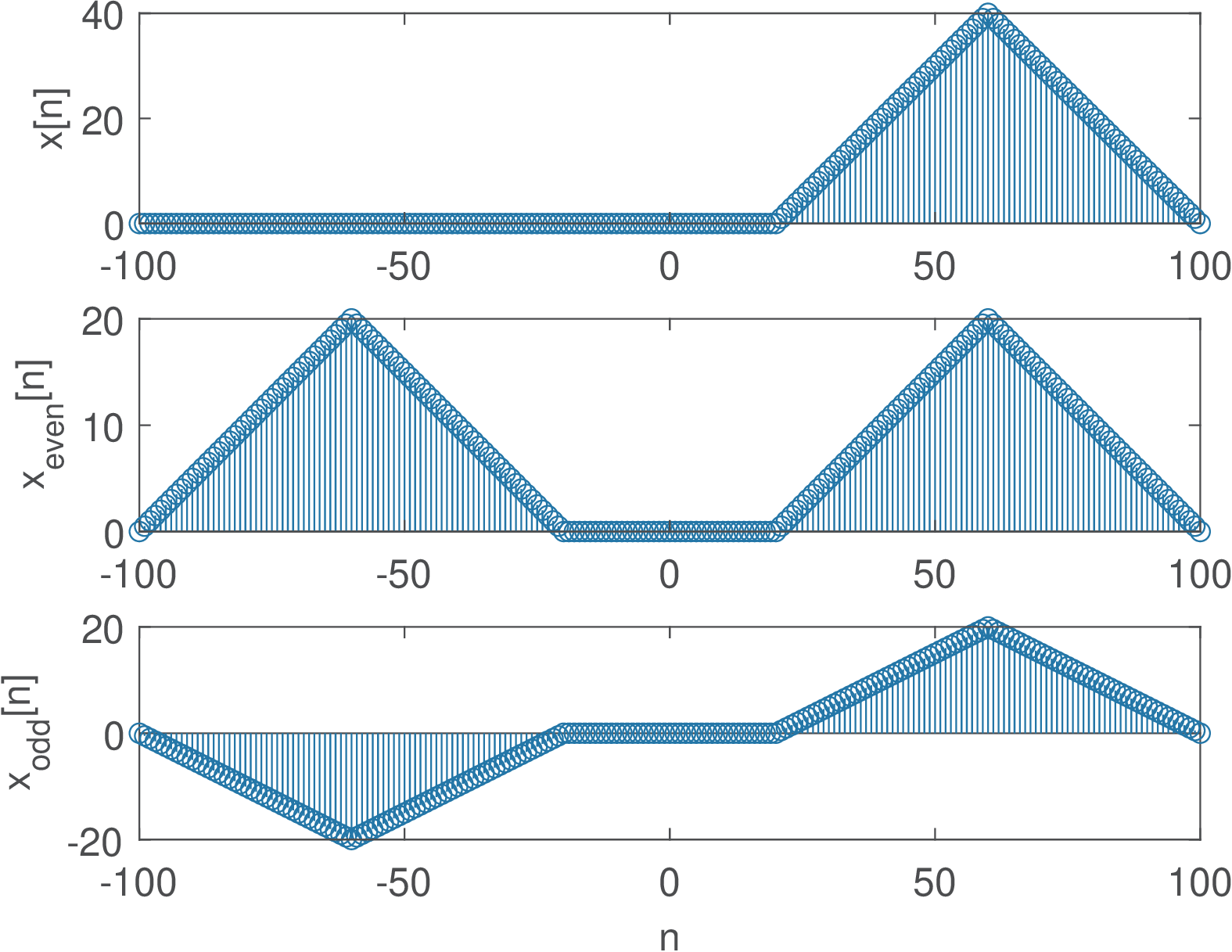

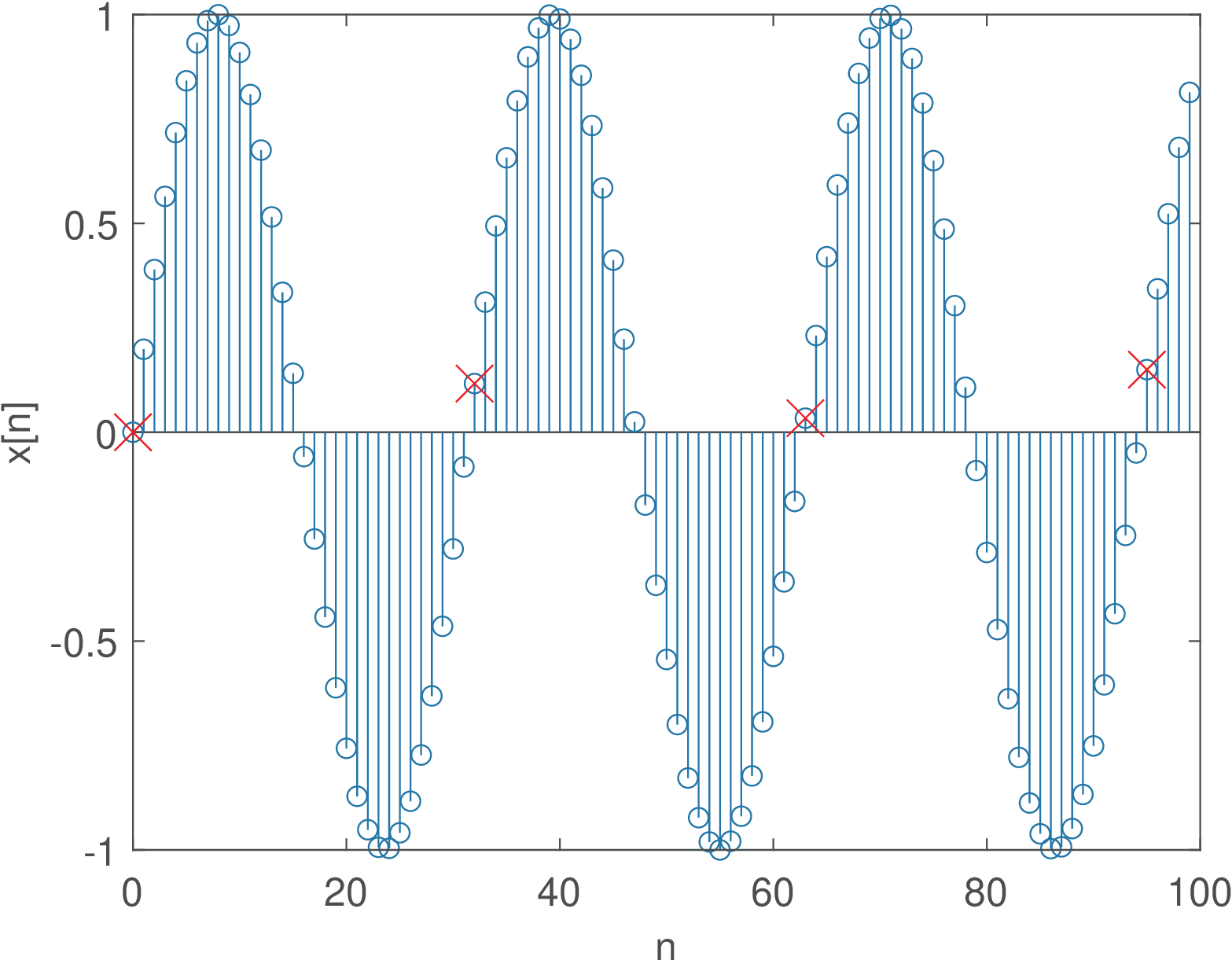

The function ak_getEvenOddParts.m can be used to obtain the even and odd components of arbitrary finite-duration sequences. Three examples are provided in Figure 1.22, Figure 1.23 and Figure 1.24.

1.6.2 Random signals and their generation

Random signals are important to represent noise or other non-deterministic signals. Discrete-time random signals of finite duration can be represented by vectors in which the elements are outcomes of random variables (see Appendix B.3). For example, assuming all elements of [2, 0, 2, 3, 3, 2, 3] are outcomes of the same random variable , one can calculate the average , the standard deviation and other statistical moments of .

Alternatively, a vector with random samples may correspond to a realization of a discrete-time random process (see Appendix B.4).

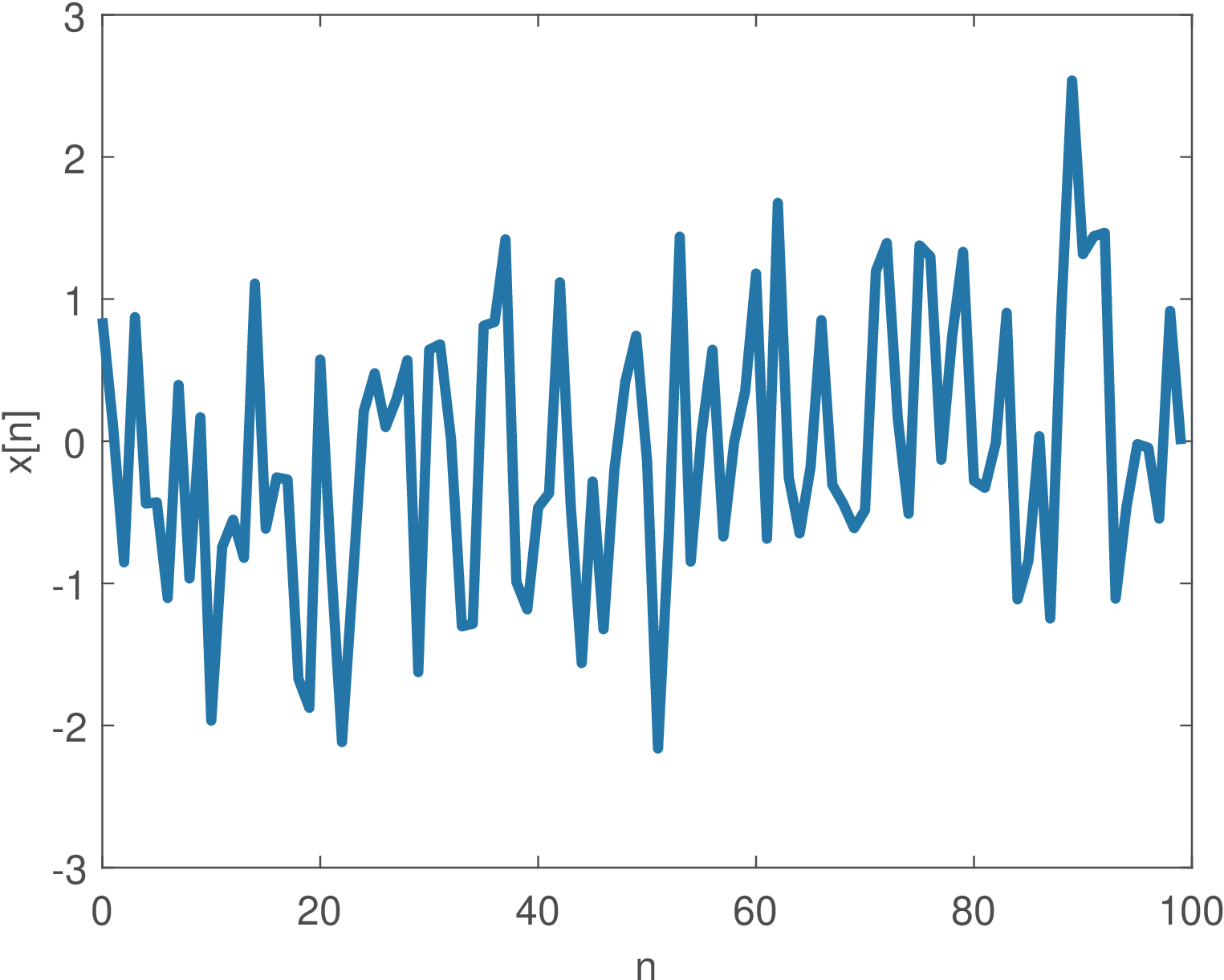

Example 1.11. Random Gaussian signals: generation, waveform and histogram. It is easy to generate random signals in Matlab/Octave. For example, the command x=randn(1,100) generates 100 samples distributed according to a standard Gaussian (zero-mean and unity-variance) distribution , where the notation assumes the second argument is the variance , not the standard deviation . These signals can be visualized as a time-series,9 such as in Figure 1.25.

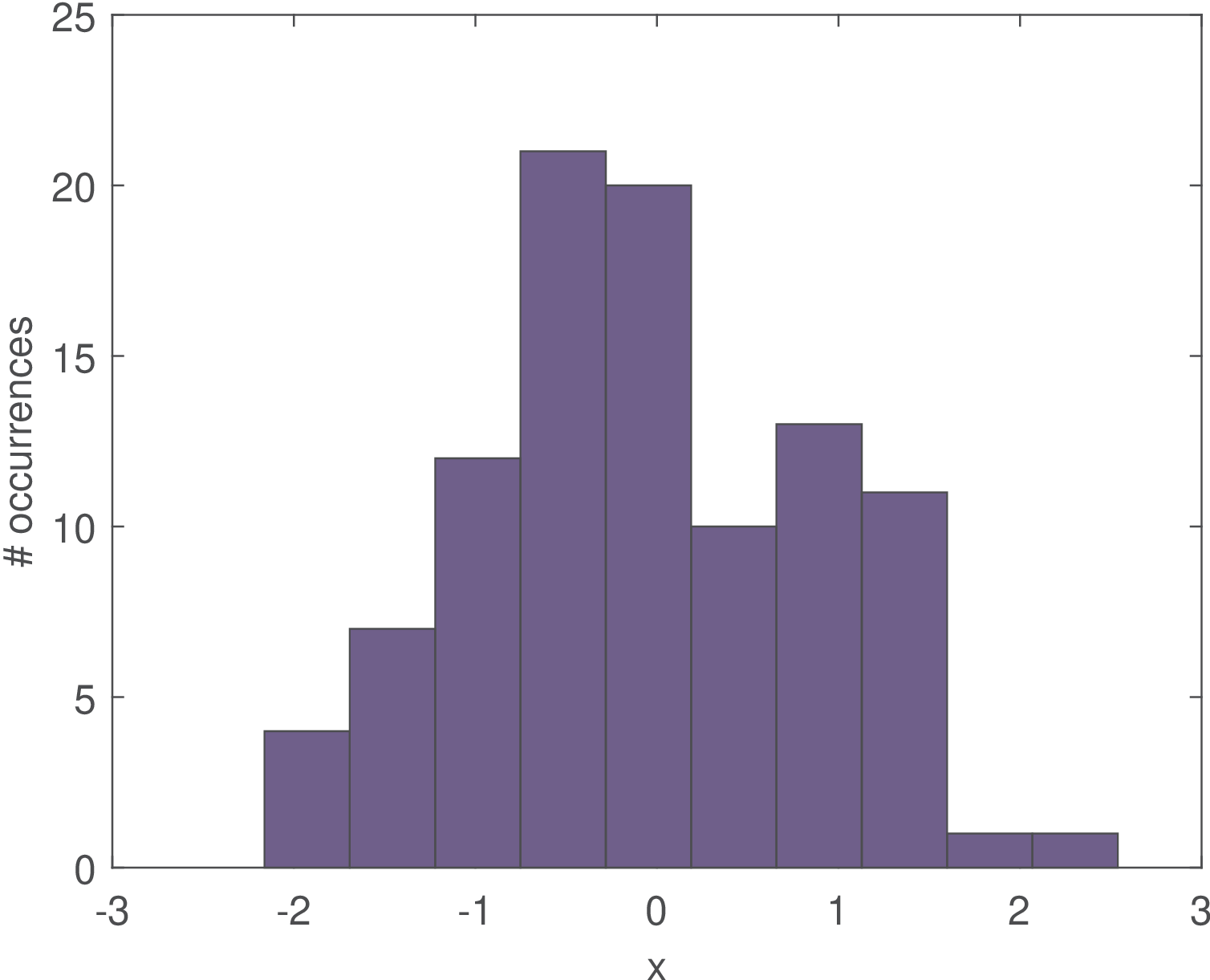

The time-domain visualization can be complemented by plotting the probability distribution of the random signal. Figure 1.26 illustrates the histogram of the signal depicted in Figure 1.25. The histogram is calculated by dividing the dynamic range in bins and counting the number of samples that belong to each bin. Figure 1.26 was obtained by using bins (the default).

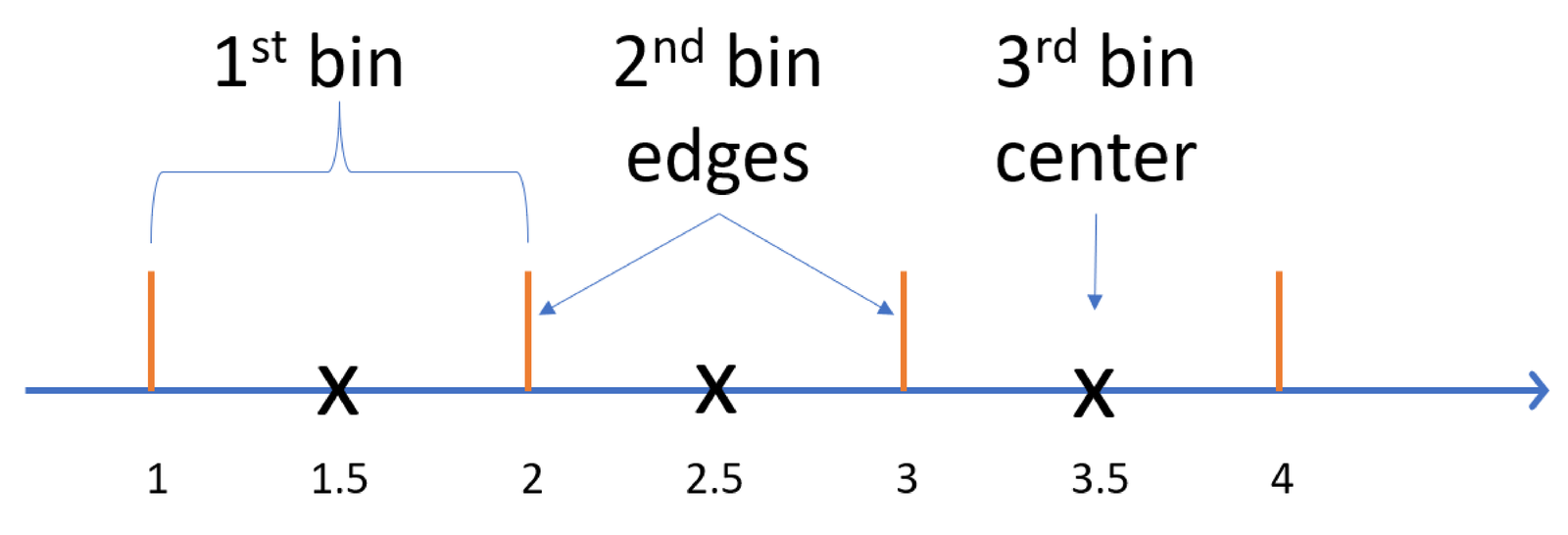

Figure 1.27 can help in case the reader is not familiar with the notion of a histogram bin. An example of Matlab/Octave commands that would lead to these bins are: x=[1, 3.1, 2.6, 4]; [counts,centers] = hist(x,3).

Histograms can also be easily generated with Python. For instance, using numpy one can create a histogram with the bins in Figure 1.27 with the commands:

While Matlab/Octave returns the bin centers, Python’s numpy returns the bin edges. In both languages, the first and last edge values (1 and 4, in the example of Figure 1.27) correspond to the minimum and maximum values of the input signal. The bin width is given by

|

| (1.16) |

which leads to in Figure 1.27.

The histogram indicates the number of occurrences of the input data within the ranges (or bins) indicated in its abscissa. The histogram is also very useful to estimate a probability mass function (PMF) and probability density function (PDF) from available data, which are used for discrete and continuous random variables, respectively.

Example 1.12. Using a normalized histogram as an estimate of the probability mass function. Even when the elements of the input vector x are real values, the histogram calculation “quantizes” these input values into only values, which represent the ranges of the histogram bins. In other words, the bin centers can be seen as the result of a quantization process. Besides peforming this “quantization”, the histogram also indicates the number of occurrences of these values in x.

The center bins of a histogram can be interpreted as the possible distinct values of a discrete random variable. Therefore, normalizing the histogram by the total number of samples provides an estimate of the PMF of this discrete random variable.

For instance, suppose a vector x with N=100 elements. Calculating the histogram and dividing the number of occurrences by N provides an estimate of the PMF of x. In Matlab/Octave, after obtaining the histogram with [occurrences, bin_centers]=hist(x), the PMF can be estimated using stem(bin_centers, occurrences/N).

Normalizing the histogram by the total number of samples provides an estimate of the PMF of a discrete random variable, with values represented by centers of the histogram bins. When this result is further normalized by the bin width, an estimate of the PDF is obtained, as discussed in the next example.

Example 1.13. Using a normalized histogram as an estimate of the probability density function. Sometimes we know the input data x is composed of realizations of a continuous random variable with a known PDF. When the task is to superimpose a PDF estimated from data to the actual PDF, one needs to properly normalize the histogram (see Appendix A.11).

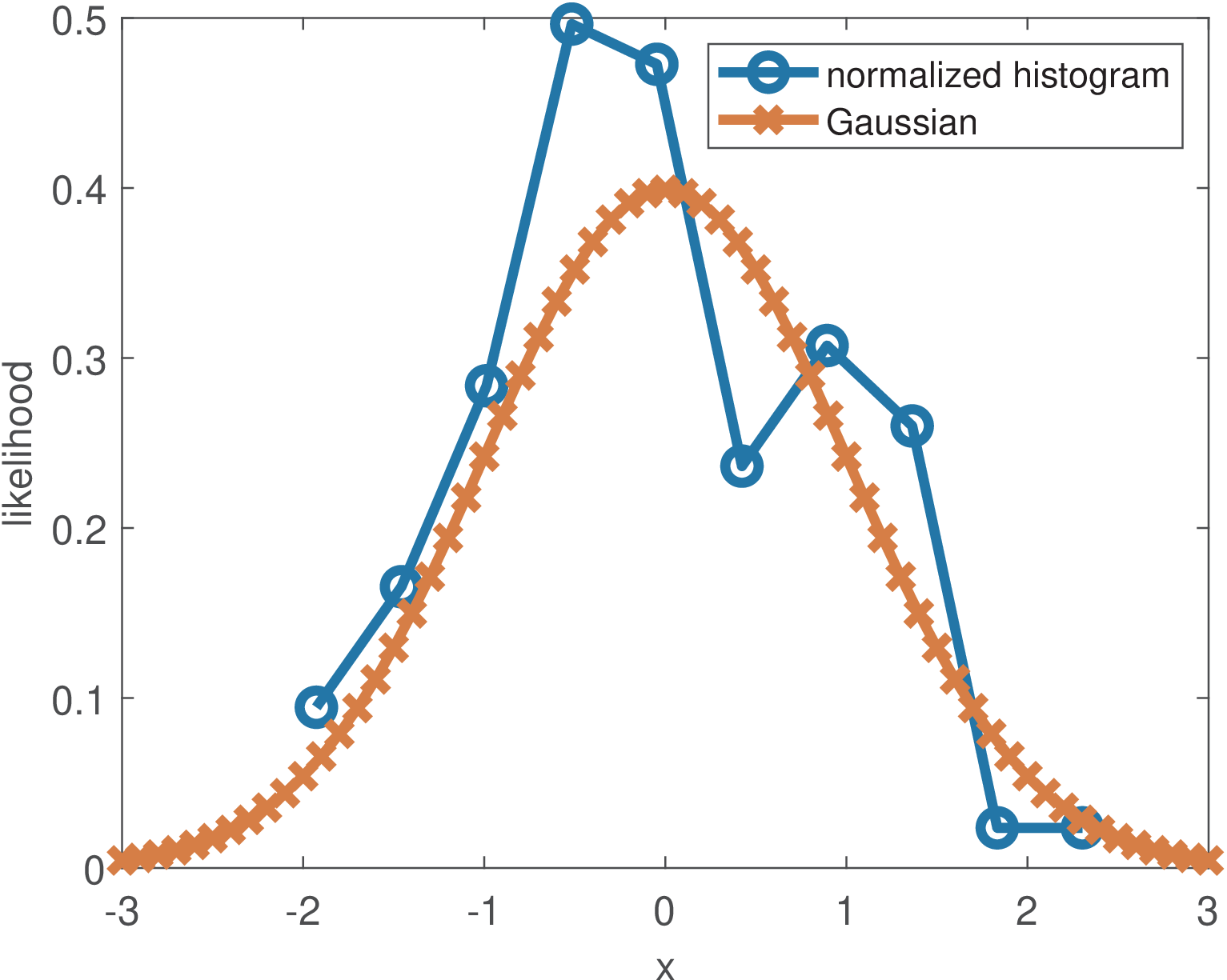

The function ak_normalize_histogram.m can be used to estimate a PDF from a histogram and was used to obtain Figure 1.28 according to the commands in Listing 1.8.

1B=10; %number of bins 2x=randn(1,100); %random numbers ~ G(0,1) 3[n2,x2]=ak_normalize_histogram(x,B);%PDF via normalized histogram 4a=-3:0.1:3; %use range of [-3std, 3std] around the mean 5plot(x2,n2,'o-',a,normpdf(a),'x-') %estimate vs. theoretical PDF

Figure 1.28 indicates that 100 samples and 10 bins provide only a crude estimation.

Example 1.14. Changing the mean and variance of a random variable. Assume a random variable has a mean and of its realizations compose a vector x. If one wants to create a new random variable with , this can be done with , or using the realizations: y=x+3. This is valid for any constant value .

To prove it, observe that the expected value is a linear operator (see, Appendix B.3.3), such that when , one has

|

| (1.17) |

Similarly, if the variance of is , a random variable has variance . The proof is based on Eq. (B.28) and linearity:

Consider now the particular example of the function randn in Matlab/Octave, which generates realizations of a random variable that is distributed according to the normalized Gaussian . Based on Eq. (1.17) and Eq. (1.18), if one creates a new random variable , the mean and variance of are and , respectively.

Considering Matlab/Octave, the command x=sqrt(newVariance)*randn(1,N)+newMean provides Gaussians with arbitrary mean and variance.

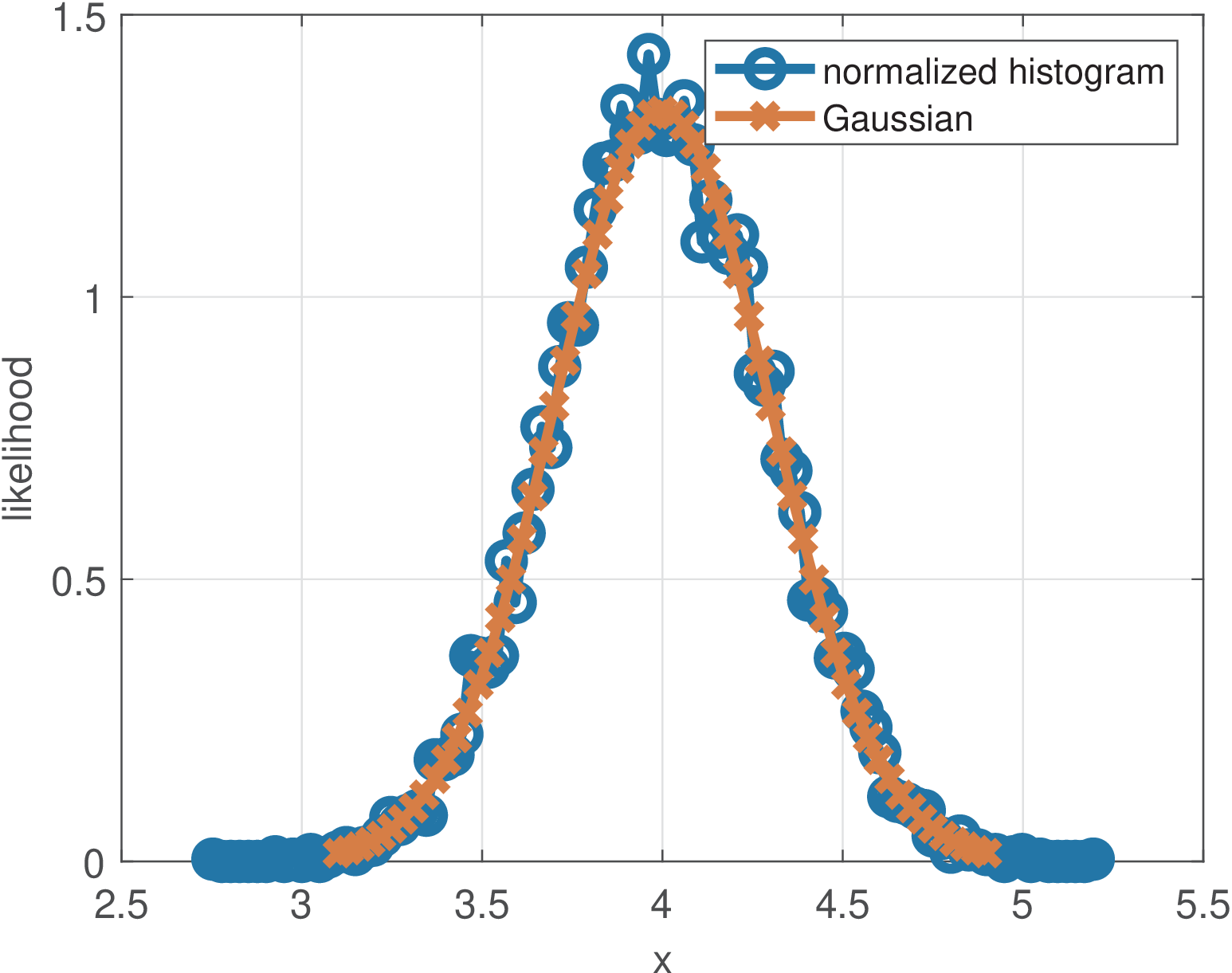

Listing 1.9 was used to generate the samples of and illustrates how to draw samples from a Gaussian with any given mean and variance from calls to a random number generator that outputs samples from a standard Gaussian .

1newMean=4; %new mean 2newVariance=0.09; %new variance 3N=10000; %number of random samples 4x=sqrt(newVariance)*randn(1,N)+newMean;

Figure 1.29 was obtained by using 10 thousand samples from a Gaussian and using 100 bins for the histogram. Now the Gaussian estimation is relatively good when compared to the one depicted in Figure 1.28.

It should be noted that the normalized histogram of a continuous PDF indicates likelihood, not probability. The likelihood function is the PDF viewed as a function of the parameters. Therefore, it is possible to have values larger than one in the ordinate, such as in Figure 1.29.

While the previous example assumed a Gaussian PDF, the next one deals with a uniform PDF.

Example 1.15. Calculating and changing the mean and variance of a uniform probability density function. A random variable distributed according to a uniform PDF with support (range from to of values that have non-zero likelihood) has a mean given by

|

| (1.19) |

and variance:

|

| (1.20) |

Eq. (1.20) can be proved observing that the uniform PDF is a constant over the range and zero otherwise. Hence, using Eq. (B.30) with the function leads to

|

| (1.21) |

where is the PDF support.

When using the random number generator rand for uniformly distributed samples, one should notice that the dynamic range is , i. e., and . Hence, Eq. (1.19) indicates the mean is 0.5 and the variance given by Eq. (1.20) is .

Drawing from rand, it is possible to generate N samples uniformly distributed in an arbitrary range with the command x = a + (b-a) * rand(N,1).

1.6.3 Periodic and aperiodic signals

Periodicity in continuous-time

A signal (same discussion applies to ) is periodic if a given segment of is eternally repeated, such that for some , where is called the period. For example, if seconds and , for all values of .

In the example of , it is easy to check that , and so on. In other words, a signal with period is also periodic in . The fundamental period is the smallest value of for which . Note that the definition imposes and a constant signal is not considered periodic.

Example 1.16. Using the LCM and GCD for a periodic signal composed by commensurate frequencies. Two frequencies and are called commensurate if their ratio can be written as a rational number , where are non-zero integers. Instead of frequencies, one can use their associated time periods.

Assume a signal is composed by the sum of periodic components , each one with period and frequency . The set of frequencies is commensurate if all pairs are commensurate. In this case, the fundamental period of can be found using the least common multiple (LCM) of the periods while the fundamental frequency can be found using the greatest common divisor (GCD) of the frequencies . Assuming both LCM and GCD are defined only for integer numbers, it may be needed to extract a common factor and later reintroduce it. A numerical example helps: let be composed by sinusoids with frequencies , and Hz, which corresponds to periods , and seconds, respectively. To find the LCM, one may need to multiply all periods by 10 and then calculate that . Dividing this result by the factor 10 leads to s. This LCM could be obtained in Matlab/Octave with lcm(lcm(4,60),80) given that this function is limited to accepting only two input arguments.

Periodicity of a generic discrete-time signal

A discrete-time signal is periodic if for some integer . Similar to the continuous-time case, the value is called the fundamental period if it corresponds to the minimum number of samples in which the amplitudes repeat.

Periodicity of discrete-time sinusoids

One important thing is that the discrete-time counterpart of some periodic analog signals may be non-periodic. The next paragraphs discuss the periodicity of discrete-time sinusoids such as , and . For example the signal is periodic with period s. However, the discrete-time signal is non-periodic.

A discrete-time sinusoid such as is periodic only if is a ratio of two integers and as proved below.10 One can write:

If the parcel in previous expression is a multiple of , then . Hence, periodicity requires , which leads to the condition

|

| (1.22) |

for a discrete-time sinusoid to be periodic.

Example 1.17. Checking the periodicity of discrete-time sinusoids. For example, is non-periodic because and cannot be written as a ratio of two integers. In contrast, is periodic with period ( in this case). The signal is periodic because and , with and .

If in Eq. (1.22) is an irreducible fraction, then is the fundamental period. Otherwise, may be a multiple of the fundamental period.

Example 1.18. Finding the fundamental period requires reducing the fraction . For instance, the signal is periodic because and , with and . However, if one is interested on the fundamental period, it is necessary to reduce the fraction to , and obtain the fundamental period as samples.

In summary, when contrasting continuous and discrete-time sinusoids, to find the period of a continuous-time cosine , one can obtain the term that multiplies and calculate

which is given in seconds if is in rad/s. Hence, a continuous-time sinusoid is always periodic. But a discrete-time cosine may be quasi periodic (i. e., not periodic). If someone tries to simply calculate , it may end up with a non-integer period. The condition for periodicity is to be able to write as a ratio of integers, i. e.

where is the period in samples. After turning an irreducible fraction, is then the fundamental period.

Example 1.19. Meaning of when determining the fundamental period. To understand the role of , consider the signal . Assume for a moment that we are forced to consider . With this restriction, could not be the period because it is not an integer. However, if one allows for , which corresponds to three times the number of samples specified by , the result is the integer period samples.

Hence, reflects how many full cycles of the sinusoid are needed before the discrete-time signal starts repeating with perfect alignment at integer time steps. See Example 1.21 for a discussion on finding and using Matlab/Octave.

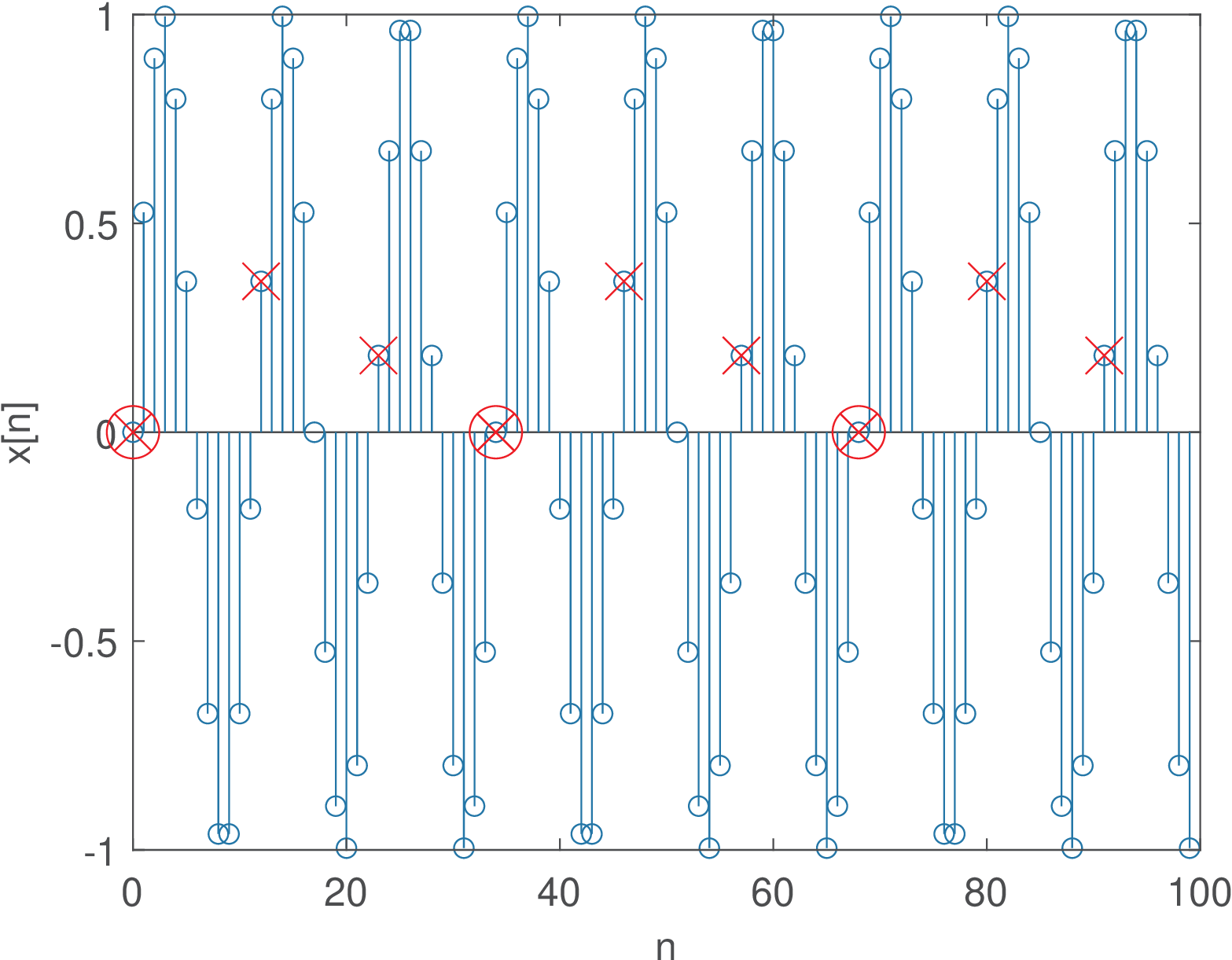

Example 1.20. Almost (quasi) periodic signals. Sometimes, it is misleading to guess the period of a discrete-time cosine or sine via the observation of its graph. Figure 1.30 depicts the graph of and was obtained with the following code:

1M=100, w=0.2; %%num of samples and angular freq. (rad) 2n=0:M-1; %generate abscissa 3xn=sin(w*n); stem(n,xn); %generate and plot a sinusoid

In this case, the signal seems to have a period around 31 samples at a first glance (because ). But, for example, will never be 0 at the beginning of a cycle for a value of other than . Therefore, in spite of resembling a periodic signal, the angular frequency is such that is a irrational number and a cycle of numbers will never repeat. In this case, the signal is called almost or quasi periodic.11

It is useful to visualize a discrete-time sinusoid that is periodic with . Figure 1.31 depicts the graph of and illustrates the repetition of sine envelopes within a period of samples.

Figure 1.30 and Figure 1.31 illustrate how to distinguish strictly periodic sinusoids from quasi periodic, and help interpreting in Eq. (1.22).

Example 1.21. Using rat in Matlab/Octave to find the period of discrete-time sinusoids. The Matlab/Octave function rat for rational fraction approximation can be used for finding and . But care must be exercised because rat approximates the input argument within a given tolerance. The code below illustrates how this function can be used to obtain and :

In this case, the result is m=3, N=10, as expected. However, note that w=0.2,[m,N]=rat(w/(2*pi)) returns m=113, N=3550, which is not precise (recall that if the sinusoid is non-periodic). Modifying the previous command to use a smaller tolerance w=0.2,[m,N]=rat(w/(2*pi),1e-300) gives much larger values for m,N, which clearly indicates that the user must be aware that rat uses approximations. Make sure you can generate discrete-time sinusoids with distinct values of and and understand the roles played by these two values.

1.6.4 Power and energy signals

It is important to understand the concepts of power and energy of a signal. One reason is that, in some cases, the equation to be used for a specific analysis (autocorrelation, for example) differs depending if or are not finite. This section assumes continuous-time but the concepts are also valid for discrete-time signals.

If is the energy dissipated by a signal during a time interval , the average power along is

If is the instantaneous power of , can be calculated as

|

| (1.23) |

If the interval is not specified, it is implicitly assumed (by default) the whole time axis and

In this case, is defined as the limit

|

| (1.24) |

Note that, because the time interval goes to infinite (denominator), is zero unless the energy (numerator) also goes to infinite. This situation suggests the definition of power and energy signals, which have finite power and energy, respectively. Their characteristics are summarized in Table 1.3, which also indicates that there is a third category for signals that have neither finite power or energy.

| Category | Example(s) |

||

| Power signal | finite | and other periodic signals |

|

| Energy signal | finite | 0 | and |

| Neither |

|

The most common power signals are periodic. In this case, the energy in one period

can be used to easily calculate

because what happens in one period is replicated along the whole time axis.

The most common energy signals have a finite duration, such as . Assuming the signals have finite amplitude, their energy in a finite time interval cannot be infinite. Note that infinite duration signals, such as , can also have a finite energy in case their amplitude decay over time.

It is assumed throughout this text that the signals are currents or voltages over a resistance , such that the instantaneous power is

Besides, to deal with signals representing both currents and voltages without bothering about the normalization by , it is assumed that ohm. Hence, the instantaneous power is for any real and, more generally, in case is complex-valued.

Throughout the book, unless stated otherwise, is assumed to be in volts, and in watts and in joules. A dimensional analysis of should not be interpreted directly as watts = , but watts = /ohm, where the normalization by 1 ohm is implicit. Two examples are provided in the sequel.

Example 1.22. Sinusoid power. Sinusoids and cosines can be represented by and are power signals with average power . The phase does not influence the power calculation. The proof follows.

The angular frequency is rad/s, where is the period in seconds.

Using the identity (see Appendix):

The first parcel of the integral is zero, independent of because corresponds exactly to two periods of the cosine with angular frequency , while the second parcel is . The average power is

|

| (1.25) |

which is a result valid for any sinusoid or cosine. This discussion assumed continuous-time signals, but Eq. (1.25) is also valid for discrete-time sinusoids.

Example 1.23. Power of a DC signal. A constant signal (i. e., a DC signal) has power because the energy at any interval is .

The root-mean-square (RMS) value of any signal is the DC value that corresponds to the same power of , i. e., or, equivalently, For example, the RMS value of a cosine is because a DC signal has the same average power as .

As discussed in Section B.6.4, is a distribution and it is tricky to define the energy or power of a sampled signal, which is the topic of Section 3.5.2.