1.9 An Introduction to Quantization

Similar to sampling, quantization is very important, for example, because computers and other digital systems use a limited number of bits to represent numbers. In order to convert an analog signal to be processed by a computer, it is therefore necessary to quantize its sampled values.

Quantization is used not only when ADCs are involved. Whenever it is necessary to perform an operation representing the numbers with less bits than the current representation, this process can be modeled as quantization. For instance, an image with the pixels represented by 8 bits can be turned into a binary image with a quantizer that outputs one bit. Or an audio signal stored as a WAV file can be converted from 24 to 16 bits per sample.

1.9.1 Quantization definitions

A quantizer maps input values from a set (eventually with an infinite number of elements) to a smaller set with a finite number of elements. Without loss of generality, one can assume in this text that the quantizer inputs are real numbers. Hence, the quantizer is described as a mapping from a real number to an element of a finite set called codebook. The quantization process can be represented pictorially by:

The cardinality of this set is and typically , where is the number of bits used to represent each output . The higher , the more accurate the representation tends to be.

A generic quantizer can then be defined by the quantization levels specified in and the corresponding input range that is mapped to each quantization level. These ranges impose a partition of the input space. Most practical quantizers impose a partition that corresponds to rounding the input number to the nearest output level. An alternative to rounding is truncation and, in general, the quantizer mapping is arbitrary.

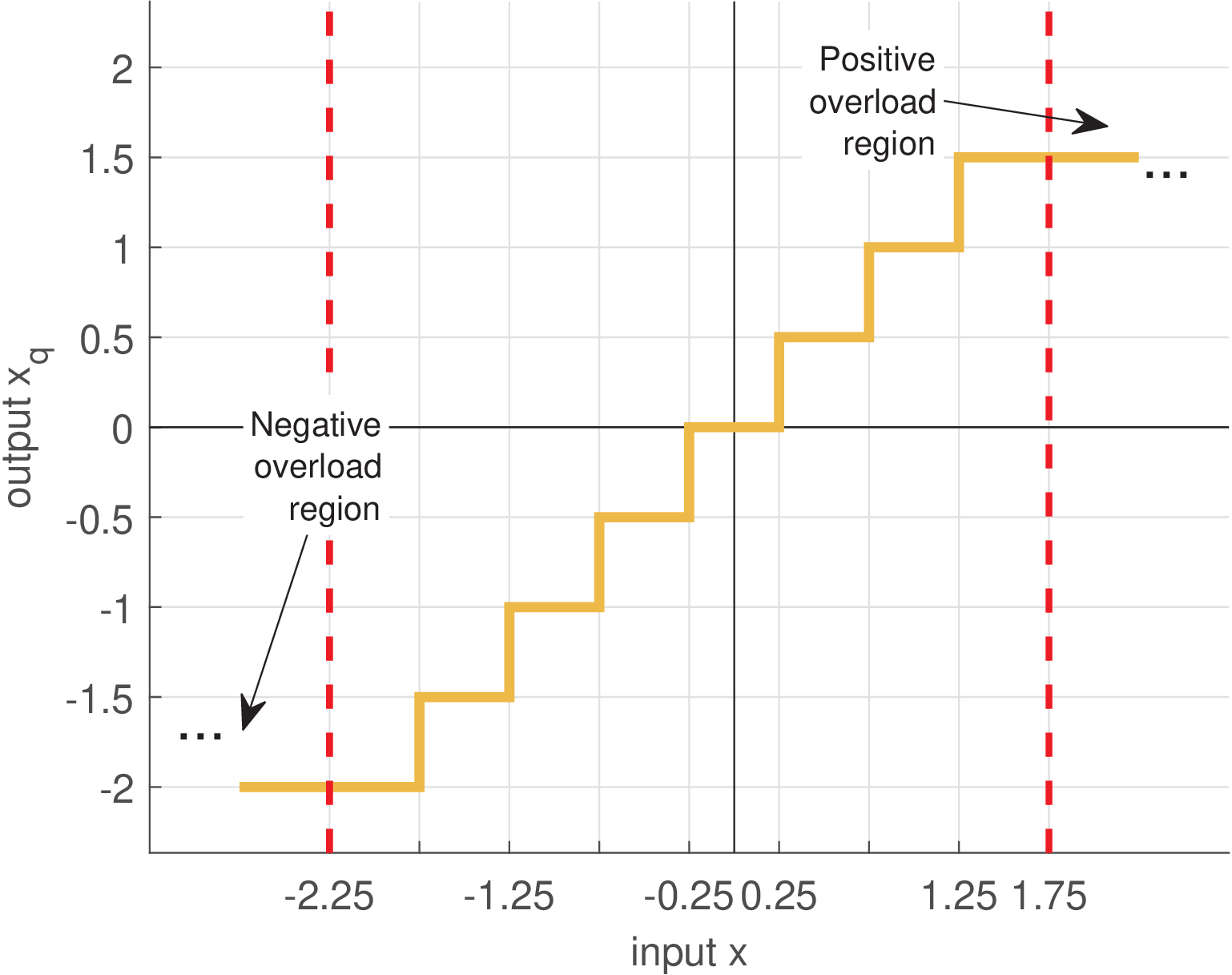

The input/output relation of most quantizers can be depicted as a “stairs” graph, with a non-linear mapping describing the range of input values that are mapped into a given element of the output set .

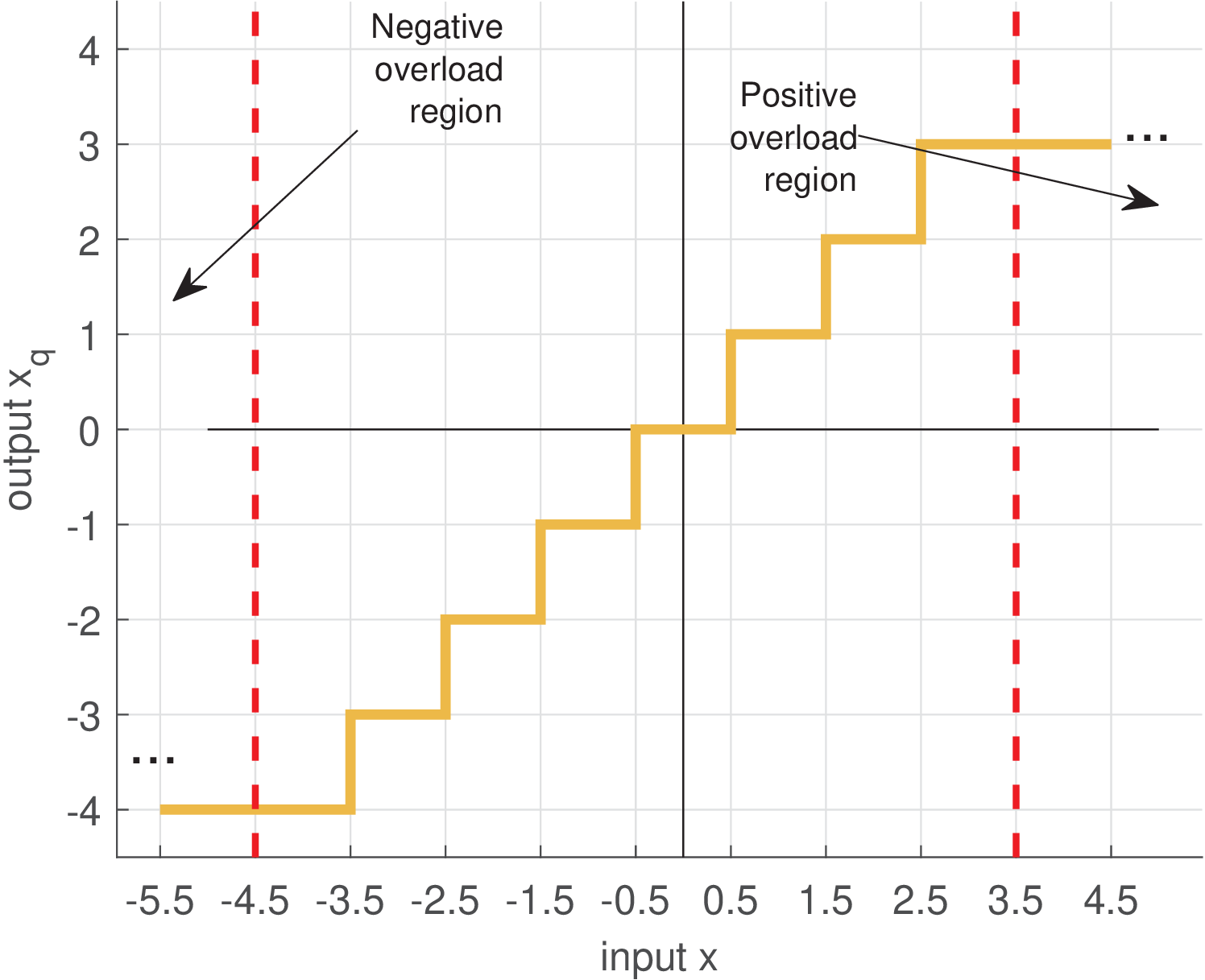

Example 1.39. Example of generic (non-uniform) quantizer. For instance, assuming the codebook is and a quantizer that uses “rounding” to the nearest output level, the input/output mapping is given by Figure 1.46.

Table 1.6 lists the input ranges and corresponding quantizer output levels for the example of Figure 1.46.

| Input range | Output level |

Given the adopted rounding, the input thresholds (in this case, , and ) indicate when the output changes, and are given by the average between two neighboring output levels. For instance, the threshold is the average between the outputs and .

The adopted convention is that the input intervals are left-closed and right-open (e. g., in Table 1.6).

In most practical quantizers, the distance between consecutive output levels is the same and the quantizer is called uniform. In contrast, the quantizer of Table 1.6 is called non-uniform.

Example 1.40. Examples of rounding and truncation. Assume the grades of an exam need to be represented by integer numbers. Using rounding and truncation, an original grade of 8.7 is quantized to 9 and 8, respectively. In this case, the quantization error is and , respectively. Assuming the original grade can be any real number , rounding can generate quantization errors in the range while truncation generates errors .

Unless otherwise stated, hereafter we will assume the quantizer implements rounding.

1.9.2 Implementation of a generic quantizer

A quantizer can be implemented as a list of if/else rules implementing a binary search. The quantizer of Table 1.6 can be implemented as in Listing 1.15.

1x=-5 %define input 2if x < -0.5 3 if x < -2.5 4 x_quantized = -4 %output if x in ]-Inf, -2.5[ 5 else 6 x_quantized = -1 %output if x in [-2.5, -0.5[ 7 end 8else 9 if x < 1.5 10 x_quantized = 0 %output if x in [-0.5, 1.5[ 11 else 12 x_quantized = 3 %output if x in [1.5, Inf[ 13 end 14end

An alternative implementation of quantization is by performing a “nearest-neighbor” search: find the output level minimum squared error between the input value and all output levels (that play the role of “neighbors” of the input value). This is illustrated in Listing 1.16.

1x=2.3 %define input 2output_levels = [-4, -1, 0, 3]; 3squared_errors = (output_levels - x).^2; 4[min_value, min_index] = min(squared_errors); 5x_quantized = output_levels(min_index) %quantizer output

1.9.3 Uniform quantization

Unless otherwise stated, this text assumes uniform quantizers with a quantization step or step size .

In this case, all the steps in the quantizer “stairs” have both height and width equal to . In contrast, note that the steps in Figure 1.46 are not equal. Uniform quantizers are very useful because they are simpler than the generic non-uniform quantizer.

A uniform quantizer is defined by the number of bits and only two other numbers: and , where is the minimum value of the quantizer output , where

|

| (1.41) |

For instance, assuming bits, and , the output levels are .

The value of is also called the least significant bit (LSB) of the ADC, because it represents the value (e. g., in volts) that corresponds to a variation of a single bit. Later, this is also emphasized in Eq. (1.47) in the context of modeling the representation of real numbers in fixed-point as a quantization process.

1.9.4 Granular and overload regions

There are three regions of operation of a quantizer: the granular and two overload (or saturation) regions. An overload region is reached when the input falls outside the quantizer output dynamic range. The granular is between the two overload regions.

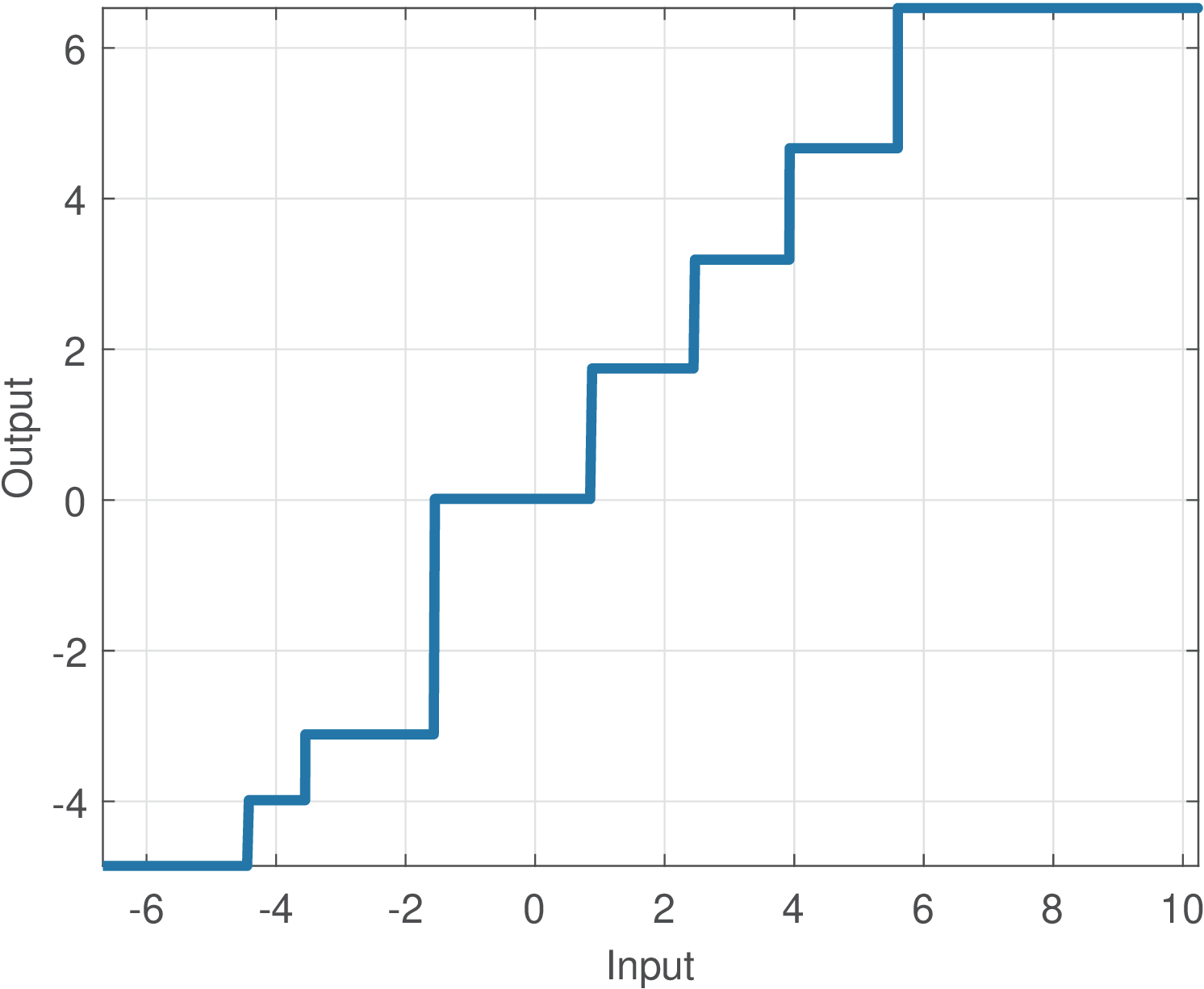

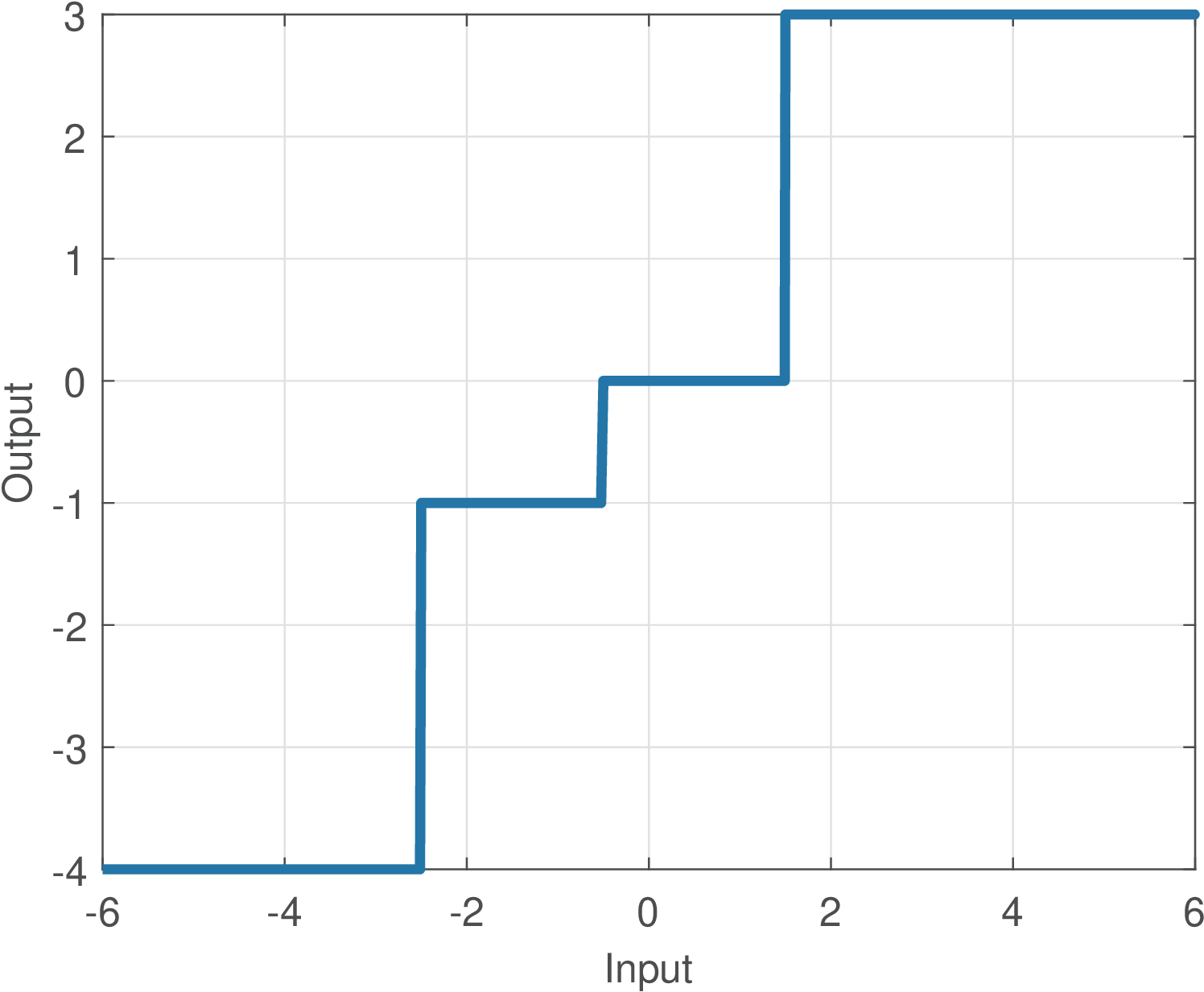

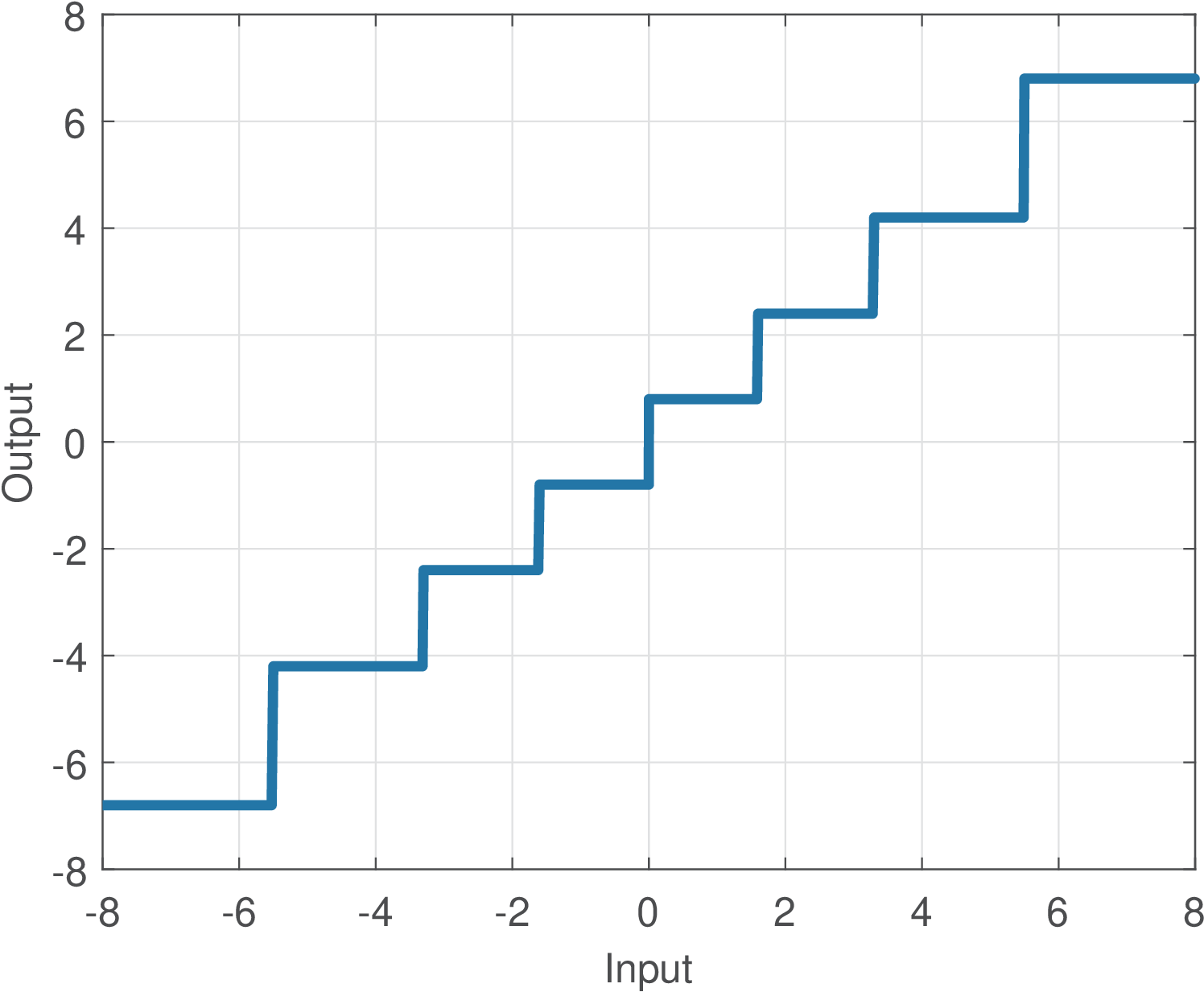

Figure 1.47 depicts the input/output relation of a 3-bits quantizer with . In Figure 1.47, because , operation in the granular region corresponds to the rounding operation round to the nearest integer. For example, round(2.4)=2 and round(2.7)=3. When , the quantization still corresponds to rounding to the nearest , but is not restricted to be an integer anymore.

Figure 1.48 depicts the input/output relation of a 3-bits quantizer with . Close inspection of Figures 1.47 and 1.48 shows that the error is in the range within the granular region, but can grow indefinitely when the input falls in one of the overload regions.

1.9.5 Design of uniform quantizers

There are several strategies for designing a uniform quantizer. Some are discussed in the following paragraphs.

Designing a uniform quantizer based on input’s statistics

Ideally, the chosen output levels in should match the statistics of the input signal . A reasonable strategy is to observe the histogram (see Figure 1.20, for an histogram example) of the quantizer input and pick reasonable values to use in . For example, if the input data follows a Gaussian distribution, the sample mean and variance can be estimated and the dynamic range assumed to be and to have the quantizer covering approximately 99% of the samples.

In case the input data has approximately a uniform distribution, the quantizer can be designed based on the dynamic range of its input , where and are the minimum and maximum amplitude values assumed by .

One should notice that outliers (a sample numerically distant from the rest of the data, typically a noisy sample) can influence too much a design strictly based on and . This suggests always checking the statistics of via a histogram.

Designing a uniform quantizer based on input’s dynamic range

Even if the input signal does not have a uniform distribution, it is sometimes convenient to adopt the suboptimal strategy of taking into account only the input dynamic range of . Among several possible strategies, a simple one is to choose and

|

| (1.42) |

In this case, the minimum quantizer output is , but the maximum value does not reach and is given by . The reason is that, as indicated in Eq. (1.41), .

To design a uniform quantizer in which , one can adopt

|

| (1.43) |

Example 1.41. Design of a uniform quantizer. For example, assume the quantizer should have bits and the input has a dynamic range given by V and V. Using Eq. (1.42) leads to V and the quantizer output levels are . Note that . Alternatively, Eq. (1.43) can be used to obtain V and the quantizer output levels would be with .

Example 1.42. Forcing the quantizer to have an output level representing “zero”. One common requirement when designing a quantizer is to reserve one quantization level to represent zero (otherwise, it would output a non-zero value even with no input signal). The levels provided by Eq. (1.43) can be refined by counting the number of levels representing negative numbers and adjusting such that . Listing 1.17 illustrates the procedure.

1Xmin=-1; Xmax=3; %adopted minimum and maximum values 2b=2; %number of bits of the quantizer 3M=2^b; %number of quantization levels 4delta=abs((Xmax-Xmin)/(M-1)); %quantization step 5QuantizerLevels=Xmin + (0:M-1)*delta %output values 6isZeroRepresented = find(QuantizerLevels==0); %is 0 there? 7if isempty(isZeroRepresented) %zero is not represented yet 8 Mneg=sum(QuantizerLevels<0); %number of negative 9 Xmin = -Mneg*delta; %update the minimum value 10 NewQuantizerLevels = Xmin + (0:M-1)*delta %new values 11end

Considering again Example 1.41, Listing 1.17 would convert the original set into , which has one level dedicated to represent zero.

Example 1.43. Designing a quantizer for a bipolar input.

Assume here a bipolar signal , for instance with peak values and ). If it can be assumed that , Eq. (1.43) simplifies to

Furthermore, one quantization level can be reserved to represent zero, while and levels represent negative and positive values, respectively. Most ADCs adopt this division of quantization levels when operating with bipolar inputs. For example, several commercial 8-bits ADCs can output signed integers from to 127, which corresponds to the integer range to . These integer values can be multiplied by the quantization step in volts to convert the quantizer output into a value in volts.

Listing 1.18 illustrates a Matlab/Octave function that implements a conventional quantizer with and saturation. The round function is invoked to obtain the integer that represents the number of quantization levels as

|

| (1.44) |

and later .

1function [x_q,x_i]=ak_quantizer(x,delta,b) 2% function [x_q,x_i]=ak_quantizer(x,delta,b) 3%This function assumes the quantizer allocates 2^(b-1) levels to 4%negative output values, one level to the "zero" and 2^(b-1)-1 to 5%positive values. See ak_quantizer2.m for more flexible allocation. 6%The output x_i will have negative and positive numbers, which 7%correspond to encoding the quantizer's output with two's complement. 8x_i = x / delta; %quantizer levels 9x_i = round(x_i); %nearest integer 10x_i(x_i > 2^(b-1) - 1) = 2^(b-1) - 1; %impose maximum 11x_i(x_i < -2^(b-1)) = -2^(b-1); %impose minimum 12x_q = x_i * delta; %quantized and decoded output

1delta=0.5; %quantization step 2b=3; %number of bits, to be used here as range -2^(b-1) to 2^(b-1)-1 3x=[-5:.01:4]; %define input dynamic range 4[xq,x_integers] = ak_quantizer(x,delta,b); %quantize 5plot(x,xq), grid %generate graph

The commands in Listing 1.19 can generate a quantizer input-output graph such as Figure 1.48 using the function ak_quantizer.m.

1.9.6 Design of optimum non-uniform quantizers

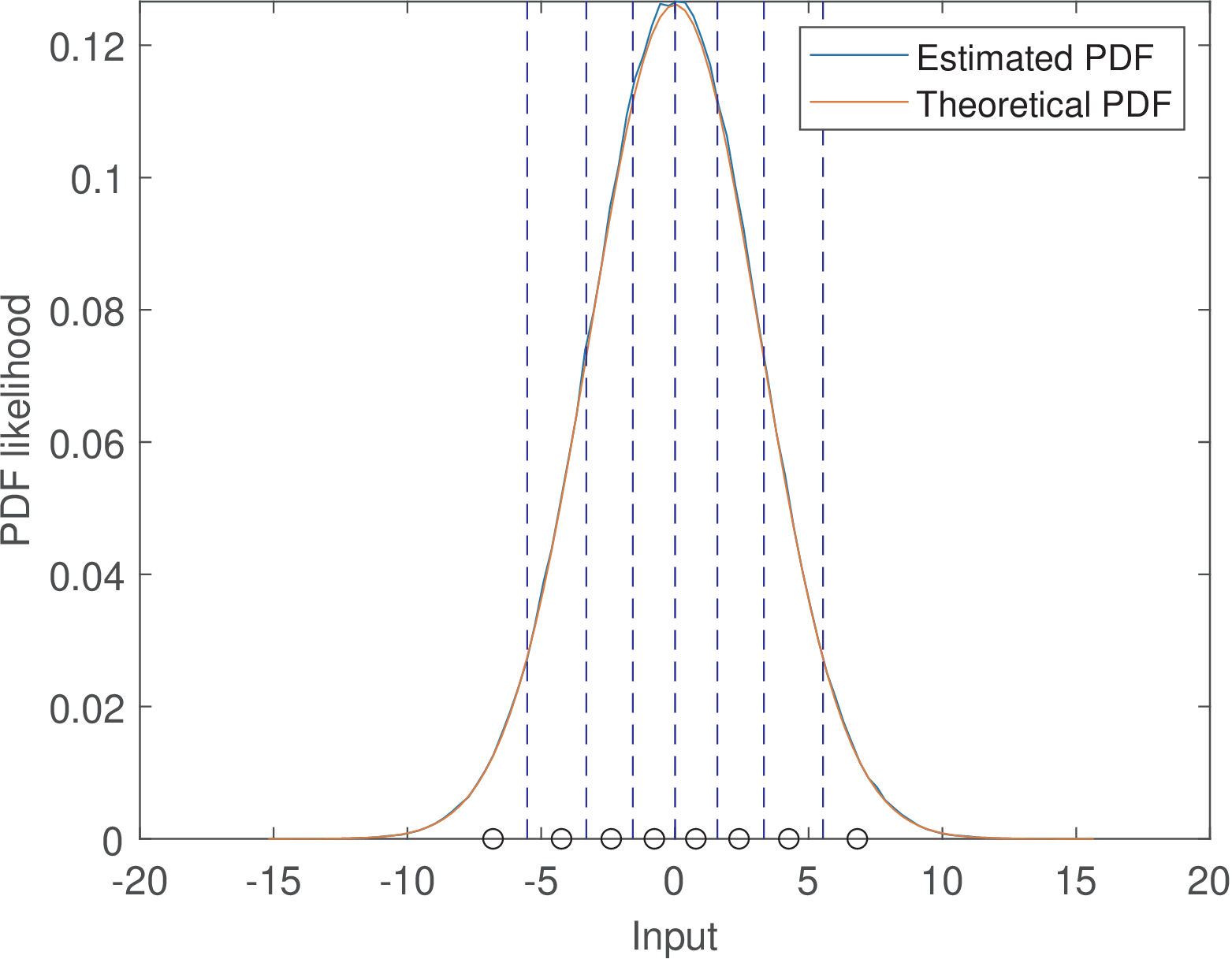

The optimum quantizer, which minimizes the quantization error, must be designed strictly according to the input signal statistics (more specifically, the probability density function of ). The uniform quantizer is the optimum only when the input distribution is uniform. For any other distribution, the Lloyd’s algorithm15 can be used to find the optimum set of output levels and the quantizer will be non-uniform. For instance, if the input is Gaussian, the optimum quantizer allocates a larger number of quantization levels around the Gaussian mean than in regions far from the mean.

Example 1.44. Optimum quantizer for a Gaussian input. This example illustrates the design of an optimum quantizer when the input has a Gaussian distribution with variance . It is assumed that the number of bits is three such that the quantizer has output levels. The following code illustrates the generation of and quantizer designer using Lloyd’s algorithm.

1clf 2N=1000000; %number of random samples 3b=3; %number of bits 4variance = 10; 5x=sqrt(variance)*randn(1,N); %Gaussian samples 6M=2^b; 7[partition,codebook] = lloyds(x,M); %design the quantizer

The obtained results are listed in Table 1.7 and Figure 1.49.

| Input range | Output level |

Note that the two intervals close to 0 have length 1.6 while the interval has a longer length . In general, the intervals corresponding to regions with less probability are longer such that more output levels can be concentrated in regions of high probability. This is illustrated in Figure 1.49.

Because Lloyd’s algorithm has a set of samples as input, this number has to be large enough such that the input distribution is properly represented. In this example, N=1000000 random samples were used.

Figure 1.49 depicts the mapping for the designed non-uniform quantizer.

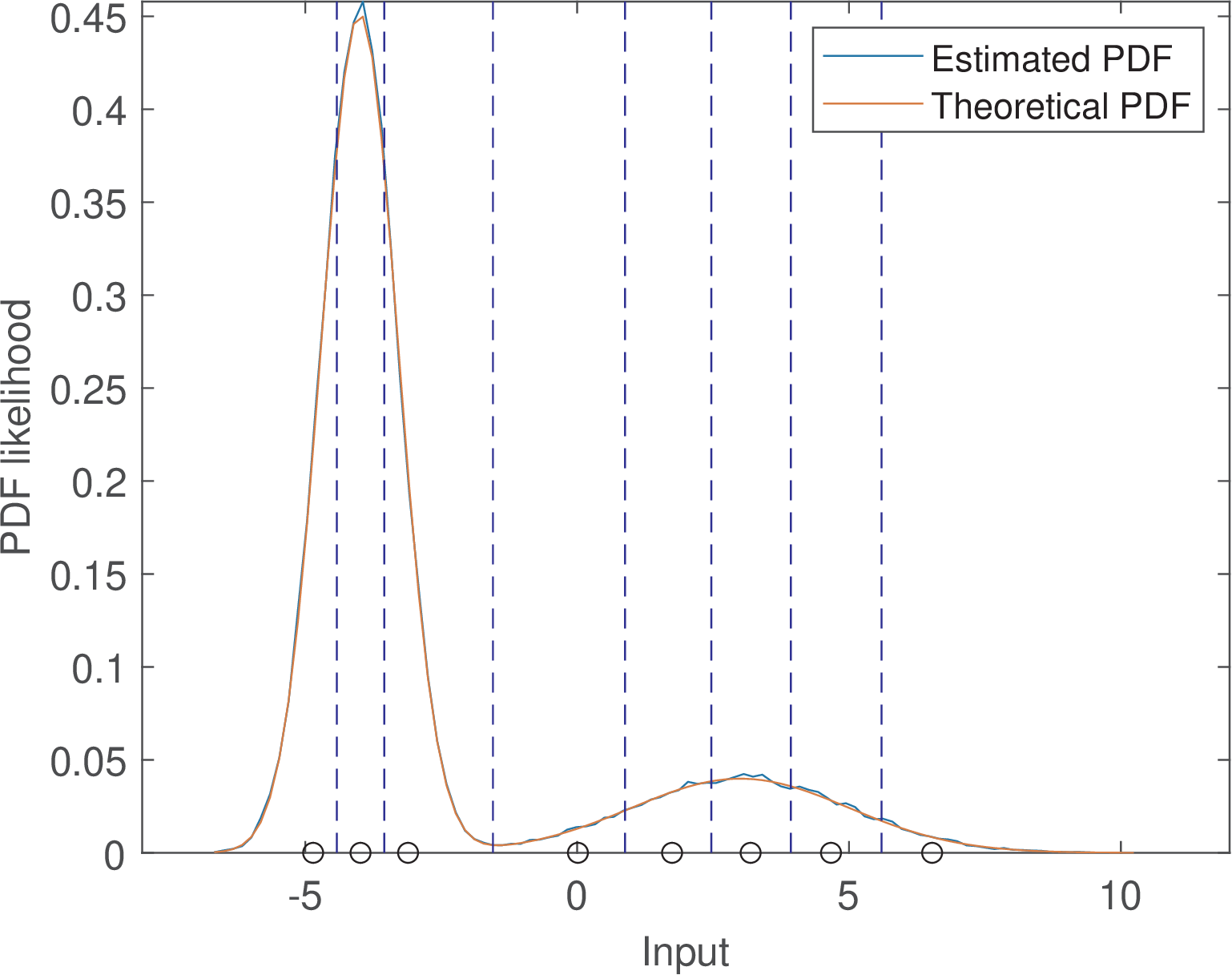

Example 1.45. Optimum quantizer for a mixture of two Gaussians. This example is similar to Example 1.44, but the input distribution here is a mixture of two Gaussians instead of a single Gaussian. More specifically, the input has a probability density function given by

|

| (1.45) |

where the notation describes a Gaussian with average and variance .

Figure 1.51 illustrates results obtained with the Lloyd’s algorithm (see script figs_signals_quantizer.m). Figure 1.51 indicates that three quantizer levels are located closer to the Gaussian with average , while the other five levels are dedicated to the Gaussian with . Because the input distribution is the mixture of Gaussians of Eq. (1.45), quantization steps are smaller around the input , and larger around . The largest step height occurs in a region close to due to the relatively low probability in this region.

1.9.7 Quantization stages: classification and decoding

Recall that the complete A/D process to obtain a digital signal to represent an analog signal can be modeled as:

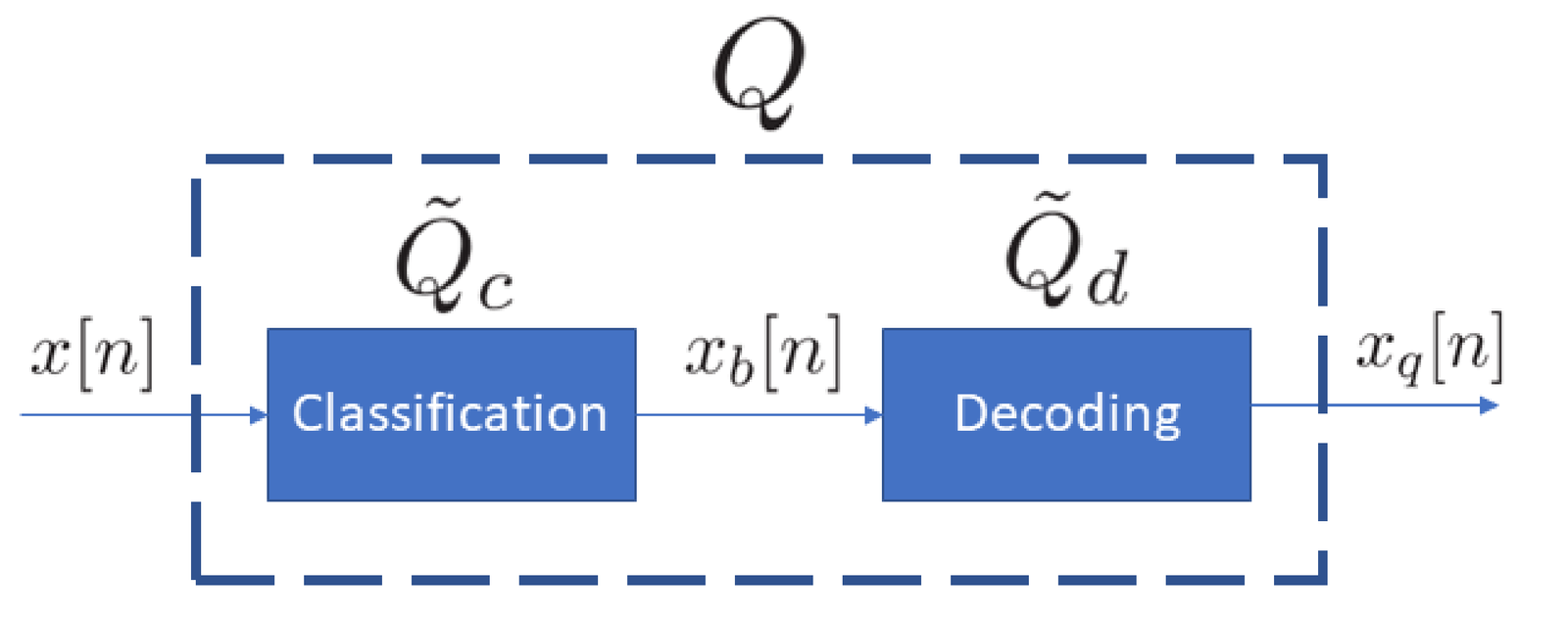

Note the convention adopted for the quantizer output: is represented by real numbers, as in Listing 1.18. This is convenient because one wants to perform signal processing with , for instance, calculating the quantization error with . However, in digital hardware, a binary representation better describes the actual output of an ADC. Hence, a more faithful representation of the job actually done by the ADC should be denoted as

where generates binary codewords, not real numbers. Hence, to enable better representing an ADC output and related variables, the quantization is broken into the two stages depicted in Figure 1.52: classification and decoding, denoted as and , respectively.

The output of the classification stage is represented by a binary codeword with bits. The decoding corresponds to obtaining a real number using . The next section discusses the decoding stage.

1.9.8 Binary numbering schemes for quantization decoding

The decoding mapping in Figure 1.52 can be arbitrary, but in most cases simple and well-known binary numbering schemes are adopted. When assuming a quantizer that uses bipolar inputs (see Eq. (1.44)), decoding often relies on a binary numbering scheme that is able of representing negative numbers. Otherwise, an unsigned numbering scheme is adopted. In both cases, the numbering scheme allows to convert into an integer , represented in the decimal system.

As the final decoding step, the integer is multiplied by the quantization step , as indicated in Example 1.43 and repeated below:

|

| (1.46) |

There are various binary numeral systems that differ specially on how negative numbers are represented. Some options are briefly discussed in the sequel.16 Table 1.8 provides an example of practical binary numbering schemes, also called codes. The first column indicates values for while the others indicate values. For example, note in Table 1.8 that corresponds to 7 in Gray code and in two’s complement.

| Binary

number | Unsigned

integer | Two’s

complement | Offset | Sign and

magnitude | Gray code

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Three of the codes exemplified in Table 1.8 will be discussed in more details because they are very important from a practical point of view: unsigned integer, offset and two’s complement output coding.

The unsigned representation, sometimes called standard-positive binary coding, represents numbers in the range . It is simple and convenient for arithmetic operations such as addition and subtraction. However, it cannot represent negative values. An alternative is the offset representation, which is capable of representing numbers in the range , as used in Listing 1.18. This binary numbering scheme can be obtained by subtracting the “offset” from the unsigned representation. The two’s complement is a popular alternative that is also capable of representing numbers in the range and allows for easier arithmetics than the offset representation, as studied in textbooks of digital electronics. Note that the offset representation can be obtained by inverting the most significant bit (MSB) of the two’s complement. For this reason it is sometimes called offset two’s complement.

Example 1.46. Negative numbers in two’s complement. To represent a negative number in two’s complement, one can first represent the absolute value in standard-positive binary coding, then invert all bits and sum 1 to the result. For example, assuming should be represented with bits, the first step would lead to , which corresponds to . Inverting these bits leads to and summing to 1 gives the final result corresponding to .

Note that two’s complement requires sign extension (see, e. g., [ url1two]) by extending the MSB. For example, if were to be represented with bits, the first step would lead to , corresponding to . After inverting the bits to obtain and summing 1, the correct codeword to represent would be (instead of ). Comparing the representation of in 16 and 8 bits, one can see that the MSB of the 8-bits representation was extended (repeated) in the first byte of the 16-bits version.

Distinguishing and may seem an abuse of notation because they are the same number described in decimal and binary numeral systems, respectively. However, the notation is useful because there are many distinct ways of mapping to and vice-versa.

Table 1.8 covers the binary numbering schemes adopted in most ADCs. These schemes can be organized within a class of number representation called fixed-point. But the representation of real numbers within computers typically use a more flexible representation belonging to another class called floating-point. The fixed and floating-point representations are discussed in the next subsection.

1.9.9 Representing numbers in fixed-point

Both fixed and floating-point representations are capable of representing real numbers. A real number can be written as the sum of an integer part and a fractional part that is less than one and non-negative.

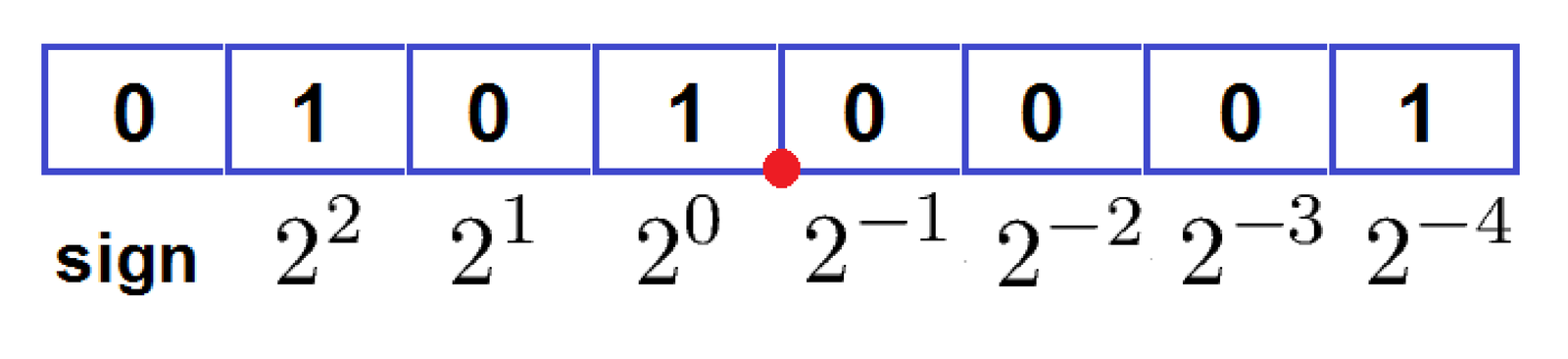

The name “fixed-point” is due to the fact that the bits available to represent a number are organized as follows:

- 1 bit representing the sign (positive or negative) of the integer part and, consequently, of the number itself

- bits representing the integer part of the number

- bits representing the fractional part of the number

such that bits. For simplicity, the sign bit is assumed here to be the MSB, but in practice that depends on the adopted standard and the machine endianness (see [ url1ofi]) for a brief explanation of big and little endian formats).

One way of thinking the “point” is that there is flexibility on choosing the power of 2 that is associated to the second bit, at the right of the MSB. After this design choice is made, a fictitious point separates the bits corresponding to the integer part from the fractional part bits. There is a convention of denoting the values chosen for and as Q..

Figure 1.53 shows an example of fixed-point representation using Q3.4, for which , and, consequently . In this case, the point is located between the fourth and fifth bits. The binary codeword is mapped to a real number according to the position of the point:

It is very common to normalize the numbers to make them fit the range . In this case can be zero, and the second bit has a weight in base-2 equals to such that the point is between the MSB and this bit. The other bit weights are then , according to their respective position. For example, the outputs of a quantizer with bits when represented in the format Q0.1 (, ) assume four possible outputs . In general, the weight of the LSB corresponds to the value of the step size, i. e.

|

| (1.47) |

Note that the number 5.0625 in Figure 1.53 can also be obtained by identifying that corresponds to and calculating . If the point were moved to the position between the MSB and second bit, which corresponds to choosing Q0.7, the quantizer would have and the same codeword would be mapped to .

In summary, when a fixed-point representation is assumed, the quantizer has step and can output numbers in the range . Algorithm 1 describes the process for representing a real number in fixed-point using Q..

For example, using Algorithm 1 to represent in Q3.4, the first step is to calculate . Then, using round leads to , which corresponds to and in two’s complement. This algorithm is the same implemented in Listing 1.18 but explicitly outputs the binary codeword .

Choosing the point position depends on the dynamic range of the numbers that will be represented and processed. This is typically done with the help of simulations and is a challenging task due to the tradeoff between range (the larger the better) and precision (the larger the better). In sophisticated algorithms, it is typically required to assume distinct positions for the point at different stages of the process. In such cases, using specialized tools such as Mathwork’s Fixed-Point Designer [ url1mfi] can significantly help.

Example 1.47. Fixed-point in Matlab. Matlab counts with the well-documented Fixed-Point Toolbox. If you have this Matlab’s toolbox, try for example doc fi. The Matlab command for representing as in the previous example is:

which outputs:

x_q = -7.437500000000000 DataTypeMode: Fixed-point: binary point scaling Signed: true WordLength: 8 FractionLength: 4 RoundMode: nearest OverflowMode: saturate ProductMode: FullPrecision MaxProductWordLength: 128 SumMode: FullPrecision MaxSumWordLength: 128 CastBeforeSum: true

Example 1.48. Quantization example in Matlab. As another example, let volts be the amplitude of at , such that , where is the value of corresponding to . The value of quantized with bits and reserving bits to the integer part is , which can be obtained in Matlab with:

1format long %to better visualize numbers 2x=2804.6542; %define x 3xq=fi(x) %quantize with 16 bits and "best-precision" fraction length

When fi is used with a single argument, it assumes a 16-bit word length and it adjusts such that the integer part of the number can be represented (called in Matlab “best-precision” fraction length). For this example, the result is:

xq = 2.804625000000000e+03 Signedness: Signed WordLength: 16 FractionLength: 3

indicating that bits are used to represent the integer part of (2084), given that . Considering one bit is reserved for the sign, then bits, which is the FractionLength.

1.9.10 IEEE 754 floating-point standard

Choosing the point position provides a degree of freedom for trading off range and precision in a fixed-point representation. However, there are situations in which a software needs to deal simultaneously with small and large numbers, and having distinct positions for the point all over the software is not practical or efficient. For example, if distances in both nanometers and kilometers should be manipulated with reasonable precision, the dynamic range of would require bits for the integer part or keeping track of two distinct point positions. Hence, in many cases, a floating-point representation is more adequate.

A number in floating point is represented as in scientific notation:

|

| (1.48) |

For example, assuming the base is 10 for simplicity, the number is interpreted as , where 1.234567 is the significand and 2 the exponent. While in fixed-point the bits available to represent a signed number are split between and , in floating point the bits are split between the significand and exponent.

The key aspect of Eq. (1.48) is that, as the value of the exponent changes, the “point” has new positions and allows to play a different trade off between range and precision: the values a floating point can assume are not uniformly spaced. While fixed-point numbers are uniformly spaced by , which then imposes the maximum error within the range, the error magnitude in a floating point representation depends on the value of the exponent. For a given error in the significand representation, the larger the exponent, the larger the absolute error of with respect to the number it represents. This is a desired feature in many applications and floating point is used for example in all personal computers. The fixed-point niche are embedded systems, where power consumption and cost have more stringent requirements.

The IEEE 754 standard for representing numbers and performing arithmetics in floating point is adopted in almost all computing platforms. Among other features,17 it provides support to single and double precision, which are typically called “float” and “double”, respectively, in programming languages such as C and Java.

The wide adoption of IEEE 754 is convenient because a binary file written with a given language in a given platform can be easily read with a distinct language in another platform. In this case, the only concern is to make sure the endianness is the same ([ url1ofi]). Two examples discussing numerical errors and quantization in practical scenarios are presented in Appendix B.12.

1.9.11 Quantization examples

Some quantization examples are provided in the following paragraphs.

Example 1.49. ECG quantization. An ECG signal was obtained with a digital Holter that used a uniform quantizer with 8 bits represented in two’s complement and quantization step mV. Hence, when represented as decimal numbers, the quantized outputs vary from to 127. Assuming three quantizer input samples as , and , all in mV, the quantized values are given by , which lead to , and . The corresponding binary codewords are , and . Using Eq. (1.46), the quantizer outputs are , and mV, and the quantization error is , and mV.

Example 1.50. Quantization using 16 bits. Assume the quantizer of an ADC with and bits is used to quantize the discrete-time signal at time . The input amplitude is and the quantizer output is . It is also assumed that the classification quantizer stage outputs in two’s complement, which in decimal is . The decoding then generates

using Eq. (1.46).

Example 1.51. The quantization step size may be eventually ignored. As mentioned, the quantization step or step size indicates the variation (e.g., in volts) between two neighbor output values of a uniform quantizer.

In many stages of a digital signal processing pipeline of algorithms, the value of is not important. For example, speech recognition is often performed on samples represented as integers instead of . In such cases, it is convenient to implicitly assume .

For example, considering Example 1.50 and an original sample value in analog domain volts, this sample may be represented and processed in digital domain as . This corresponds to an implicit assumption that and , instead of minding to adopt the actual of Example 1.50.

If at some point of the DSP pipeline, it becomes necessary to relate and , such as when the average power in both domains should match, the scaling factor is then incorporated in the calculation. But many signal processing stages can use instead of .

Example 1.52. The step size of the ADC quantizer is often unavailable. When the quantization is performed in the digital-domain, such as when representing a real number in fixed-point, the value of the step is readily available. However, the value of the step in the quantization process that takes place within an ADC chip is often not available.

For example, as explained in Application 1.1, the Matlab/Octave function’s readwav returns (when the option ’r’ is used in Matlab) or a scaled version of , where the scaling factor is not but . The value of that the sound board’s ADC used to quantize the analog values obtained via a microphone for example, is often not registered in audio files (e. g., ‘wav’), which store only the samples and other information such as . Application 1.5 discusses more about in commercial sound boards.

File formats developed for other applications may include the value of in their headers. For example, Application 2.3 uses an ECG file that stores the information that mV. The analog signal power can then be estimated as 3.07 mW by normalizing the digital samples with .

If the correct value of is not informed, it is still possible to relate the analog and digital signals in case there is information about the analog signal dynamic range.

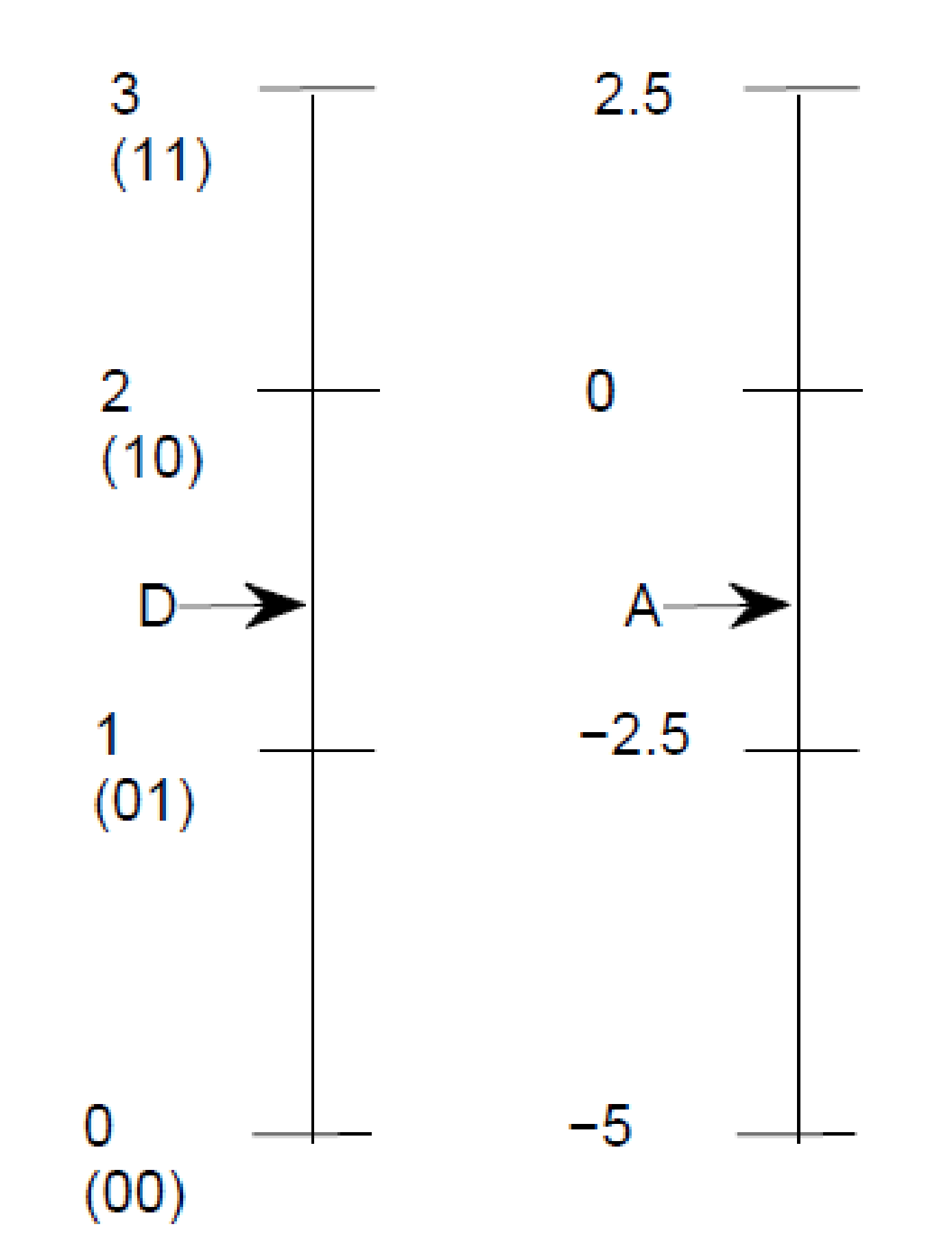

As an example of using proportion to relate and , assume a 2-bits ADC that adopts unsigned integers as indicated in Table 1.8. The task is to relate the integer codewords (corresponding to 00, 01, 10 and 11, respectively) to values, which can represent volts, amperes, etc. It is assumed that the original is given in volts and continuously varies in the range . The relation between and can be found by proportion as:

which gives

and, consequently, an estimate of V. In this case, the ADC associates the code 00 to V, 01 to V, 10 to V and 11 to V, as illustrated in Figure 1.54.