2.10 Applications

This section will briefly discuss some applications of transforms.

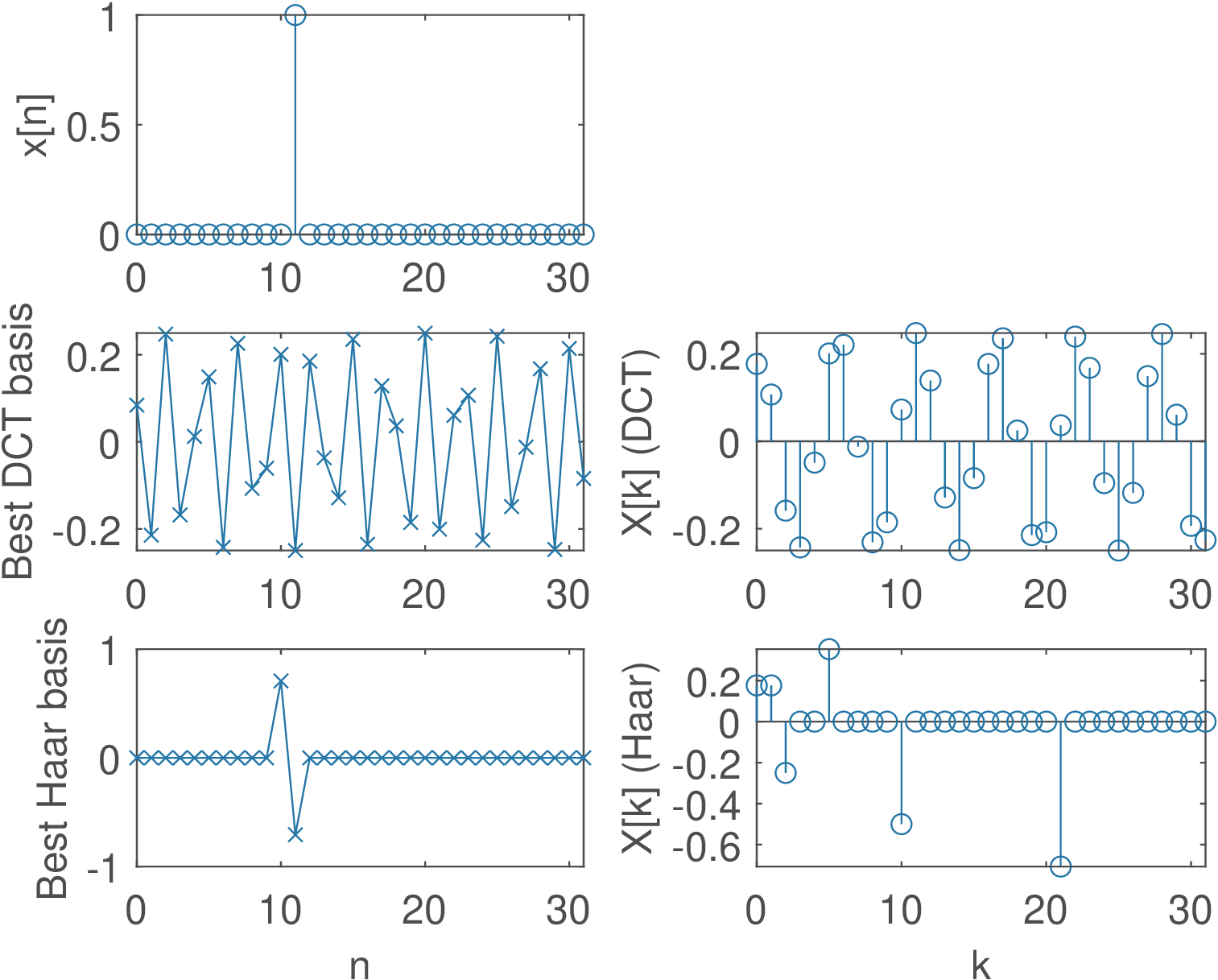

Application 2.1. Time-localization property of Haar coefficients.

A very simple experiment will help illustrating the time-localization property of Haar functions. Consider the signal to be analyzed in the transform domain is a single impulse , which will be represented by a vector will zeroed elements but the one corresponding to the position. The script MatlabOctaveBookExamples/ex_transforms_dcthaar_example.m can be used to observe the behavior of the transforms. Figure 2.33 shows the output of the script when the signal is . In this case, many Haar coefficients are zero and the one with largest magnitude () has a corresponding basis function (bottom of the left column) that helps to localize the occurrence of the impulse in . In contrast, most DCT coefficients have relatively large values and the basis functions do not help in time-localization. The mentioned script allows to investigate this aspect more deeply. Compare the results of DCT and Haar transforms when the signal is a cosine summed to an impulse.

Application 2.2. Inverse Laplace transforms with Matlab’s Symbolic Math Toolbox. Keeping in mind that Matlab adopts the unilateral Laplace transform, its Symbolic Math Toolbox can be used to calculate Laplace transforms and their inverses. For example, the unilateral Laplace transform of can be obtained with the commands syms t; laplace(t / t). Similarly, the commands syms w0,t; laplace(sin(w0*t)) indicate that for . The following commands illustrate the inverse transform of given by Eq. (2.54):

1syms s %defines s as a symbolic variable 2a=1; b=-2; c=-1+j*2; %choose poles and zeros 3X=(s-a)/((s-b)*(s-c)*(s-conj(c))); %define X(s) 4ilaplace(X) %Matlab's inverse unilateral Laplace transform

The command pretty (e. g., pretty(ilaplace(X))) can be used to beautify the output, which in this case is - 3/5 exp(-2 t) + 1/5 exp(-t) (3 cos(2 t) + sin(2 t)). Find the inverse Laplace transform for other signals, such as .

Application 2.3. ECG transform coding. Because a block transform is completely specified by an invertible matrix , going from one domain to another ( to or vice-versa) is loss-less, which means there is no loss of information. In many applications, such as image coding, where the goal is to minimize the number of bits to represent an image, it is useful to compress the signal using a lossy algorithm. Transform coding can be a lossy algorithm when it discards or quantizes coefficients in the transform domain. This is effective in many applications because, while all samples in have the same “importance”, in the transform domain the coefficients can be organized according to some hierarchy or rank. In transform coding the most “important” coefficients are quantized more carefully than the unimportant ones.

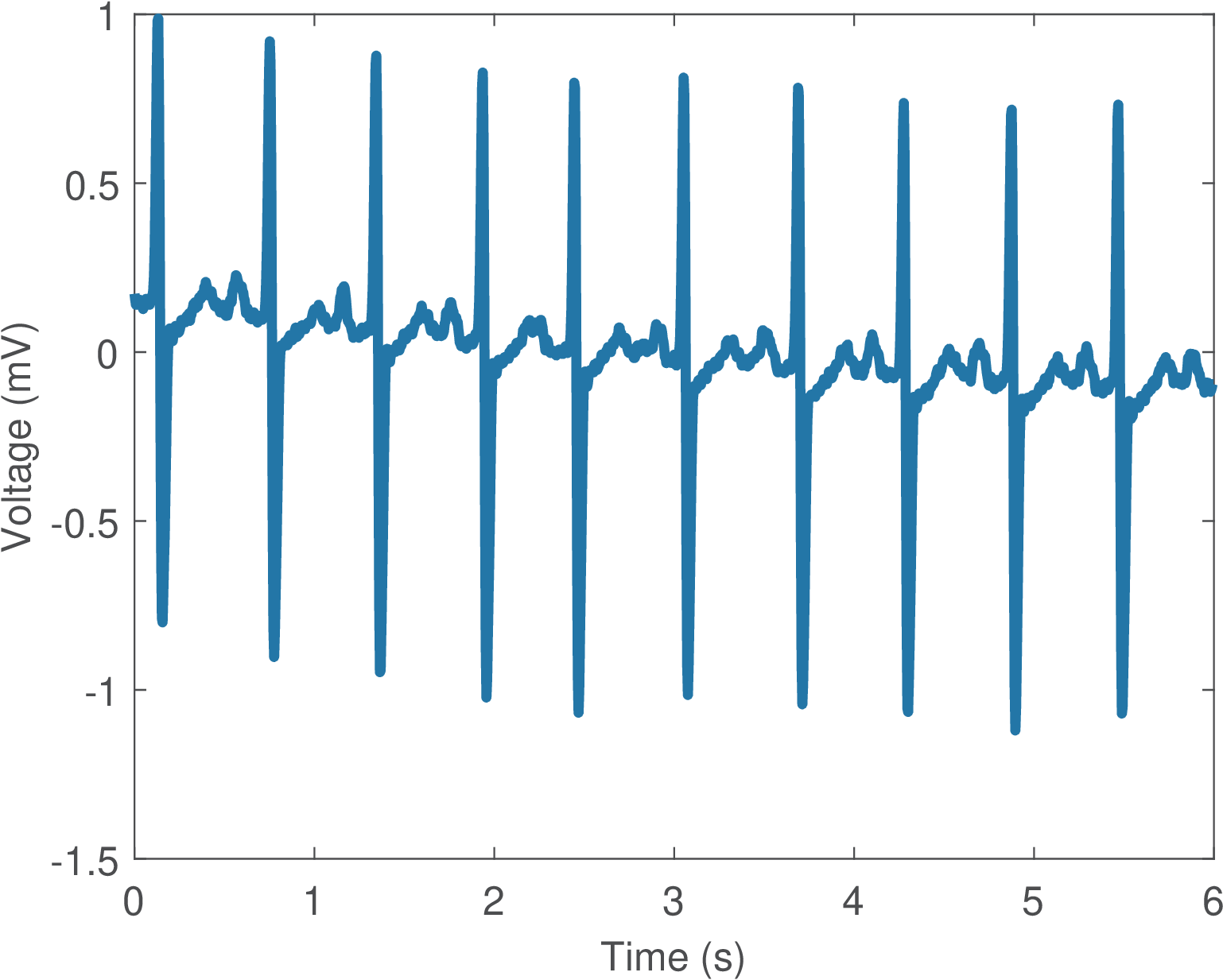

Figure 2.34 depicts a segment (first 1,500 samples) of an ECG signal. The ECG data used here is from the MIT-BIH Arrhythmia Database.18, and Figure 2.34 corresponds to the first channel of file 12531_04.dat. The function ak_rddata.m indicates that the DC offset of the ADC chip was calibrated to be zero. It also indicates that the ECG signals were digitized with a sampling frequency Hz, 12 bits per sample, and a quantizer with step mV. The (empirical) signal power is 3.07 mW and, following the conventional quantization model, Eq. (1.20) suggests the quantization noise power was mW. Hence, the SNR corresponding to the quantization stage alone is approximately 97.7 dB.

A very simple example of a transform coding system is provided as Matlab/Octave code in the companion software (in directory Applications/ECGTransformCoding). The idea is to simply discard the higher-order coefficients in the transform domain. For example, assume that is the dimension of and , which are related by a transform matrix . In the encoding stage, a discrete-time input signal is segmented into blocks of dimension , composing a set of input vectors , where is the available number of samples of . The coding scheme converts each into and keeps in a new vector only the first elements of (the remaining are discarded). The vectors can be concatenated to create an encoded output signal with samples.

In the decoding stage, in order to reconstruct a signal from (if there were no losses, ), an inverse procedure is adopted. The signal is blocked into vectors of dimension and zero elements are inserted to create vectors of dimension (this operation is called zero-padding). Each of these -dimensional vectors is converted to time-domain vectors , which are then concatenated to form .

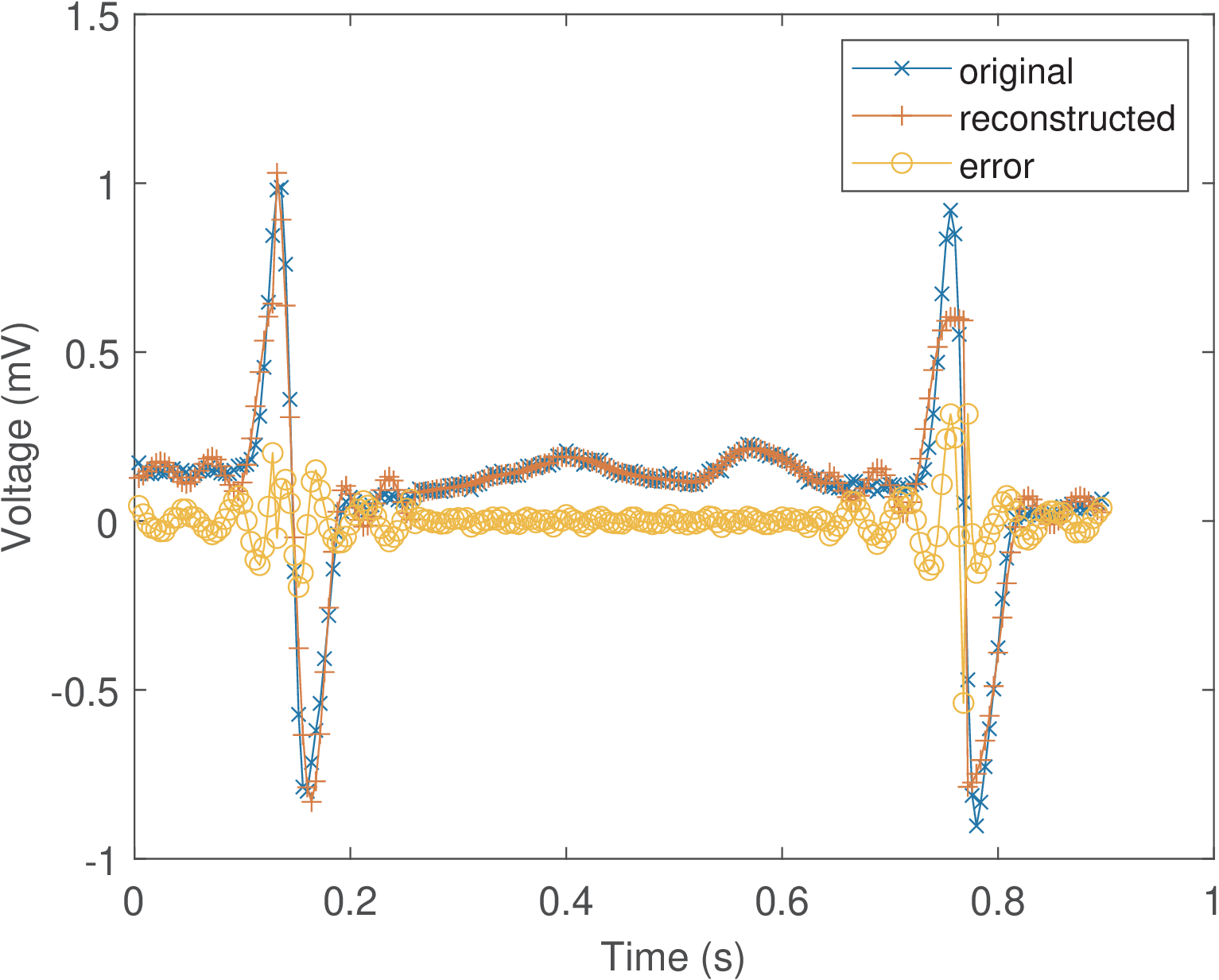

Figure 2.35 was obtained using a DCT matrix of points and discarding 26 (high-frequency) coefficients. It can be seen that keeping only out of 32 coefficients is enough to provide a rough representation of but the error is significant in high-frequency regions. Note that the DCT operation is performed in blocks of samples and Figure 2.35 is the result of processing many of these blocks.

In practice, a quantization scheme should be adopted to represent with a small number of bits per sample. The compression ratio is the number of bits to represent divided by the number of bits to represent . For simplicity, the proposed example does not involve quantization and the compression ratio is evaluated by . This corresponds to assuming that each sample of both and is represented with the same number of bits.

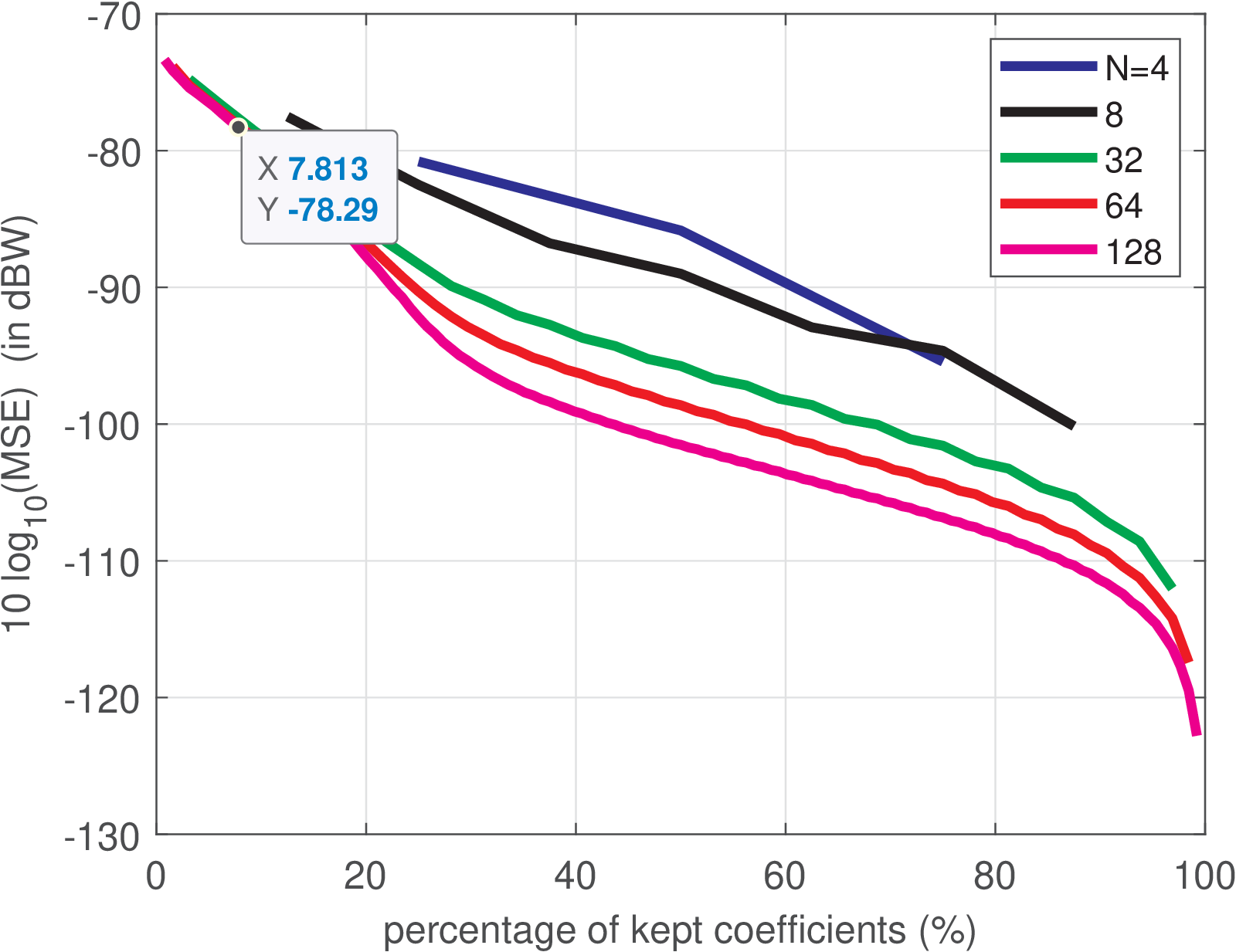

Figure 2.36 shows the percentage of kept coefficients in the abscissa and in the ordinate, where

is the mean-squared error, which is equivalent to the power of the error signal. If (100% in the abscissa) the system is lossless and ( in log scale), so the graphs do not show these points. The larger , the better the coding performance but the higher the computational cost.

Assuming and as indicated in Figure 2.36, the abscissa is % and the corresponding error is W ( dBW) or, equivalently, dBm. Given that the signal power was estimated as 3.07 mW ( dBm), the dB when considering only the distortion incurred by this specific encoding procedure.

It should be noted that the dataset used is formed by abnormal ECG signals and, eventually, better results could be obtained when using another dataset.

Write an encoder that uses DCT coding to generate files of a relatively small size and a decoder to “decompress” such files and recover an ECG signal (an approximation to the original one). To do that, it is necessary to quantize the DCT coefficients and pack them in a way that the resulting file has a small size. Consider using scalar or vector quantization. The advantage of vector quantization is that you need to store only the index to the codebook entry (codeword) with a vector that will represent the DCT. In case you use a codebook with codewords, each entry can be conveniently written as an unsigned char of 8 bits. To design the codebook, besides the Mathwork’s code in the DSP System Toolbox, there are many algorithms such as the K-means with implementations available on the Web.

Another interesting exercise is to apply KLT and other transforms to this problem, trying to get better results than with the DCT.

Application 2.4. DCT coding of image.

The usefulness of DCT in coding is illustrated by the JPEG image coding standard.19 Before describing an example, two dimensional transforms are briefly discussed.

Linearly-separable two dimensional (2-D) transforms of a block (e. g., a matrix with pixel values) is obtained by first using the transform along the rows (or columns) and then transforming this result along the columns (or rows). Alternatively, one can use matrix notation: Because, is real for a DCT, one has

This is equivalent to first calculating : the 1-D DCT transform of each column of is placed in its corresponding column in a temporary matrix . Then, each row of is transformed by another 1-D DCT, which could be accomplished by . This result should be transposed to generate . These operations are equivalent to:

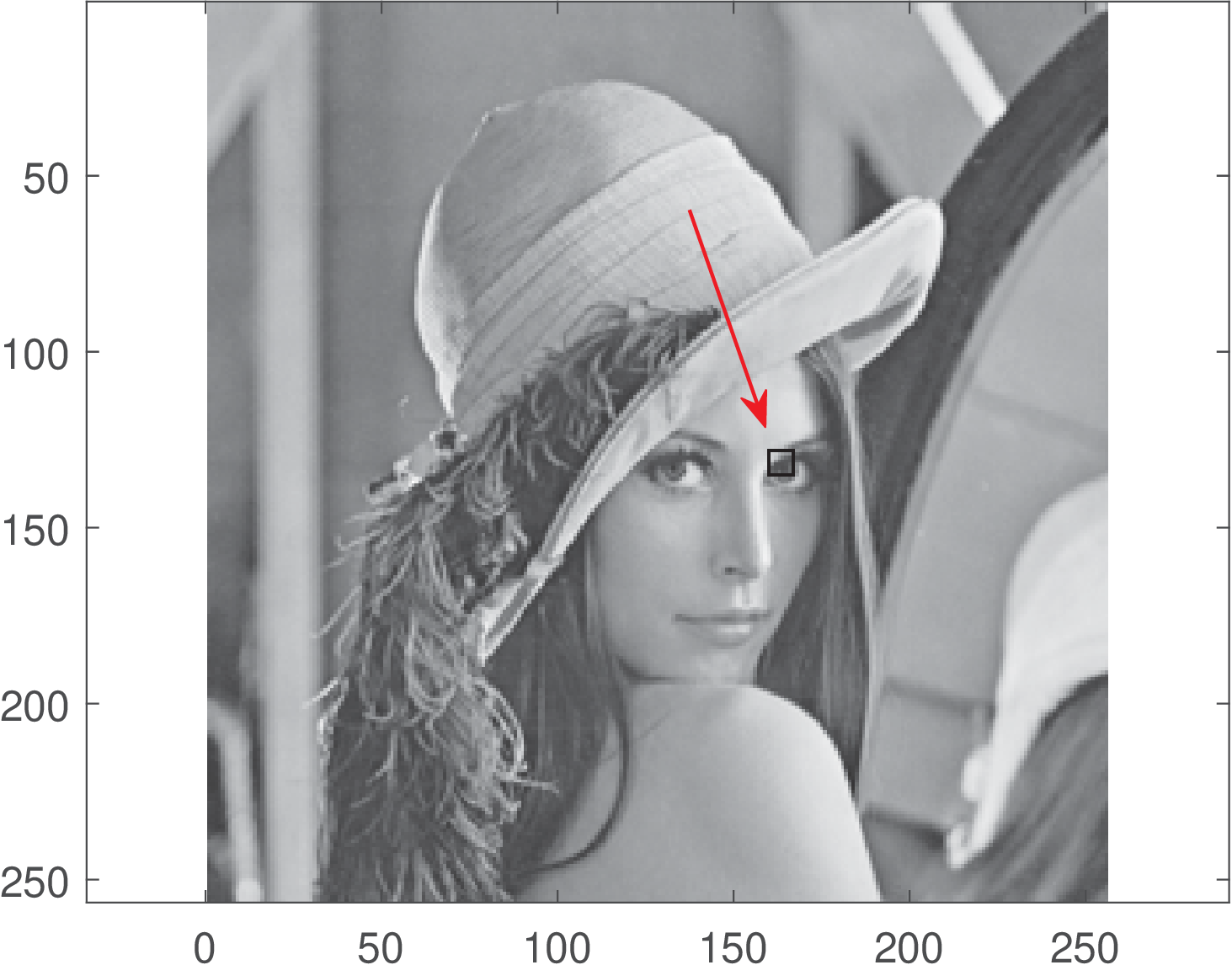

Listing 2.5 illustrates the adoption of a 2-D DCT for coding an image block (represented by a matrix).

1%load black & white 256 x 256 pixels of Lenna: 2fullPath='lenna_bw.gif' %file location 3 [lenaImage,map]=imread(fullPath); %read file 4colormap(map); %use the proper color map 5x1=128; x2=135; y1=160; y2=167; %to take a 8x8 block close to her eye 6x=lenaImage(x1:x2,y1:y2); %get the region 7x=double(x); %cast x to be double instead of integer 8%draw a rectangle to indicate the block: 9lenaImage(x1,y1:y2)=1; lenaImage(x2,y1:y2)=1; 10lenaImage(x1:x2,y1)=1; lenaImage(x1:x2,y2)=1; 11imagesc(lenaImage) %show the image 12axis equal %correct the aspect ratio 13%Some calculations to practice: 14X=octave_dct2(x); %calculate forward DCT 15x2=octave_idct2(X); %calculate inverse DCT to check 16numericError = max(x(:)-x2(:)) %any numeric error? 17Ah=octave_dctmtx(8); %now calculate in an alternative way 18X2 = Ah*x*transpose(Ah); 19%X2 = transpose(Ah)*x*Ah; %Note: this would be wrong! 20numericError2 = max(X(:)-X2(:)) %any numeric error?

Similar to the 1-D ECG coding example in Application 2.3, few DCT coefficients can be enough to represent an image. Study the code MatlabOctaveFunctions/ak_dctdemo.m using DCT to represent different images,20 including ones with text. Even better results can be obtained with the wavelet transform, which is used in the JPEG 2000 image coding standard. Write an encoder and decoder that use a transform to compress and decompress an image file.

Application 2.5. Orthogonality of continuous-time sinusoids over a given interval. In applications such as frequency modulation, it is important to know the conditions in which two cosines with frequencies and and phase , are orthogonal over a given interval of interest. Mathematically, this requirement can be expressed as

and, because the phase is the same for both cosines, this requirement is called “coherent orthogonality”. It can be shown21 that this condition is satisfied with integers and such that

For example, assume that seconds, and . In this case, and are 1650 and 1850 Hz, respectively. Note that the minimum value of is Hz in this case, which corresponds to choosing .

As a side note, for “noncoherent orthogonality”, the minimum frequency separation22 is .

Application 2.6. Orthogonality of finite duration discrete-time sinusoids. It is also important to evaluate in what conditions a pair of discrete-time sinusoids are orthogonal considering a finite duration interval.

Two discrete-time exponentials and , , are always orthogonal when summed over a range to (see Example 2.1), but this is not the case when the summation interval is finite. One way of inspecting what happens when the duration is finite is to reason as following: 1) keep in mind that the sum of the samples of a single sinusoid over a multiple of its fundamental period is zero. 2) Interpreting Example 2.3 in discrete-time allows to decompose the inner product for testing orthogonality into a sum of two sinusoids. 3) the inner product is mapped to summations of sinusoids with frequencies equal to and and the goal is to find an integer that is a multiple of both fundamental periods.

One application of this orthogonality principle is the design of a binary transmission, where the two signals are distinguished by their frequencies and . This scheme is called frequency shift keying (FSK). We are interested on determining the minimum symbol period that guarantees orthogonality between the two sinusoids. In the context of telecommunications, this minimum period will correspond to the highest symbol rate. The script MatlabOctaveBookExamples/ex_transforms_check_orthogonality.m can be used for studying this question and is repeated below. The sampling frequency is and one should check different pairs of and .

1if 1 %choose between two options 2 f0 = 980; %frequency of first sinusoid (in telecom, of bit 0) 3 f1 = 1180; %frequency of 2nd sinusoid (in telecom, of bit 1) 4else %another example 5 f0 = 1650; %1st sinusoid 6 f1 = 1850; %2nd sinusoid 7end 8Fs=9600; %assumed sampling frequency 9sumSinusoidFrequency = f0+f1; 10subractSinusoidFrequency = f1-f0; 11%reduce numbers to rational forms 12[m,n]=rat(sumSinusoidFrequency/Fs) 13[p,q]=rat(subractSinusoidFrequency/Fs) 14Nmin = lcm(n,q) %find the least commom multiple 15disp(['Minimum common period: ' num2str(Nmin)]) 16T_duration = Nmin / Fs; %minimum number of samples in seconds 17%disp(['Maximum symbol rate: ' num2str(1/T_symbolDuration) ' bauds']) 18%% Check if the product of the two sinusoids is really orthogonal 19Tsum = 1 / sumSinusoidFrequency; 20Tsub = 1 / subractSinusoidFrequency; 21disp('Numbers below should be integers:') 22T_duration/Tsum 23T_duration/Tsub 24Ts=1/Fs; %sampling interval 25t = 0:Ts:T_duration-Ts; %generate (discrete) time 26%use sin or cos with arbitrary phase 27b0 = sin(2*pi*f0*t+4)'; %column vector 28b1 = sin(2*pi*f1*t)'; %column vector 29normalizedInnerProduct = sum(b0.*b1) / length(b0) %inner product 30if (normalizedInnerProduct > 1e-13) 31 error('not orthogonal! should not happen!'); 32end

Modify Listing 2.6 to use discrete-time sinusoids in radians, not Hz. Assume and find a set of sinusoids that are mutually orthogonal over an interval of eight consecutive samples. What was the maximum value of that you could find? Now, consider the set of basis functions for a -point DFT and evaluate their orthogonality. Going back to the question about , can it be larger than ? Why?

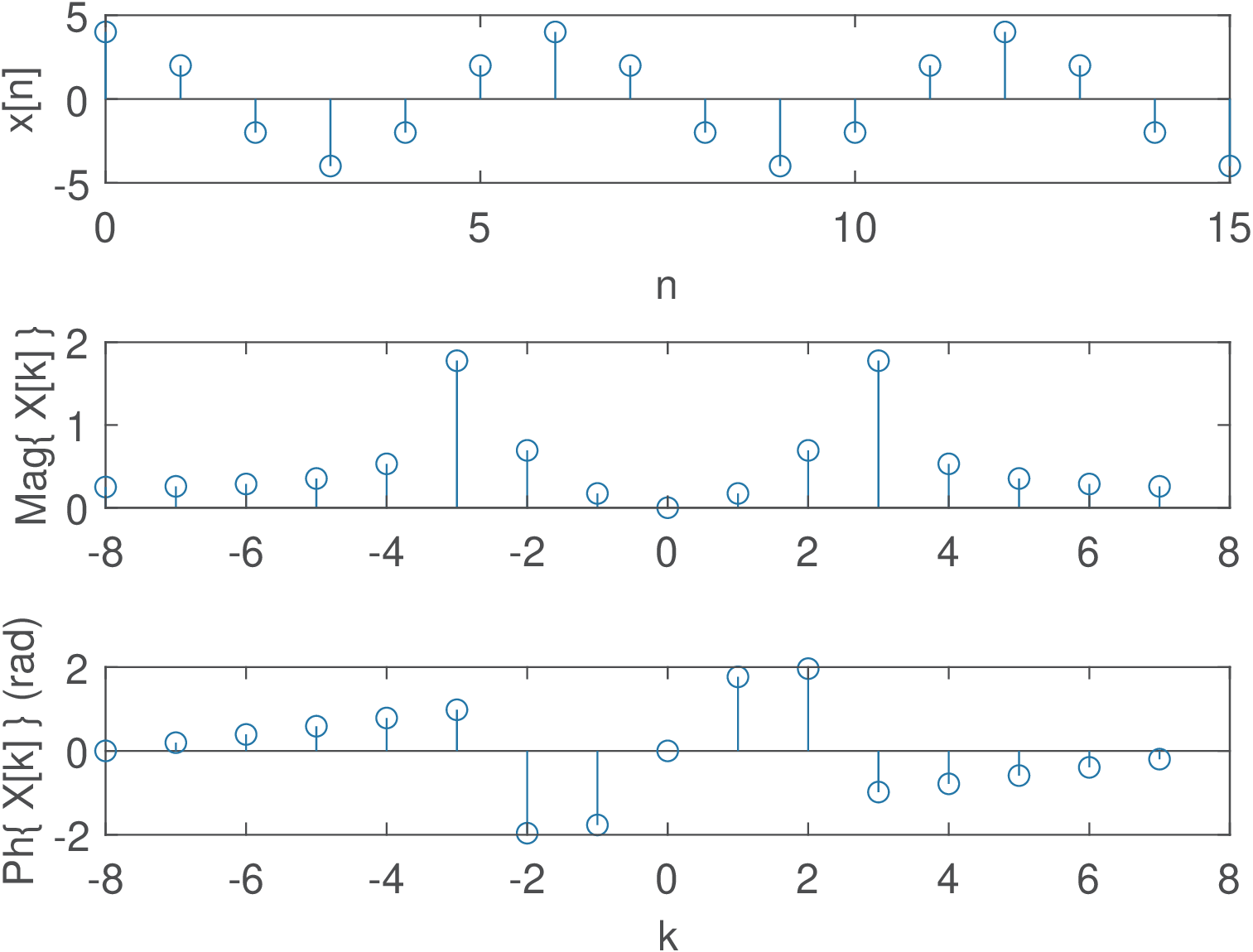

Application 2.7. Better visualizing the FFT spectrum: Using fftshift to reorganize the spectrum and masking numerical errors. Two improvements of the procedure adopted to obtain Figure 2.14 are typically used. The first one is with respect to the range . Figure 2.14 reminds that the definition of an -point DFT assumes . Sometimes it is more convenient to work with ranges that use “negative” frequencies. Typical ranges are when is even and when is odd. In these cases, to represent negative frequencies (or angles in discrete-time processing) the command fftshift can be used. For example, fftshift([0 1 2 3]) returns [2 3 0 1], while fftshift([0 1 2 3 4]) returns [3 4 0 1 2].

The second one aims at eliminating spurious values. Note that, due to numerical errors, the elements of X that should be zero, have small values such as -1.4e-15 - j 3.3e-16, which leads to random angles for in Figure 2.14. It is a good practice to eliminate these random angles based on a threshold for the magnitude. This post-processing step can be done with a command such as X(abs(X) 1e-12)=0, and is adopted in the Matlab/Octave fft routine.

Figure 2.38 benefits from these two improvements and was obtained using Listing 2.7.

1k=-N/2:N/2-1; %range with negative k (assume N is even) 2X(abs(X)<1e-12)=0;%discard small values (numerical errors) 3X=fftshift(X); %rearrange to represent negative freqs. 4subplot(211);stem(k,abs(X));subplot(212);stem(k,angle(X));

Note that fftshift does not calculate the FFT but simply reorders the elements in a vector.

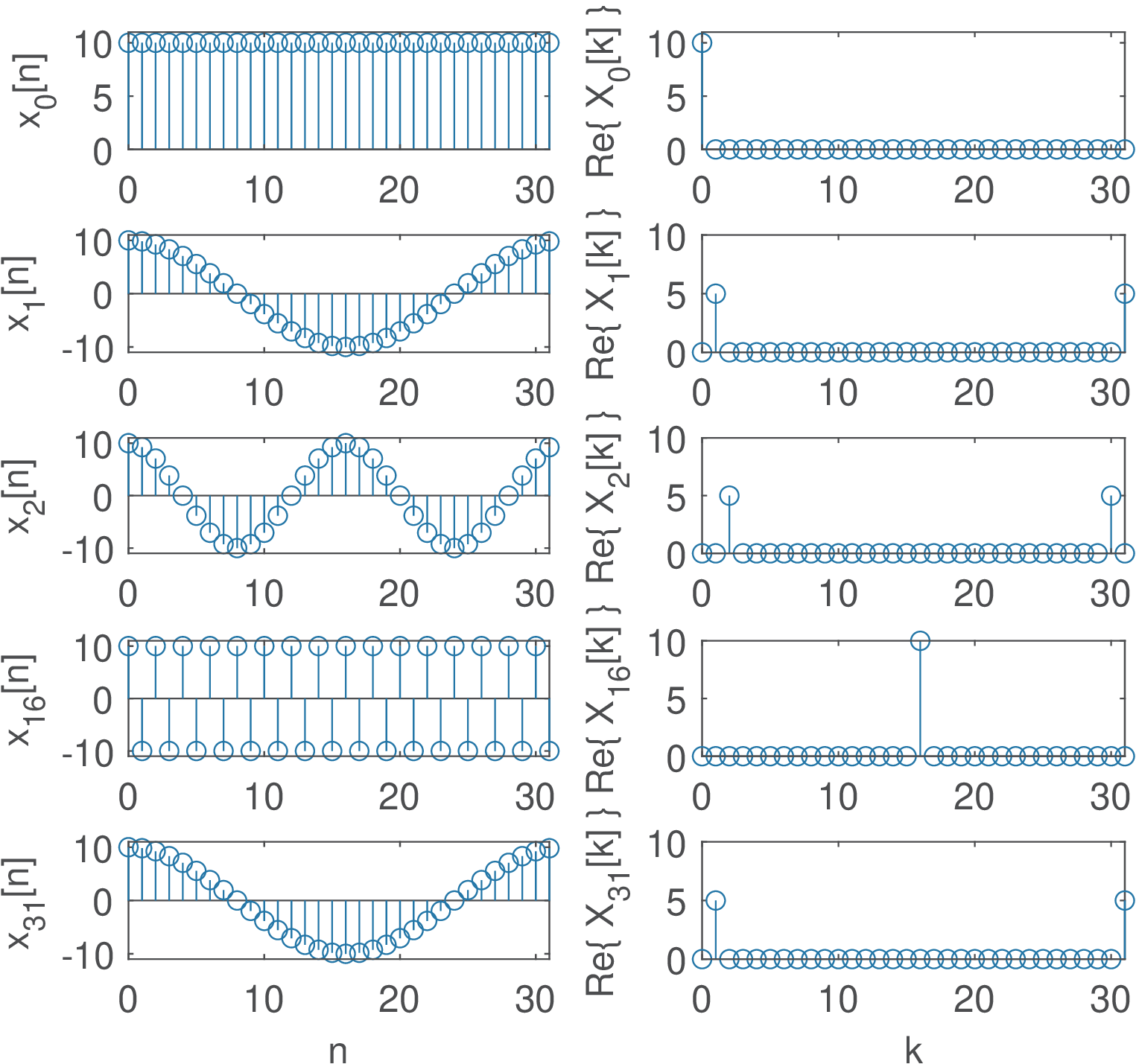

Application 2.8. DTFS analysis of single cosines. Figure 2.39 depicts five cosines with angular frequencies rad, where , and their respective DTFS spectrum calculated with a DFT of points and normalized by .

The first is a DC signal (), the second is , where is the fundamental frequency in this DTFS analysis (given that ) and the other three signals are cosines with the following harmonic frequencies: , and rad. Because the cosines are even functions, the imaginary part of their DTFS is zero.

Note in Figure 2.39 that the frequency increases up to the -th harmonic . When is even, the coefficient of corresponds to . It is important to note that the highest “frequency” of a discrete-time signal is always rad.

Another aspect of Figure 2.39 is that for and , corresponding to the angles and , the spectra have only one non-zero coefficient , while the other three signals are represented by two coefficients. Figure 2.7, for and can be invoked to help interpreting why the coefficients for angles different than and occur in pairs with Hermitian symmetry (i. e., when is real). The basis functions for and are real signals while the other angles lead to complex-valued signals. In the analysis of a real signal , using the -th complex basis requires also using the -th basis, such that their imaginary parts can cancel in the synthesis of a real .

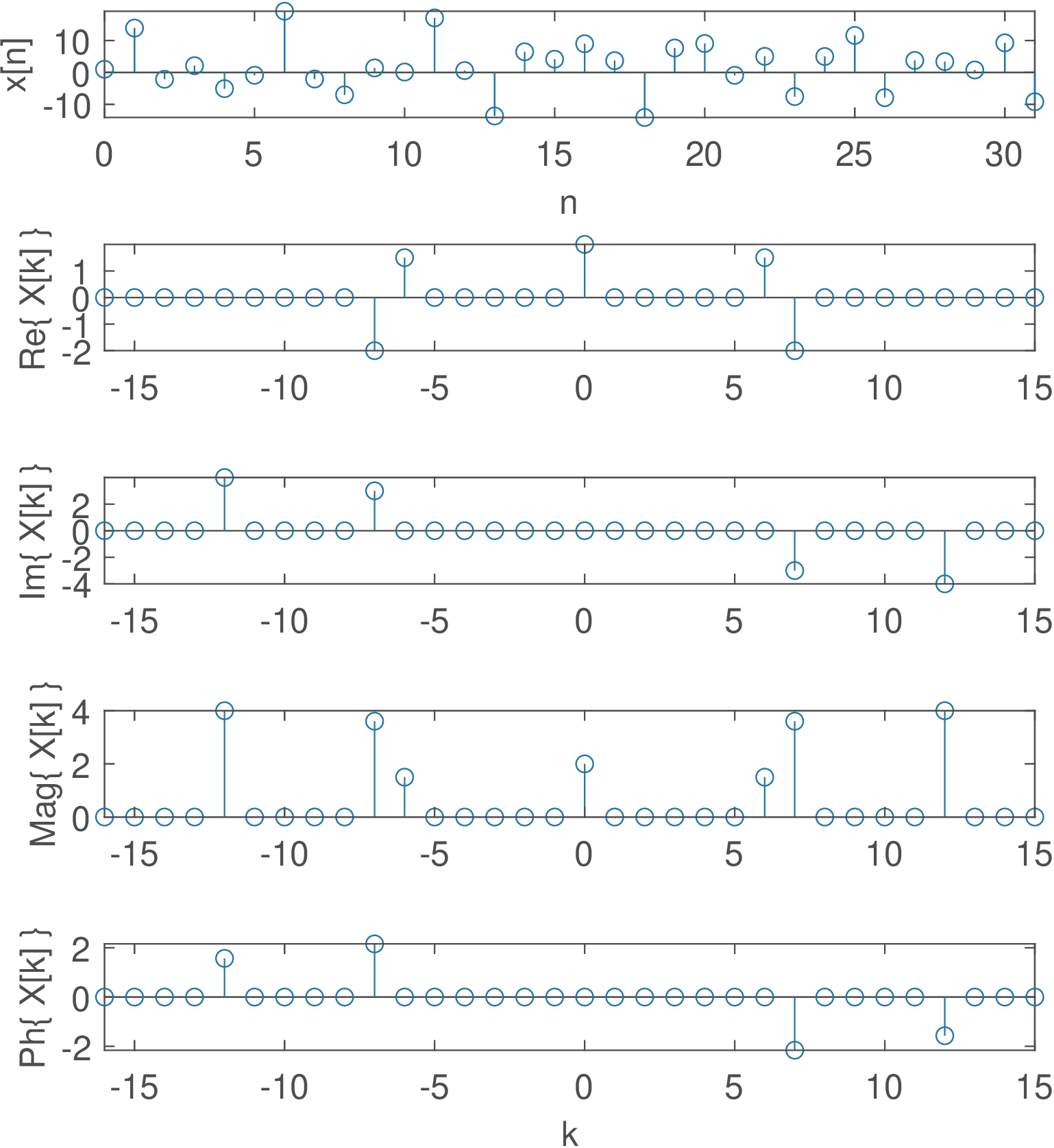

Application 2.9. DTFS analysis of a sum of sines and cosines. This example aims at illustrating how the cosines and sines are mapped in the DTFS, especially when two of them have the same frequency. Figure 2.40 shows the spectrum of a more complex example obtained with Listing 2.8.

1N=32; %number of DFT-points 2n=0:N-1; %abscissa to generate signal below 3x=2+3*cos(2*pi*6/32*n)+8*sin(2*pi*12/32*n)-... 4 + 4*cos(2*pi*7/32*n)+ 6*sin(2*pi*7/32*n); 5X=fft(x)/N; %calculate DTFS spectrum via DFT 6X(abs(X)<1e-12)=0; %mask numerical errors

Note in Figure 2.40 that the coefficient is due to the DC level, are due to the cosine , and are due to , and are due to the two parcels of frequency with the being represented by the real part () and represented by the imaginary part ().

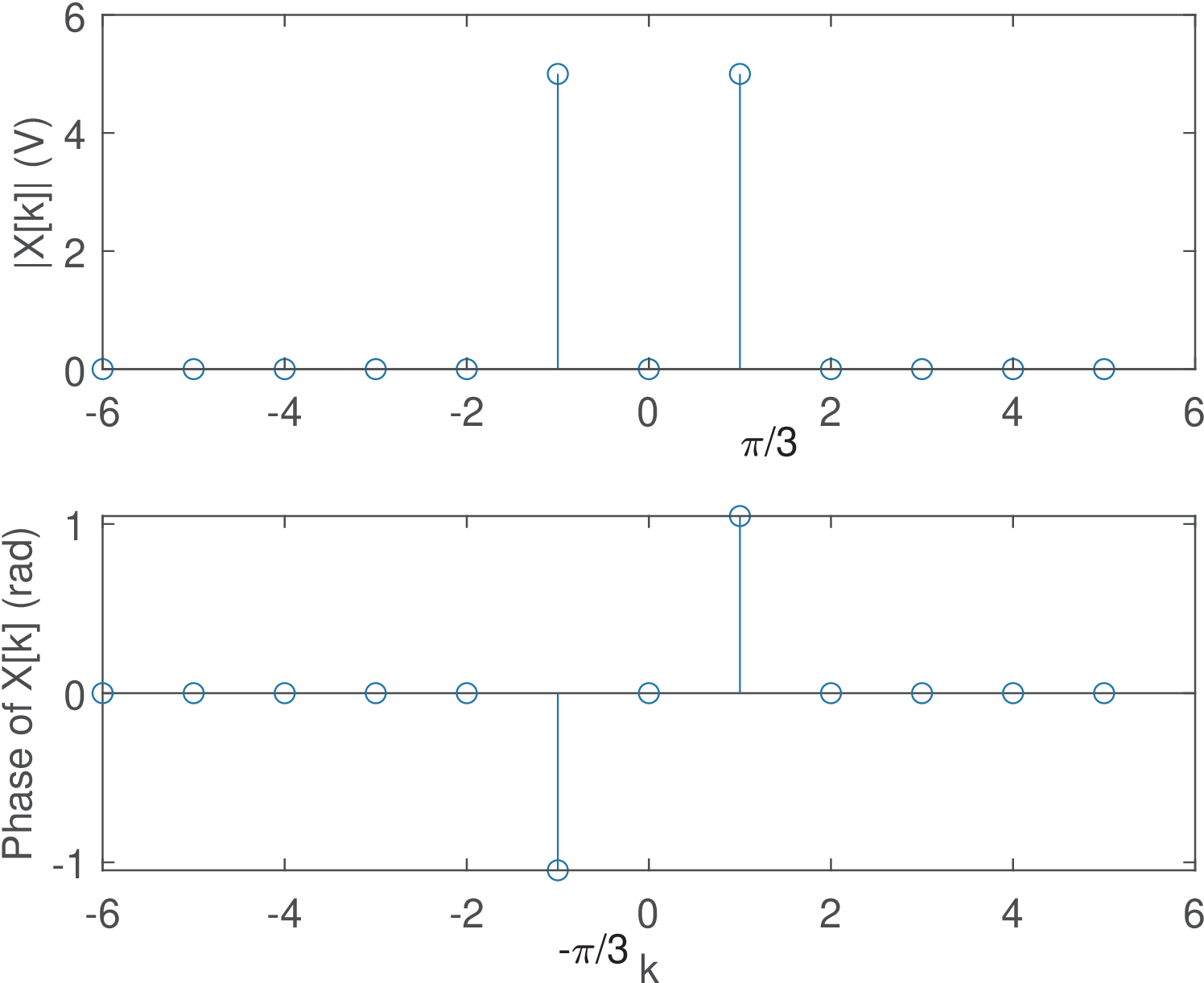

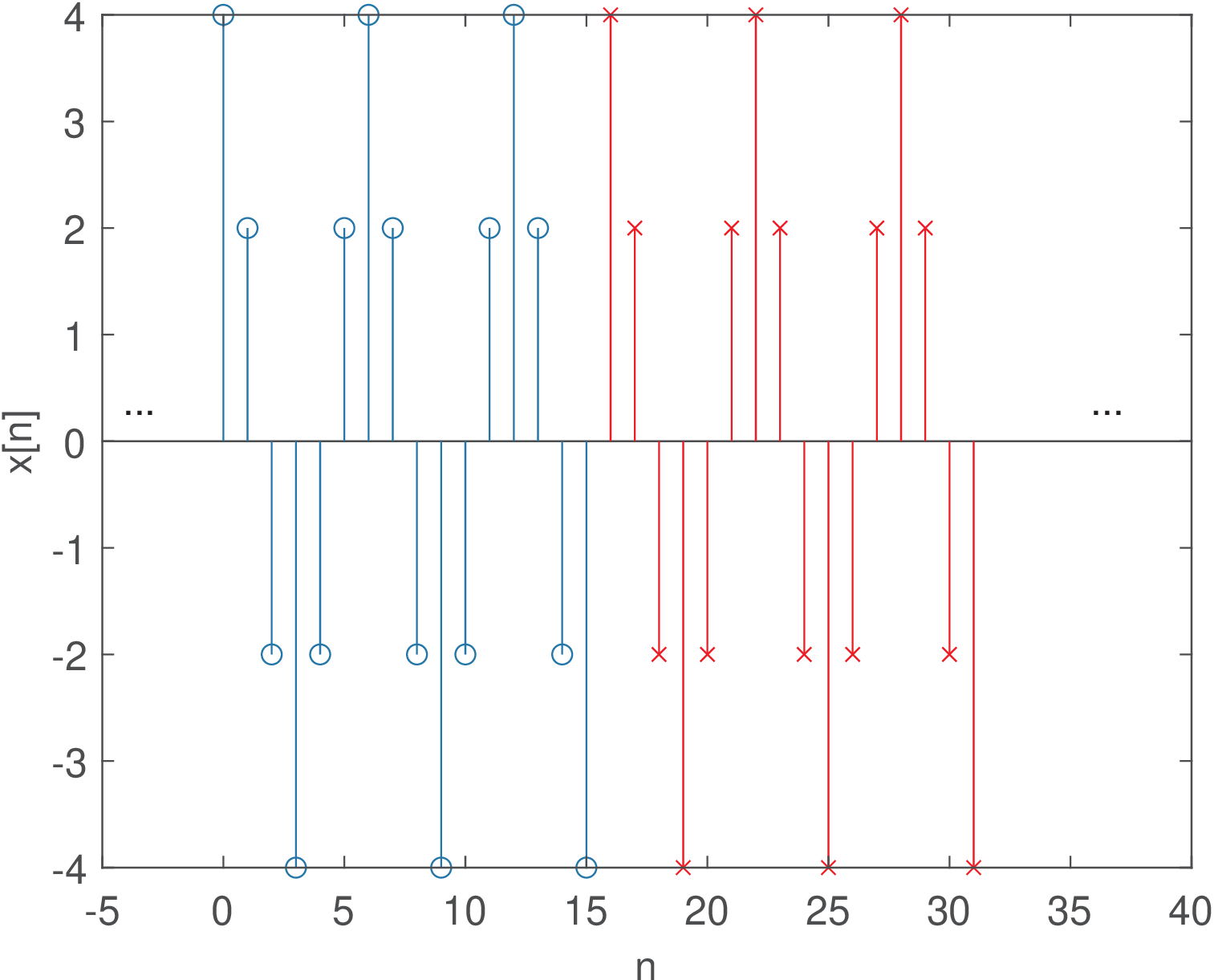

Application 2.10. Spurious frequency components: When the number of DFT points is not a multiple of the signal period. Figure 2.41 illustrates the DTFS spectrum of a signal with period of 6 samples obtained via a 16-point DFT. Note that because 16 is not a multiple of 6, the spectrum has many non-zero spurious components. The coefficients and , both with magnitude 1.78, are the closest to representing the angle rad. In fact, when using points to divide (see Figure 2.7), the angle increment is

|

| (2.64) |

which in this case is , and the DFT / DTFS can deal only with the angles rad. This explains why and are concentrating most of the power . The other coefficients absorb the remaining power that “leaks” due to the imperfect match of the cosine frequency with the discrete grid of 16 points imposed by the DFT / DFTS.

The creation of spurious components is associated to leakage and the picket-fence effect, which are discussed in Section 4.3. This phenomenon is very common when using the FFT to analyze signals that are non-periodic, have infinite duration, etc. In the current case, the spurious components could be avoided by choosing an appropriate number of points for the DFT / DTFS (a multiple of 6). In practice, spurious components due to FFT usage occur most of the times. Even when the original signal is periodic, say with period , it is typically not guaranteed that is a multiple of , where is the number of DFT points and the sampling period.

Figure 2.42 shows part of the signal obtained by repeating the segment of 16 samples depicted in Figure 2.41. This represents the signal “assumed” by the DFT / DTFS. Spurious components appear because, in spite of having a period of , an abrupt transition (from to 4) occurs in the boundary of the segments of 16 samples. In general, the periodic extension (that is assumed by the FFT when approximating a DTFS) of a signal not commensurate23 with its period, leads to discontinuities at the boundaries of the replicas of .

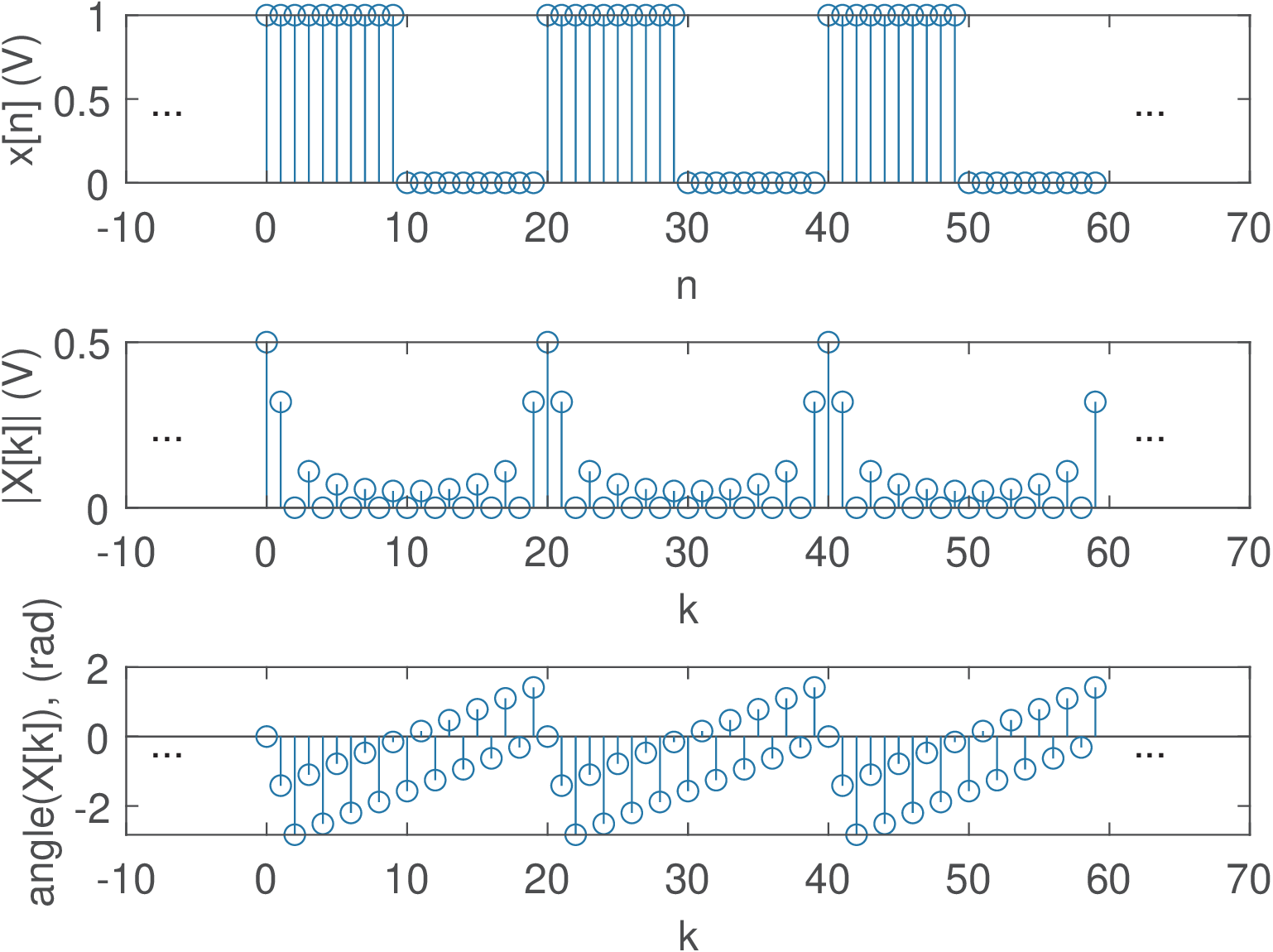

Application 2.11. DTFS for periodic discrete-time pulses. Assume a periodic train of pulses with period and one period described as from to and from to . Its DTFS coefficients are given by

which is the sum of terms of a geometric series with ratio . Applying Eq. (A.16) leads to

This expression can be simplified by applying Eq. (A.14) to both numerator and denominator:

|

| (2.65) |

for and then periodically repeated in . For the expression is undetermined (because of the fraction) and L’Hospital’s rule leads to .

Listing 2.9 obtains the DTFS of the periodic pulses in two distinct ways.

1N=10; N1=5; k=0:N-1; %define durations and k 2Xk=(1/N)*(sin(k*N1*pi/N)./sin(k*pi/N)) .* ... 3 exp(-j*k*pi/N*(N1-1)); %obtain DTFS directly 4Xk(1)=N1/N; %eliminate the NaN (not a number) in Xk(1) 5%second alternative, via DFT. Generate x[n]: 6xn=[ones(1,N1) zeros(1,N-N1)] %single period of x[n] 7Xk2=fft(xn)/N %DTFS via DFT, Xk2 is equal to Xk

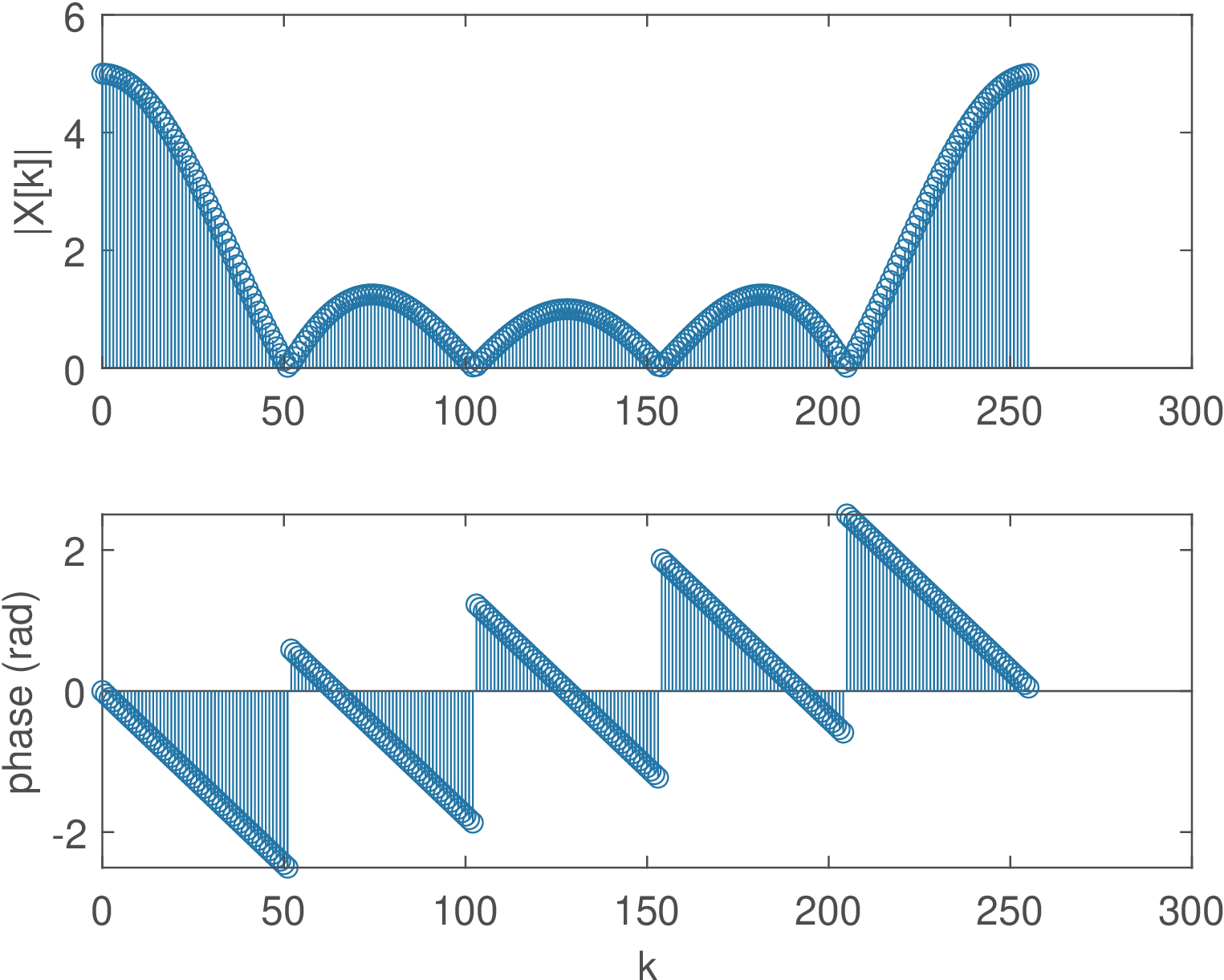

Figure 2.43 illustrates the result using and . Note that has the behavior of a sinc function, as expected. The DTFS phase in Figure 2.43 c) is the combined effect of the linear phase and the sign of in Eq. (2.65). This sign is responsible for Figure 2.43 c) not being a linear function of frequency.

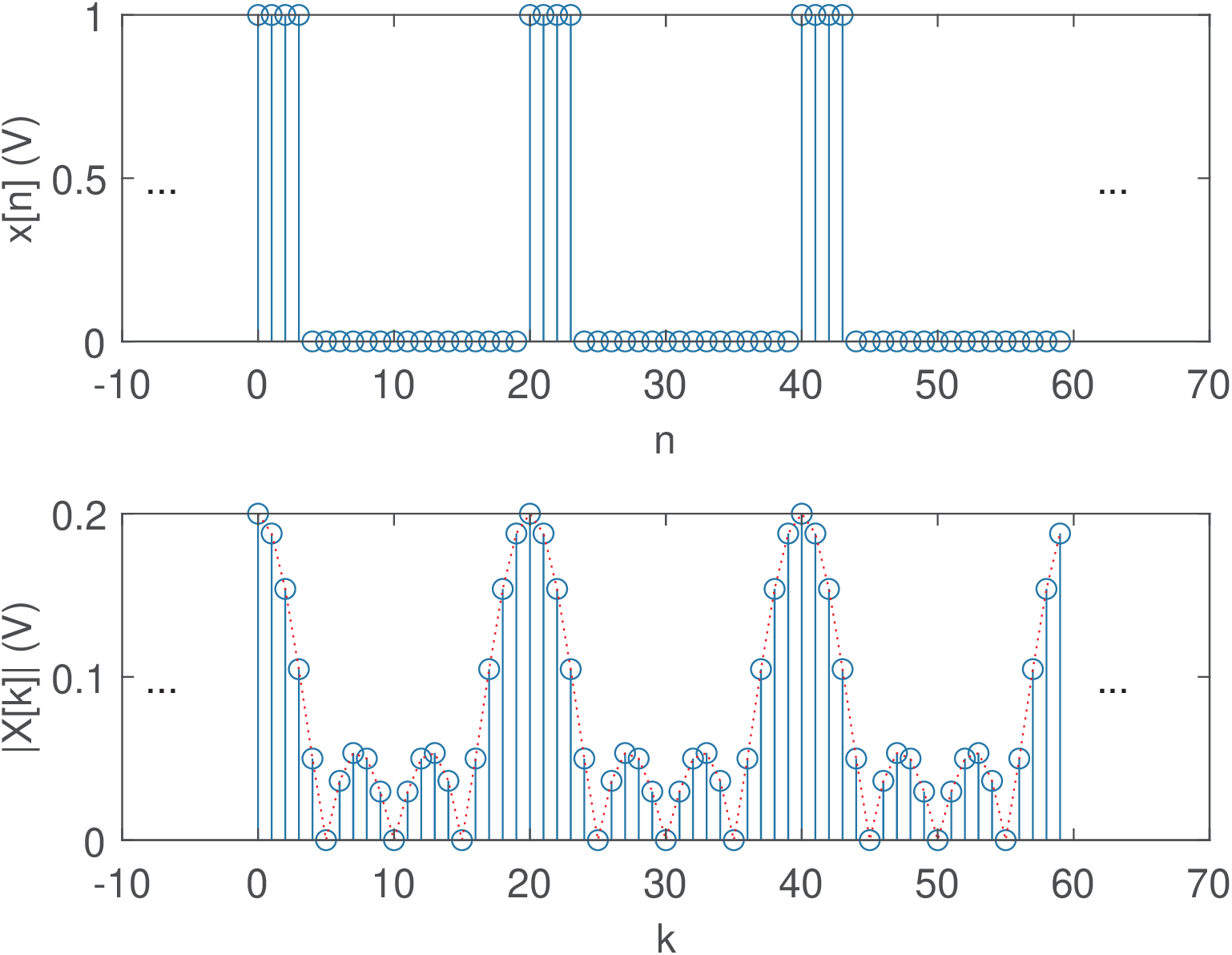

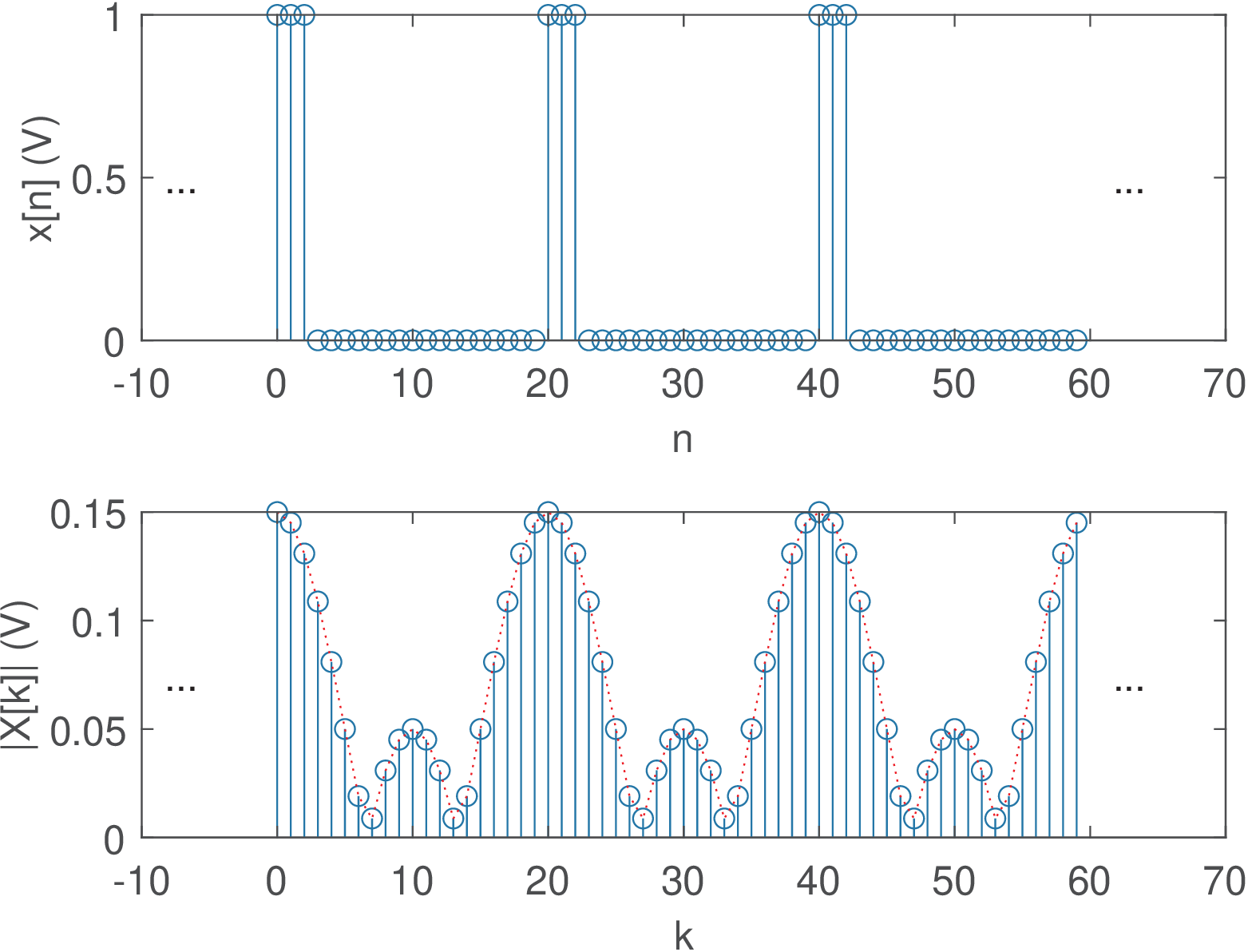

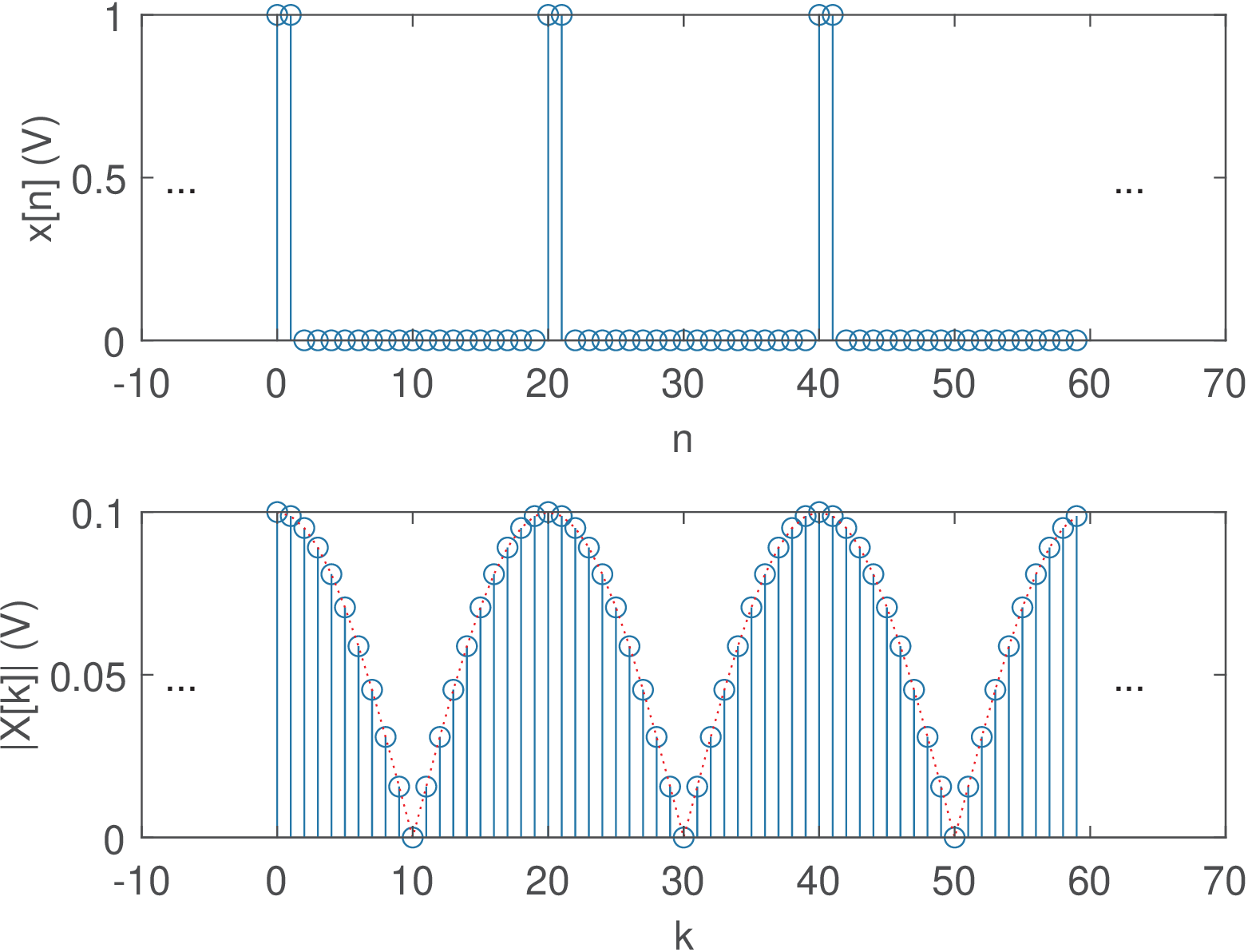

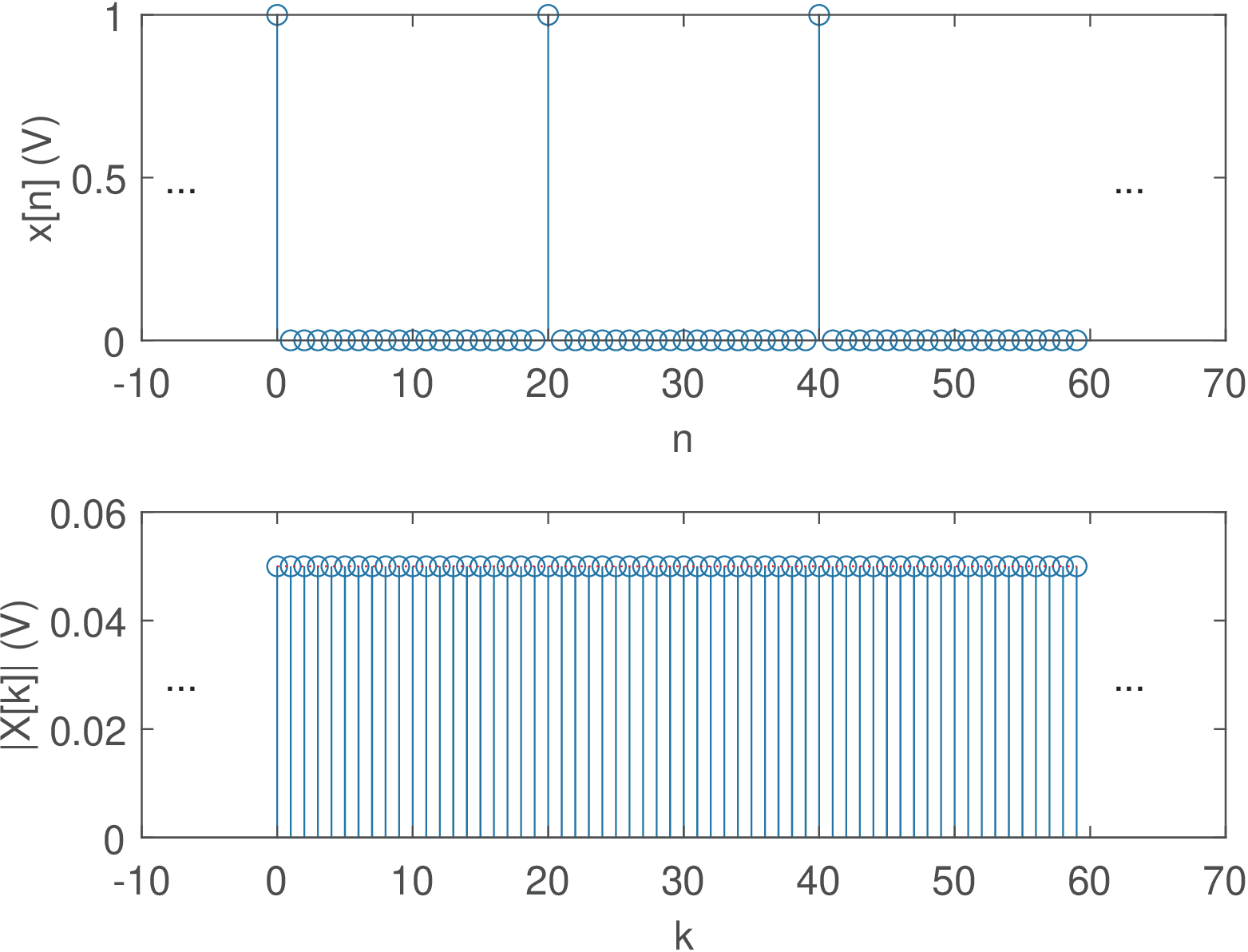

Figure 2.44 shows the behavior of the DTFS of when of Figure 2.43 is decreased from to 1 Note the duality between time and frequency: as the pulse duty cycle gets smaller (smaller ), the spectrum gets wider and with smaller maximum values. When , then . Also, in this case, dictates the number of nulls in the spectrum, while controls how the unit circle is sampled by the DFT.

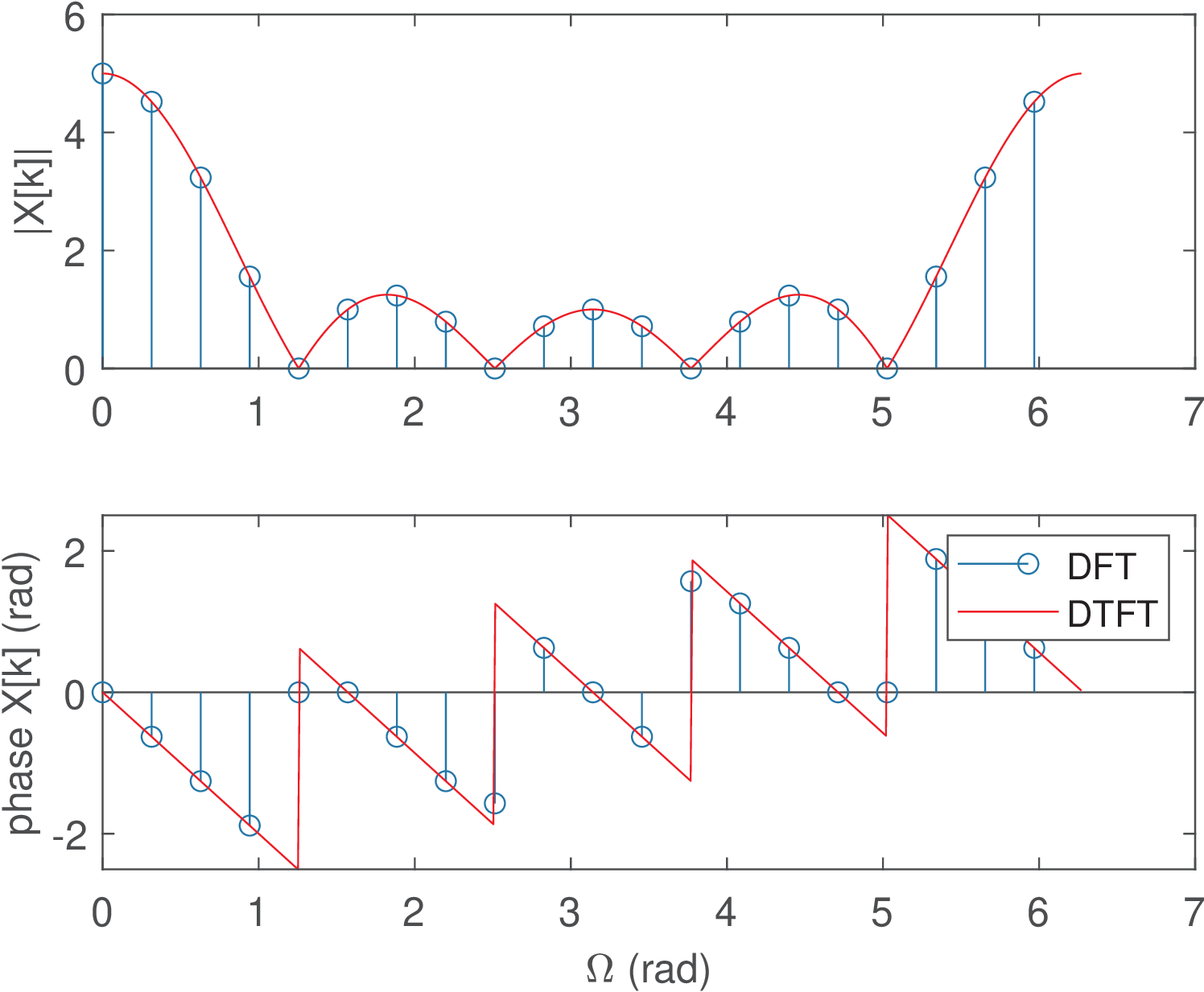

Application 2.12. Calculating the DTFT of a pulse via the DFT. Following steps similar to the ones that led to Eq. (2.65), the DTFT pair of a pulse is given by

|

| (2.66) |

Listing 2.10 illustrates how to obtain samples of the DTFT for a pulse with non-zero samples using a DFT of points.

1N=20; %DFT size 2N1=5; %num. non-zero samples in the (aperiodic) pulse 3x=[ones(1,N1) zeros(1,N-N1)]; %signal x[n] 4N2=512; %num. samples of the DTFT theoretical expression 5k=0:N2-1; %indices of freq. components 6wk = k*2*pi/N2; %angular frequencies 7Xk_fft = fft(x); %use N-point DFT to sample DTFT 8Xk_theory=(sin(wk*N1/2)./sin(wk/2)).*exp(-j*wk*(N1-1)/2); 9Xk_theory(1) = N1; %take care of 0/0 (NaN) at k=0 10subplot(211); stem(2*pi*(0:N-1)/N,abs(Xk_fft)); 11hold on, plot(wk,abs(Xk_theory),'r'); 12subplot(212); stem(2*pi*(0:N-1)/N,angle(Xk_fft)); 13hold on, plot(wk,angle(Xk_theory),'r');

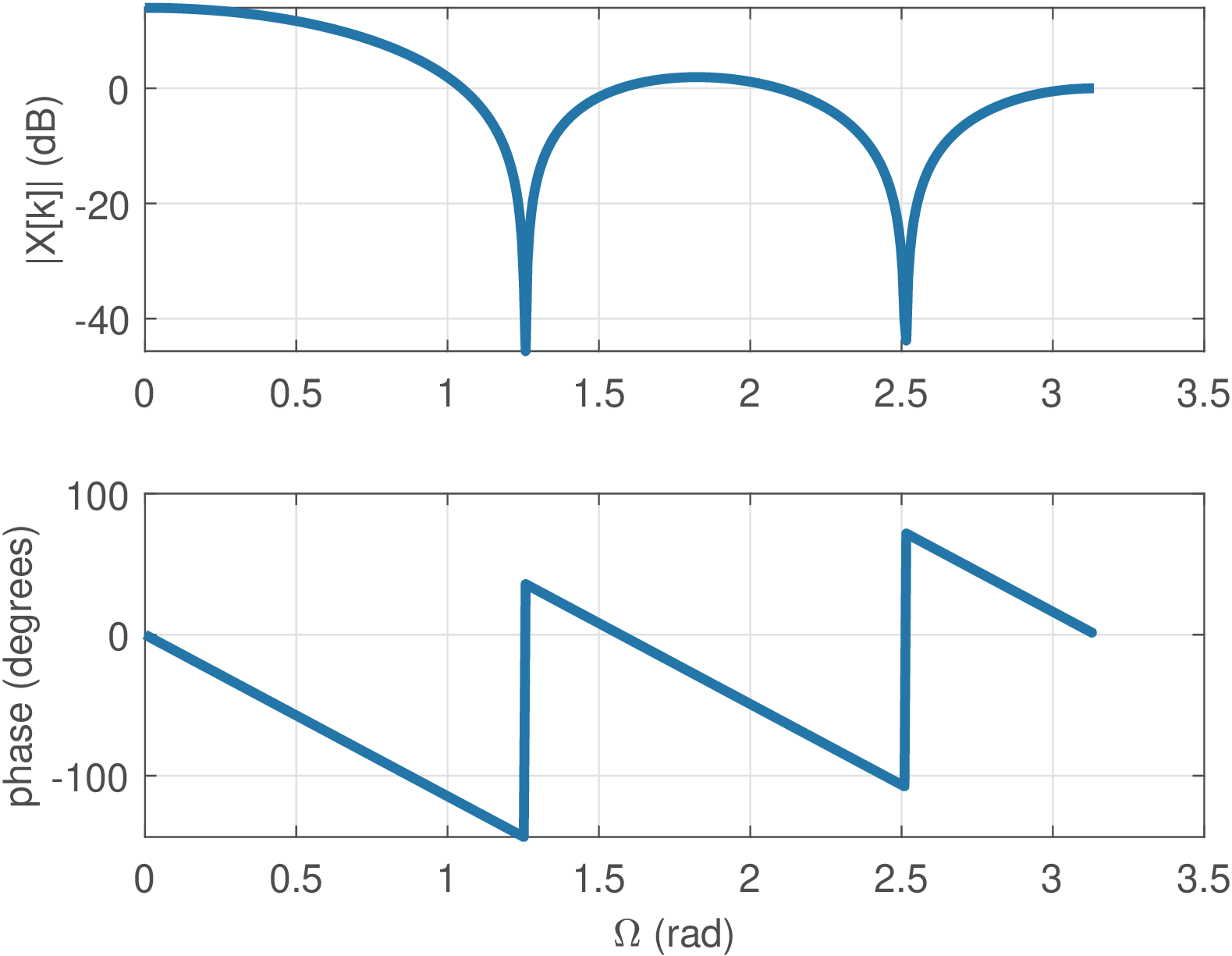

The code plots the DTFT superimposed to the DFT result, as illustrated in Figure 2.45.

As stated by Eq. (2.35), Figure 2.45 pictorially illustrates that the DFT values at the discrete frequencies coincide with the DTFT of the aperiodic pulse calculated at these frequencies using Eq. (2.66).

The DTFT can be calculated in a grid of frequencies with arbitrary resolution by increasing the number of DFT points. For example, using leads to the spectrum in Figure 2.46. Note that if x has less than samples, the command fft(x,N) conveniently extends its duration with zero-padding.

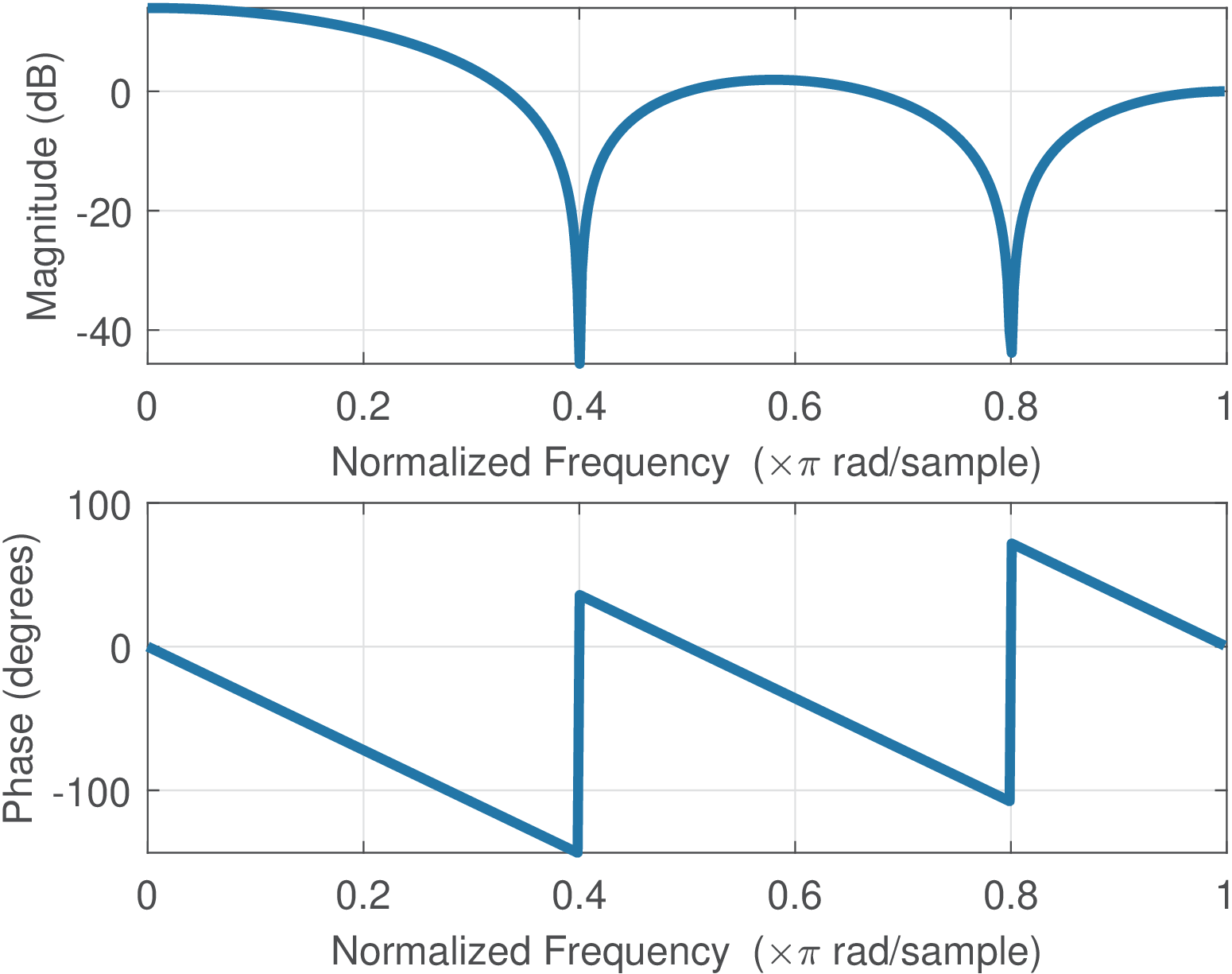

Application 2.13. Calculating the DTFT using Matlab/Octave’s freqz. When is relatively large, it is more convenient to use plot instead of stem (as in Figure 1.19), in spite of being discrete. This is also convenient when is used to represent values of as in this example. Matlab/Octave has the command freqz that uses the DFT to calculate the DTFT at a grid of discrete frequencies. Assuming the same vector x of the previous code snippet, the command freqz(x) generates Figure 2.47.

Note that Figure 2.46 and Figure 2.47 provide the same information in spite of looking quite different. For instance, Figure 2.47 is not explicitly showing that the DTFT is being calculated only at a grid of discrete frequencies.

To better understand how freqz works, Listing 2.11 mimics freqz and was used to obtain Figure 2.48.

1N1=5; %num. non-zero samples in the (aperiodic) pulse 2x=[ones(1,N1) zeros(1,2)]; %signal x[n] 3halfN=512; N=2*halfN; %half of the DFT dimension and N 4k=0:N-1; %indices of freq. components 5wk = k*2*pi/N; %angular frequencies 6Xk_fft=fft(x,N);%use 2N-DFT to get N positive freqs. 7Xk_fft=Xk_fft(1:halfN);%discard negative freqs. 8wk=(0:halfN-1)*(2*pi/N); %positive freq. grid 9subplot(211); plot(wk,20*log10(abs(Xk_fft))); %in dB 10subplot(212); plot(wk,angle(Xk_fft)*180/pi); %in degrees

It can be seen that Figure 2.48 is very similar to Figure 2.47. The only distinction is that, by default, Matlab/Octave represents the abscissa normalizing by , such that the maximum frequency is not but 1. It is interesting to note that Matlab/Octave and GNU Radio, for example, indicate in rad/sample, while this and other texts assume is given in radians ( is dimensionless).