2.2 Linear Transform

The goal in this section is to analyze a signal ( or ) using a linear transform. The following steps will be discussed:

- f 1.

- We will choose the linear transform.

- f 2.

- The linear transform will represent the signal using its basis functions, which are often orthogonal among themselves.

- f 3.

- The transform operation corresponds to finding the values called transform coefficients, which multiplied by the corresponding basis functions (one coefficient per basis functions) reconstruct the original signal.

To fully understand linear transforms, we will discuss these concepts using vectors and linear algebra. Later we will generalize from vectors to signals. We start this study of linear transform, associating it to a simple matrix multiplication.

2.2.1 Matrix multiplication corresponds to a linear transform

In linear algebra, any linear transformation1 (or transform) can be represented by a matrix . The linear transform operation is given by

|

| (2.1) |

where and are the input and output column vectors, respectively.

Example 2.1. Example of linear transform. The matrix

|

| (2.2) |

implements a transform that corresponds to a clockwise rotation of the input vector by an angle .

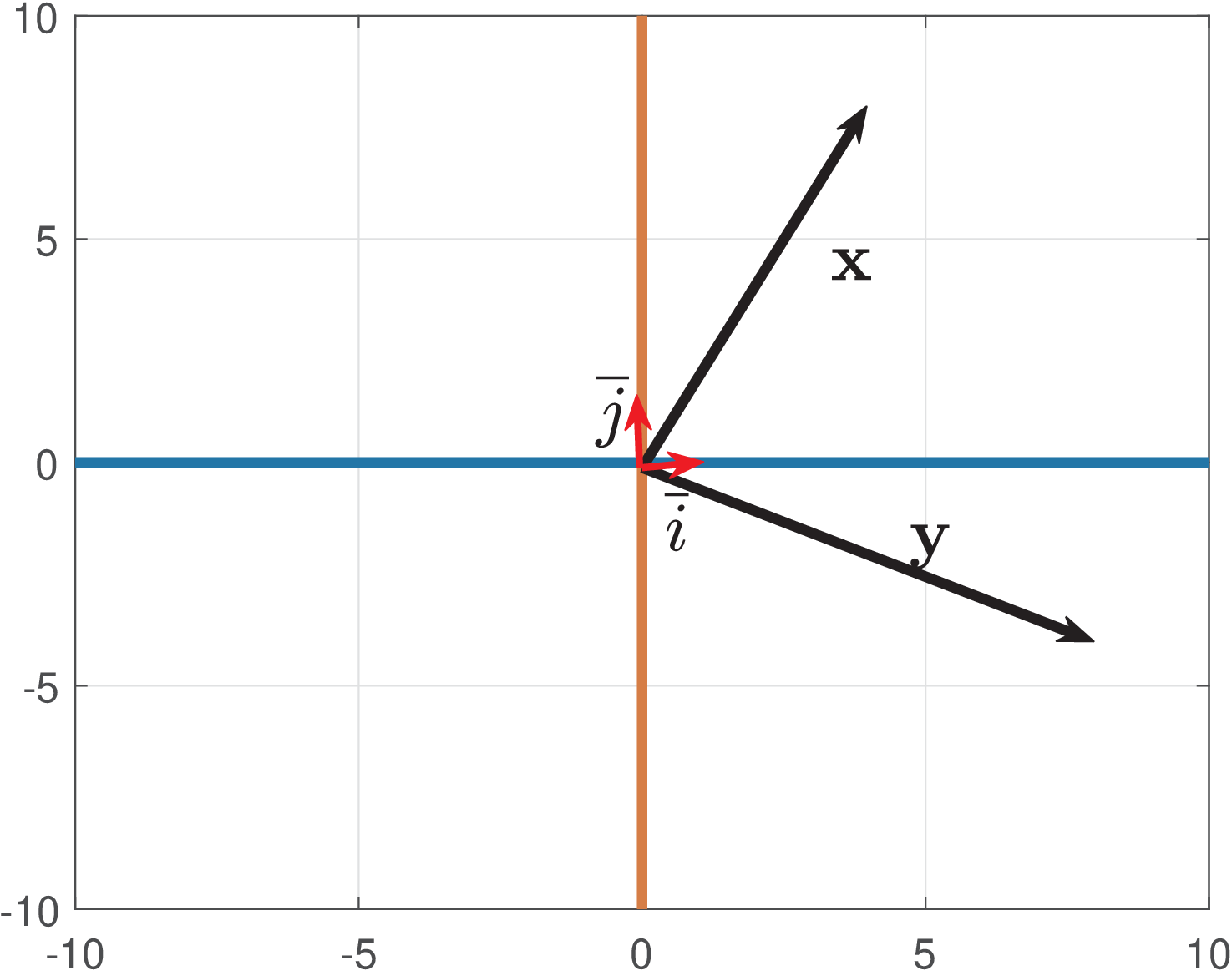

Figure 2.1 illustrates the rotation for a vector by an angle radians, i. e., , resulting in .

2.2.2 Basis: standard, orthogonal and orthonormal

Another important concept in linear transforms, which has origin in linear algebra, is the concept of basis. The linear combination of basis vectors allow to create any possible vector in the corresponding vector space. Many basis are orthogonal or orthonormal. Figure 2.1 indicates a pair of orthonormal2 vectors and that span . The vectors and form a standard basis and allow to easily represent any vector , such as . It is useful to get a geometric interpretation by studying basis vectors, and later generalize the concepts to basis functions that represent discrete or continuous-time signals.

When the basis vectors are organized as the columns of a matrix , the elements of the input vector indicate the coefficients of a linear combination of the basis functions that leads to . For example, in the case of the standard basis:

which is a trivial relation because is the identity matrix. More interesting transforms are used in practice.

Example 2.2. Interpreting the given example as a linear transform. Assume one is dealing with computer graphics and wants to rotate vectors. Eq. (2.2) of Example 2.1 with radians leads to (see Figure 2.1):

This can be interpreted as a transform as follows:

- f 1.

- We chose with as the linear transform.

- f 2.

- In this case, the elements of are interpreted as the transform coefficients, while is interpreted as the original vector.

The forward transform operation corresponds to finding the coefficients to compose . In the inverse operation, the coefficients would be the input values, and could be found using . In practice, we avoid the task of inverting a matrix and often choose with special properties. For instance, because the columns of are orthonormal, its inverse is equal to its Hermitian (and the basis vectors are the rows of ). Tricks to avoid inverting matrices will be further explored, alongside with the adoption of inner products.