4.5 Power spectral density (PSD)

In most cases, the signal under analysis has infinite energy, such as a deterministic power signal (e.g., a periodic signal) or a realization of a stationary random process. Therefore, the main interest in spectral analysis lies not in the ESD but in the PSD.

4.5.1 Main property of a PSD

A PSD describes the distribution of power over frequency. The frequency can be the linear frequency $f$ in Hz, the angular frequency $\omega$ in radians/s, or the discrete-time angular frequency $\Omega$, which is an angle in radians.

Before detailing the PSD definitions, this subsection presents their main property: in continuous-time, the average power $P$ can be obtained by integrating the PSD $S(f)$ over frequency,

$$P = \int_{-\infty}^{\infty} S(f)\, df, \tag{4.20}$$

with $S(f)$ in watts/Hz.

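Similar to the reasoning associated with Eq. (4.19), the version of Eq. (4.20) when the continuous-time PSD is a function $S(\omega)$ of $\omega$ in radians/s is

$$P = \frac{1}{2\pi}\int_{-\infty}^{\infty} S(\omega)\, d\omega, \tag{4.21}$$

and $S(\omega)$ is interpreted in units of watts per rad/s.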

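The corresponding expression in discrete-time is

$$P = \frac{1}{2\pi}\int_{\langle 2\pi \rangle} S(e^{j\Omega})\, d\Omega, \tag{4.22}$$

where the integral is taken over any interval of length $2\pi$ and $S(e^{j\Omega})$ is interpreted in units of watts per radians.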

Table 4.3 summarizes the discussed PSD functions.

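| Time | Independent variable | Definition | Main property |
|---|---|---|---|
| Continuous-time | $f$ (Hz) | $S(f) = \lim_{T\to\infty} \frac{1}{T}\mathbb{E}\left[\lvert X_T(f)\rvert^2\right]$ | $P = \int_{-\infty}^{\infty} S(f)\, df$ |
| Continuous-time | $\omega$ (rad/s) | $S(\omega) = \lim_{T\to\infty} \frac{1}{T}\mathbb{E}\left[\lvert X_T(\omega)\rvert^2\right]$ | $P = \frac{1}{2\pi}\int_{-\infty}^{\infty} S(\omega)\, d\omega$ |
| Discrete-time | $\Omega$ (rad) | $S(e^{j\Omega}) = \lim_{N\to\infty} \frac{1}{N}\mathbb{E}\left[\lvert X_N(e^{j\Omega})\rvert^2\right]$ | $P = \frac{1}{2\pi}\int_{\langle 2\pi \rangle} S(e^{j\Omega})\, d\Omega$ |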
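The discrete-time property can be checked numerically. The sketch below is only an illustration under stated assumptions: it uses the single-realization quantity $|X_N(e^{j\Omega})|^2/N$ (a periodogram) as a stand-in for the PSD, samples it on the FFT grid $\Omega_k = 2\pi k/N$, and the signal, seed and length are arbitrary choices not taken from the text.

```python
import numpy as np

rng = np.random.default_rng(0)    # fixed seed so the sketch is reproducible
N = 1024                          # number of samples (arbitrary choice)
x = 3.0 * rng.standard_normal(N)  # one realization, power close to 9 W

# Periodogram |X_N(e^{jΩ})|^2 / N sampled at Ω_k = 2πk/N via the FFT
S = np.abs(np.fft.fft(x)) ** 2 / N

# (1/2π) ∫ over 2π of S dΩ, approximated by a Riemann sum with ΔΩ = 2π/N
d_omega = 2 * np.pi / N
power_from_psd = np.sum(S) * d_omega / (2 * np.pi)

# Average power computed directly in the time domain
power_time = np.mean(np.abs(x) ** 2)

print(power_time, power_from_psd)
```

For this finite-length case the two values agree up to rounding, which is Parseval's relation for the DFT written in the form of the main property.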

4.5.2 PSD definitions

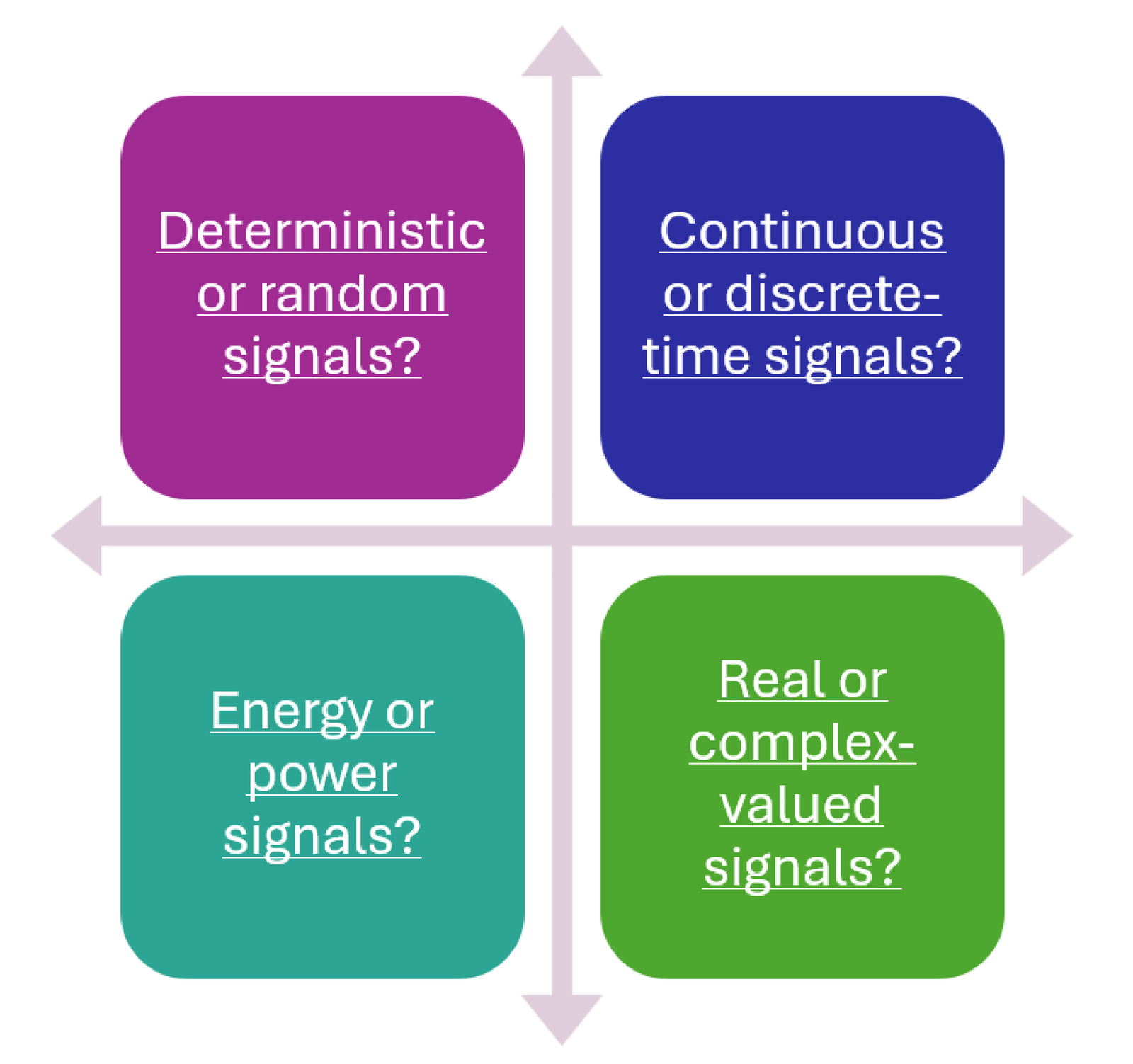

As discussed in Section 1.6, there are many categories of signals. Figure 4.24 illustrates some of them.

Similar to the definitions of correlation in Table 1.9, different categories of signals require distinct definitions of PSD. To simplify the discussion, some spectral analysis textbooks4 choose to emphasize discrete-time random signals and define the PSD for this kind of signal. Moreover, the spectral estimation problem is defined in these textbooks5 as:

- From a finite-length record $x[0], x[1], \ldots, x[N-1]$ of a second-order stationary random process, find an estimate $\hat{S}(e^{j\Omega})$ of its power spectral density $S(e^{j\Omega})$ for $\Omega \in [-\pi, \pi]$.

In other words, the context of this specific problem is restricted to discrete-time power signals with a duration of $N$ samples. Here we also discuss other cases, such as: a) continuous-time PSDs, b) deterministic signals and c) the theoretical PSD expressions for infinite-duration signals. This requires care in adopting the correct definition for each case.

For instance, the PSD for a continuous-time power signal can be defined as

$$S(f) = \lim_{T\to\infty} \frac{1}{T}\, \mathbb{E}\left[|X_T(f)|^2\right], \tag{4.23}$$

where $X_T(f)$ is the Fourier transform of a signal $x_T(t)$ obtained by multiplying the original signal $x(t)$ by a window of duration $T$. Alternatively, the PSD definition for continuous-time deterministic signals is

$$S(f) = \lim_{T\to\infty} \frac{|X_T(f)|^2}{T},$$

which is basically the ESD normalized by the time interval $T$.

An important method for obtaining the PSD of a wide-sense stationary (WSS) random process is given by the Wiener–Khinchin theorem,6 which states that the PSD is the Fourier transform of the autocorrelation function.

For instance, assuming $R_X(\tau)$ is the autocorrelation of a WSS random process $X(t)$, the PSD can be obtained via

$$S(f) = \int_{-\infty}^{\infty} R_X(\tau)\, e^{-j 2\pi f \tau}\, d\tau. \tag{4.24}$$

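Analogous results hold in the discrete-time case, where the PSD is the DTFT of the autocorrelation sequence:

$$S(e^{j\Omega}) = \sum_{l=-\infty}^{\infty} R_X[l]\, e^{-j\Omega l}, \tag{4.25}$$

where $l$ is the lag, and the inverse DTFT gives

$$R_X[l] = \frac{1}{2\pi}\int_{\langle 2\pi \rangle} S(e^{j\Omega})\, e^{j\Omega l}\, d\Omega. \tag{4.26}$$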

Similar constructions apply to deterministic finite-energy signals, where the ESD is the Fourier transform of the signal’s autocorrelation function. The next subsections provide more details about distinct PSD definitions.
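As an illustration of the discrete-time Wiener–Khinchin relation, the sketch below computes the DTFT of an autocorrelation sequence and recovers two lags with a numerical inverse DTFT. The process is an assumption made only for this example: a first-order moving average $x[n] = w[n] + b\,w[n-1]$ driven by white noise of variance $\sigma^2$, whose autocorrelation is nonzero only at lags $-1, 0, 1$. The names `sigma2`, `b` and `M` are illustrative.

```python
import numpy as np

sigma2 = 2.0   # variance of the white noise driving the process (assumed)
b = 0.5        # MA(1) coefficient (assumed)

# Autocorrelation of x[n] = w[n] + b w[n-1]: nonzero only at lags -1, 0, 1
lags = np.array([-1, 0, 1])
R = sigma2 * np.array([b, 1 + b**2, b])

# DTFT of the autocorrelation on a uniform grid covering one period
M = 512
omega = 2 * np.pi * np.arange(M) / M
S = np.real(np.sum(R[:, None] * np.exp(-1j * np.outer(lags, omega)), axis=0))

# Closed form for the same process: sigma2 * |1 + b e^{-jΩ}|^2
S_closed = sigma2 * np.abs(1 + b * np.exp(-1j * omega)) ** 2

# Inverse DTFT at lags 0 and 1: (1/2π) ∫ S e^{jΩl} dΩ becomes a mean over the grid
R0 = np.mean(S)
R1 = np.real(np.mean(S * np.exp(1j * omega)))

print(R0, R1)
```

The value at lag 0 is the average power of the process, which ties this relation back to the main property of the PSD.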

4.5.3 Advanced: PSD of random signals

It is natural to focus the study of PSD estimation on random signals, because they are important in many applications, such as digital communications. One useful model for these signals is the wide-sense stationary (WSS) random process $X(t)$ with autocorrelation $R_X(\tau)$ (see definitions in Appendix B.4). In many spectral analysis problems,7 besides being WSS, the random process is assumed to be autocorrelation ergodic.8

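The PSD for a continuous-time power signal $x(t)$ corresponding to a realization of a WSS stochastic process is defined as

$$S(f) = \lim_{T\to\infty} \frac{1}{T}\, \mathbb{E}\left[|X_T(f)|^2\right], \tag{4.27}$$

where $X_T(f)$ is the Fourier transform of a truncated (windowed) version $x_T(t)$ of $x(t)$ with duration $T$. In other words, $x_T(t) = x(t)\,w(t)$, where $w(t) = 1$ for $-T/2 \le t \le T/2$ and zero otherwise.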

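Similar to Eq. (4.27), the PSD for a discrete-time power signal $x[n]$ corresponding to a realization of a WSS stochastic process is defined as

$$S(e^{j\Omega}) = \lim_{N\to\infty} \frac{1}{N}\, \mathbb{E}\left[|X_N(e^{j\Omega})|^2\right], \tag{4.28}$$

where $X_N(e^{j\Omega})$ is the DTFT of $x_N[n]$, a truncated version of $x[n]$ obtained via a rectangular window of $N$ non-zero samples. The unit of $S(e^{j\Omega})$ is watts per radians.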

In practice, only a finite number of realizations of the process is available, and often only one. Fortunately, ergodicity of the autocorrelation can be assumed in many cases, and the ensemble averages can then be replaced by averages taken over time (see Appendix B.3). Besides, the number $N$ of samples (the duration of $x_N[n]$) is often limited to a given value that can be determined, for example, by the time over which the process can be considered stationary. For example, in speech analysis applications, it is typically assumed that the process of vowel production is quasi-stationary over segments with durations from 40 to 80 ms. With limited-duration signals, the challenge for spectral analysis is to obtain accurate estimates, as discussed in this chapter.

For both Eq. (4.27) and Eq. (4.28), windows other than the rectangular one can be used when the signal under analysis has a short duration. The PSD definition itself, however, assumes a rectangular window whose duration goes to infinity.
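The role of the expected value in the discrete-time definition can be illustrated by replacing it with a sample mean over many realizations. The sketch below assumes white Gaussian noise of power `sigma2`, for which the PSD is flat and equal to `sigma2`, and keeps $N$ finite. The values of `N` and `num_real` and the seed are arbitrary choices made for this example.

```python
import numpy as np

rng = np.random.default_rng(1)
sigma2 = 1.0      # power of the white process (assumed)
N = 64            # samples per realization (rectangular window length)
num_real = 2000   # number of realizations used to approximate E[.]

# Each row is one realization of white Gaussian noise
x = np.sqrt(sigma2) * rng.standard_normal((num_real, N))

# |X_N(e^{jΩ})|^2 / N for every realization, sampled at Ω_k = 2πk/N
periodograms = np.abs(np.fft.fft(x, axis=1)) ** 2 / N

# The sample mean over realizations stands in for the expected value
S_est = periodograms.mean(axis=0)

# Largest deviation from the flat theoretical PSD
max_dev = np.max(np.abs(S_est - sigma2))
print(max_dev)
```

A single row of `periodograms` fluctuates strongly around `sigma2`, while the average over realizations is close to flat, which is why the definition needs the expectation for random signals.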

4.5.4 Advanced: PSD of deterministic and periodic signals

Noticing from Eq. (4.15) and (4.18) that the squared magnitude of the signal's Fourier transform provides the energy distribution over frequency, one can intuitively obtain the power distribution by dividing the ESD by “time”, that is, by a normalization factor that converts energy into power.

The PSD definition that is adopted for continuous-time deterministic signals (and does not require the expected value used in Eq. (4.27)) is

$$S(f) = \lim_{T\to\infty} \frac{|X_T(f)|^2}{T},$$

which is basically the ESD normalized by the time interval $T$.
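To see how the normalization by the time interval behaves, the sketch below evaluates $|X_T(f)|^2/T$ for a cosine observed over two different durations. It is an approximation under stated assumptions: continuous time is emulated with a sampling rate `fs`, the Fourier transform is approximated by a Riemann sum (`Ts` times the FFT), the window spans $[0, T)$ instead of being centered (which does not change the magnitude), and `A`, `fc` and the durations are arbitrary.

```python
import numpy as np

A, fc = 2.0, 50.0   # amplitude and frequency in Hz (assumed values)
fs = 1000.0         # sampling rate used to emulate continuous time
Ts = 1 / fs

def truncated_psd(T):
    """Return the frequency spacing 1/T and |X_T(f)|^2 / T for a cosine seen over T seconds."""
    N = int(round(T * fs))
    t = np.arange(N) * Ts
    x = A * np.cos(2 * np.pi * fc * t)
    X_T = Ts * np.fft.fft(x)   # Riemann-sum approximation of the Fourier transform
    return 1 / T, np.abs(X_T) ** 2 / T

df1, S1 = truncated_psd(1.0)
df2, S2 = truncated_psd(4.0)

# Area under each curve approximates the average power A^2 / 2
power1 = np.sum(S1) * df1
power2 = np.sum(S2) * df2

# The peak grows in proportion to T, as expected for a spectral line
peak_ratio = S2.max() / S1.max()
print(power1, power2, peak_ratio)
```

The area stays at the average power while the peak grows with the observation time, which is the finite-duration counterpart of an impulse in the limit.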

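A special case of deterministic signals is that of periodic signals. Assuming a continuous-time signal $x(t)$ with period $T_0$, its PSD is

$$S(f) = \sum_{k=-\infty}^{\infty} |c_k|^2\, \delta(f - k F_0), \tag{4.29}$$

where $c_k$ are the Fourier series coefficients and $F_0 = 1/T_0$ is the fundamental frequency. Similarly, for $S(\omega)$, the expression is

$$S(\omega) = 2\pi \sum_{k=-\infty}^{\infty} |c_k|^2\, \delta(\omega - k \omega_0), \tag{4.30}$$

where $\omega_0 = 2\pi F_0$ rad/s.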

In summary, the PSD of a deterministic (non-random) periodic signal is composed of impulses with areas determined by the squared magnitudes of the Fourier series coefficients.

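Example 4.9. PSD of a continuous-time sinusoid. If $x(t) = A\cos(2\pi f_c t)$, then $S(f) = \frac{A^2}{4}\left[\delta(f + f_c) + \delta(f - f_c)\right]$ and the average power is $P = \int_{-\infty}^{\infty} S(f)\, df = A^2/2$.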

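When considering a discrete-time periodic signal $x[n]$, its PSD can be obtained by first considering an expression valid for the frequency range $0 \le \Omega < 2\pi$:

$$\tilde{S}(e^{j\Omega}) = 2\pi \sum_{k=0}^{N_0-1} |c_k|^2\, \delta(\Omega - k\Omega_0), \tag{4.31}$$

where $N_0$ is the period, $\Omega_0 = 2\pi/N_0$ is the fundamental frequency, and $c_k$ are the DTFS coefficients of $x[n]$. Finally, the PSD is simply the periodic repetition of $\tilde{S}(e^{j\Omega})$:

$$S(e^{j\Omega}) = \sum_{p=-\infty}^{\infty} \tilde{S}(e^{j(\Omega + 2\pi p)}). \tag{4.32}$$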

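Example 4.10. PSD of a discrete-time sinusoid. If $x[n] = A\cos(\Omega_1 n)$ (assume $\Omega_1$ obeys Eq. (1.21) so that $x[n]$ is periodic), then $\tilde{S}(e^{j\Omega}) = \frac{\pi A^2}{2}\left[\delta(\Omega - \Omega_1) + \delta(\Omega - 2\pi + \Omega_1)\right]$ for $0 \le \Omega < 2\pi$ and

$$S(e^{j\Omega}) = \frac{\pi A^2}{2} \sum_{p=-\infty}^{\infty} \left[\delta(\Omega - \Omega_1 + 2\pi p) + \delta(\Omega + \Omega_1 + 2\pi p)\right]$$

provides its PSD, which has period $2\pi$, as expected.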
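The impulse areas for a discrete-time sinusoid can be checked with a few lines of code. The sketch below assumes the DTFS convention $c_k = \frac{1}{N_0}\sum_{n=0}^{N_0-1} x[n]\,e^{-j 2\pi k n / N_0}$, so that the coefficients are the FFT of one period divided by $N_0$, and takes each impulse area as $2\pi |c_k|^2$. The amplitude `A`, period `N0` and index `m` are arbitrary values chosen for illustration.

```python
import numpy as np

A = 3.0
N0 = 16                        # period in samples (assumed)
m = 3                          # sinusoid frequency is Ω1 = 2π m / N0
omega1 = 2 * np.pi * m / N0

n = np.arange(N0)
x = A * np.cos(omega1 * n)     # one period of the sinusoid

# DTFS coefficients over one period (1/N0 normalization assumed)
c = np.fft.fft(x) / N0

# Impulse areas located at Ω = 2πk/N0, for k = 0, ..., N0-1
areas = 2 * np.pi * np.abs(c) ** 2

# Indices of the impulses that are not numerically zero
nonzero = np.flatnonzero(areas > 1e-9)

# (1/2π) times the total area over one period is the average power
power = np.sum(areas) / (2 * np.pi)
print(nonzero, areas[nonzero], power)
```

Only the indices `m` and `N0 - m` carry impulses, each with area $\pi A^2/2$, and the total power is $A^2/2$.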

4.5.5 Advanced: Fourier modulation theorem applied to PSDs

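If $S_X(f)$ is the PSD of a WSS random process $X(t)$, the PSD of a new process $Y(t) = X(t)\, e^{j 2\pi f_c t}$ is

$$S_Y(f) = S_X(f - f_c). \tag{4.33}$$

Similarly, if $Y(t) = X(t)\cos(2\pi f_c t)$, then

$$S_Y(f) = \frac{1}{4}\left[S_X(f + f_c) + S_X(f - f_c)\right]. \tag{4.34}$$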

To see why Eq. (4.33) (and, consequently, Eq. (4.34)) is true, recall the Wiener–Khinchin theorem of Eq. (4.24) and the autocorrelation definition of Eq. (1.52). Generally speaking, when modifying a WSS process $X(t)$, the effect on its PSD can be obtained by checking how the modification affects its autocorrelation, and then relating this to the frequency domain using Eq. (4.24). For example, multiplying $X(t)$ by a scalar $\alpha$ corresponds to scaling its autocorrelation by $|\alpha|^2$ and leads to a PSD $|\alpha|^2 S_X(f)$, given the linearity of the Fourier transform.

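According to this reasoning, a proof sketch of Eq. (4.33) follows, taking $R_X(\tau) = \mathbb{E}[X(t+\tau)X^*(t)]$:

$$R_Y(\tau) = \mathbb{E}\left[X(t+\tau)\, e^{j 2\pi f_c (t+\tau)}\, X^*(t)\, e^{-j 2\pi f_c t}\right] = R_X(\tau)\, e^{j 2\pi f_c \tau} \;\Leftrightarrow\; S_Y(f) = S_X(f - f_c).$$

Eq. (4.34) can be obtained by decomposing the cosine into two complex exponentials and taking into account that the factor $1/2$ multiplying each exponential leads to the factor $1/4$ in the PSD expression. Eq. (4.34) shows that multiplying a signal by a cosine of unit amplitude yields a signal with half of the original power.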
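The autocorrelation-based reasoning can be reproduced numerically in a discrete-time analog, which is used here only because it is easy to evaluate exactly. The sketch assumes a process with a finite-support autocorrelation (a first-order moving average with hypothetical parameters `sigma2` and `b`), and, for the cosine case, a carrier with a uniformly distributed random phase so that the product is WSS and its autocorrelation is the original one multiplied by $\cos(\Omega_c l)/2$.

```python
import numpy as np

sigma2, b = 1.0, 0.8                        # example process parameters (assumed)
lags = np.array([-1, 0, 1])
R_x = sigma2 * np.array([b, 1 + b**2, b])   # MA(1) autocorrelation

def psd_from_autocorr(R, omega):
    """Real part of the DTFT of an autocorrelation defined on `lags`, evaluated at omega."""
    return np.real(np.sum(R[:, None] * np.exp(-1j * np.outer(lags, omega)), axis=0))

M = 256
omega = 2 * np.pi * np.arange(M) / M
omega_c = 2 * np.pi * 32 / M                # carrier frequency (arbitrary)

# Complex exponential: the autocorrelation is multiplied by e^{jΩc l}
S_y_exp = psd_from_autocorr(R_x * np.exp(1j * omega_c * lags), omega)
shift_ok = np.allclose(S_y_exp, psd_from_autocorr(R_x, omega - omega_c))

# Cosine with random phase: the autocorrelation is multiplied by cos(Ωc l) / 2
S_y_cos = psd_from_autocorr(R_x * np.cos(omega_c * lags) / 2, omega)
S_sum = psd_from_autocorr(R_x, omega - omega_c) + psd_from_autocorr(R_x, omega + omega_c)
cos_ok = np.allclose(S_y_cos, 0.25 * S_sum)

# Ratio between the powers after and before multiplying by the cosine
power_ratio = np.mean(S_y_cos) / np.mean(psd_from_autocorr(R_x, omega))
print(shift_ok, cos_ok, power_ratio)
```

The exponential shifts the PSD, the cosine produces two shifted copies scaled by $1/4$, and the power ratio of $1/2$ matches the observation about a unit-amplitude cosine.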