2.12 Signal Spaces and Constellations

This section concerns the representation of waveforms by vectors, which facilitates the analysis of communication systems. The discussion introduces the vector-space interpretation of signals. Most signals of interest originally lie in an infinite-dimensional signal space. The vector and signal spaces will be related with the aid of a signal constellation.

Note that the whole discussion concerns a single channel use, i.e., the transmission of a single symbol. The implicit assumption is that the relations among signal samples at distinct time instants are not of interest, or that proper steps have been taken to eliminate such relations.

2.12.1 Signals as linear combinations of orthogonal functions

First, assume a single channel use, i.e., the transmission of a single symbol $\mathbf{m}$. Recall that, for PAM, as exemplified in Eq. (2.1), this symbol is a scalar $m$ because the PAM dimension is $D=1$. In the general case, the $n$-th symbol $\mathbf{m}[n]$ is a vector with $D$ elements and, instead of a single PAM shaping pulse $p(t)$, there is a function $\varphi_i(t)$ to be multiplied by the $i$-th symbol element $m_i$.

It is imposed that the $D$ basis functions compose an orthonormal set $\{\varphi_i(t)\}_{i=1}^{D}$ such that $\langle \varphi_i(t), \varphi_k(t) \rangle = 1$ if $i=k$, and 0 otherwise. The set can be obtained, e.g., via the Gram-Schmidt procedure discussed in Section A.13, adapted to use continuous-time signals instead of vectors.
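The orthonormality condition can be checked numerically. The following is a minimal Octave/Matlab sketch, assuming discrete-time versions of the functions stored as matrix columns. The names `V`, `B` and `G`, the value of `S` and the chosen starting functions are illustrative assumptions, not taken from the text. A Gram-Schmidt loop builds the set, and the matrix of all pairwise inner products is compared with the identity.

```matlab
S = 64;                          % samples per function (assumed value)
n = (0:S-1)';                    % discrete-time index
V = [ones(S,1) n/S (n/S).^2];    % linearly independent, non-orthogonal columns
D = size(V, 2);                  % number of basis functions
B = zeros(S, D);                 % columns will hold the orthonormal set
for i = 1:D
    v = V(:, i);
    for k = 1:i-1
        v = v - (B(:, k)' * v) * B(:, k);   % remove component along B(:,k)
    end
    B(:, i) = v / norm(v);       % normalize to unit energy
end
G = B' * B;                      % Gram matrix: entry (i,k) is <phi_i, phi_k>
maxDeviation = max(max(abs(G - eye(D))));
disp(maxDeviation)               % close to zero for an orthonormal set
```

If `G` differs noticeably from the identity, the inner products used later to recover the symbols would mix contributions from distinct symbol elements.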

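Hence, the information corresponding to the constellation symbol $\mathbf{m} = [m_1, \ldots, m_D]$ is conveyed by a signal $s(t)$ created by the linear combination

$$ s(t) = \sum_{i=1}^{D} m_i \, \varphi_i(t) \tag{2.29} $$

and the following signal represents an infinitely long sequence of symbols:

$$ s(t) = \sum_{n=-\infty}^{\infty} \sum_{i=1}^{D} m_i[n] \, \varphi_i(t - n T_{\text{sym}}), \tag{2.30} $$

where $T_{\text{sym}}$ is the symbol period. For PAM, Eq. (2.30) simplifies to

$$ s(t) = \sum_{n=-\infty}^{\infty} m[n] \, p(t - n T_{\text{sym}}) \tag{2.31} $$

because $D=1$ and the only basis function is the shaping pulse $p(t)$.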
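A discrete-time version of the PAM case can be sketched as follows. This is only an illustration under stated assumptions: the oversampling factor `L`, the rectangular pulse and the symbol values are hypothetical choices, and the infinite sum is truncated to four symbols.

```matlab
L = 4;                           % samples per symbol (assumed oversampling)
p = ones(L, 1) / sqrt(L);        % rectangular shaping pulse with unit energy
m = [3 -1 1 -3];                 % hypothetical 4-PAM symbol sequence
s = zeros(L * length(m), 1);     % transmit waveform samples
for k = 1:length(m)
    idx = (k-1)*L + (1:L);       % samples occupied by the k-th symbol
    s(idx) = s(idx) + m(k) * p;  % add the shifted and scaled pulse
end
waveformEnergy = sum(s.^2)       % equals sum(m.^2) for this pulse
```

Because the shifted pulses do not overlap and have unit energy, the waveform energy coincides with the sum of the squared symbols.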

2.12.2 Constellations

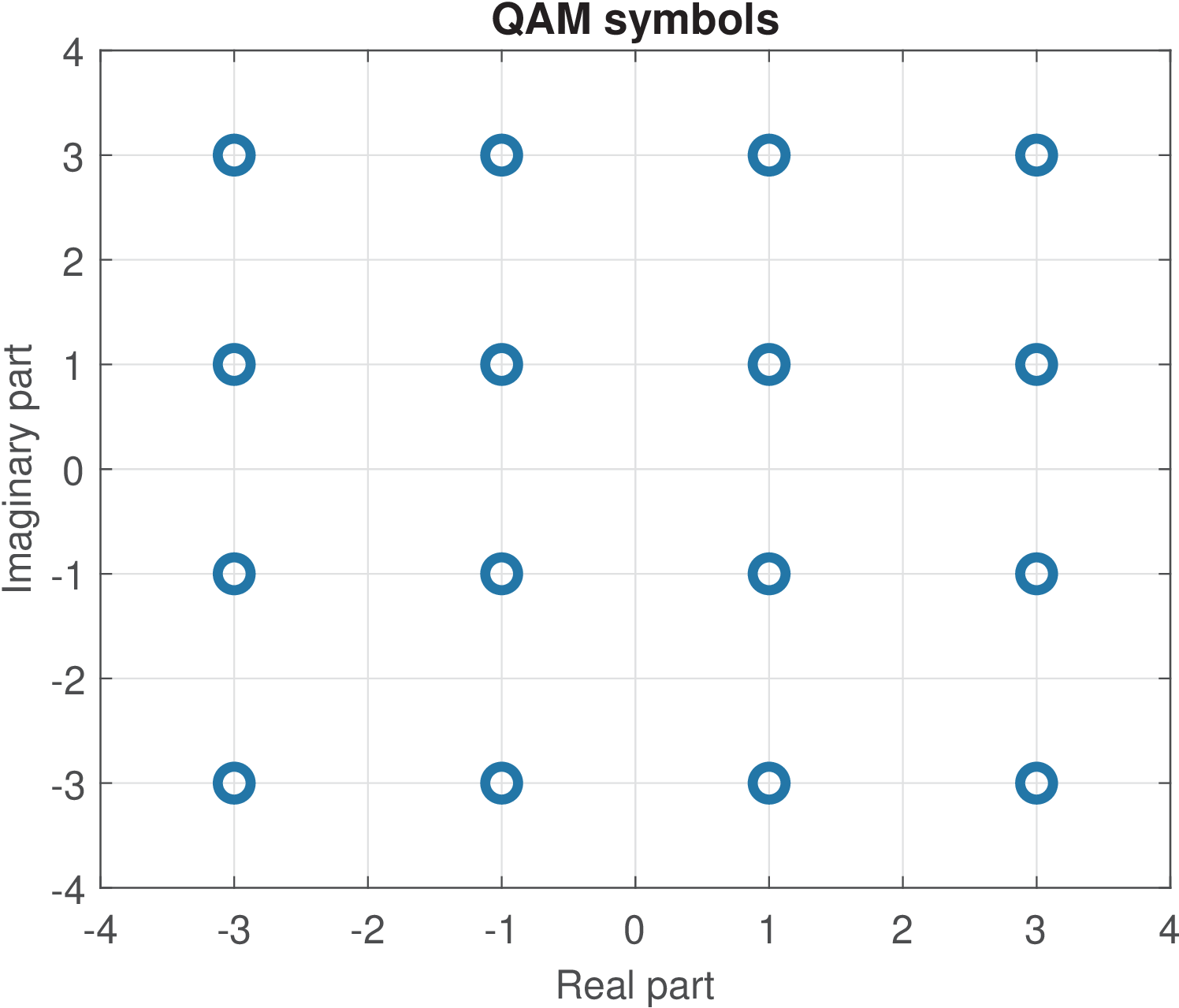

As discussed for PAM in Section 2.7.1, for a given modulation scheme, the set of $M$ distinct vectors of dimension $D$, or symbols, is called the signal constellation. As implied by Eq. (2.12), PAM adopts dimension $D=1$, while Figure 2.24 depicts the symbols of a constellation for a specific 16-QAM modulation ($M=16$), which has dimension $D=2$.

As indicated in Figure 2.24, the 16-QAM constellation can be interpreted as the Cartesian product of two 4-PAM constellations.
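The Cartesian-product interpretation can be sketched in Octave/Matlab as below. The odd-integer 4-PAM levels are an assumption made for illustration and may differ from the values used in Figure 2.24.

```matlab
pam = [-3 -1 1 3];               % 4-PAM amplitudes (assumed levels)
[I, Q] = meshgrid(pam, pam);     % all pairs (first element, second element)
constellation = [I(:) Q(:)];     % 16 x 2 matrix with one symbol per row
M = size(constellation, 1)       % number of symbols
Ec = mean(sum(constellation.^2, 2))   % average constellation energy
```

With these levels, the average energy of the 16-QAM constellation is twice the average energy of each 4-PAM constellation.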

Constellations are useful when representing waveforms as vectors. This representation allows the establishment of several connections between signal and vector spaces. For example, the inner products between symbols (vectors in $\mathbb{R}^D$) can be made equal to the inner products between the continuous-time signals that represent these symbols, i.e.,

$$ \langle \mathbf{m}_1, \mathbf{m}_2 \rangle = \langle s_1(t), s_2(t) \rangle $$

for the first and second symbols, for example.

It is convenient to represent signals by constellation symbols and abstract (or even ignore) the details of the respective waveforms $s(t)$. For example, as long as the basis functions in Eq. (2.29) are orthonormal, the average energy of a signal constellation is invariant to the choice of these functions. In fact, a given constellation can be associated with several distinct sets of orthonormal basis functions with the same properties in terms of robustness to errors under AWGN, for example.
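A small numerical check illustrates both claims. This is a sketch assuming two hypothetical discrete-time orthonormal basis sets, sampled sinusoids and non-overlapping rectangular pulses, and two arbitrary symbols. None of these choices come from the text.

```matlab
S = 32; n = (0:S-1)';            % samples per symbol (assumed) and time index
% basis set 1: sampled cosine and sine spanning an integer number of periods
B1 = [cos(2*pi*n/8) sin(2*pi*n/8)] * sqrt(2/S);
% basis set 2: two non-overlapping rectangular pulses
B2 = [[ones(S/2,1); zeros(S/2,1)] [zeros(S/2,1); ones(S/2,1)]] * sqrt(2/S);
m1 = [3; -1]; m2 = [1; 1];       % two hypothetical symbols (D = 2)
ipVectors  = m1' * m2            % inner product between the symbols
ipSignals1 = (B1*m1)' * (B1*m2)  % inner product between waveforms, set 1
ipSignals2 = (B2*m1)' * (B2*m2)  % inner product between waveforms, set 2
energy1 = sum((B1*m1).^2)        % waveform energy using set 1
energy2 = sum((B2*m1).^2)        % waveform energy using set 2
```

The three inner products coincide, and both waveform energies equal the squared norm of `m1`, regardless of the basis set.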

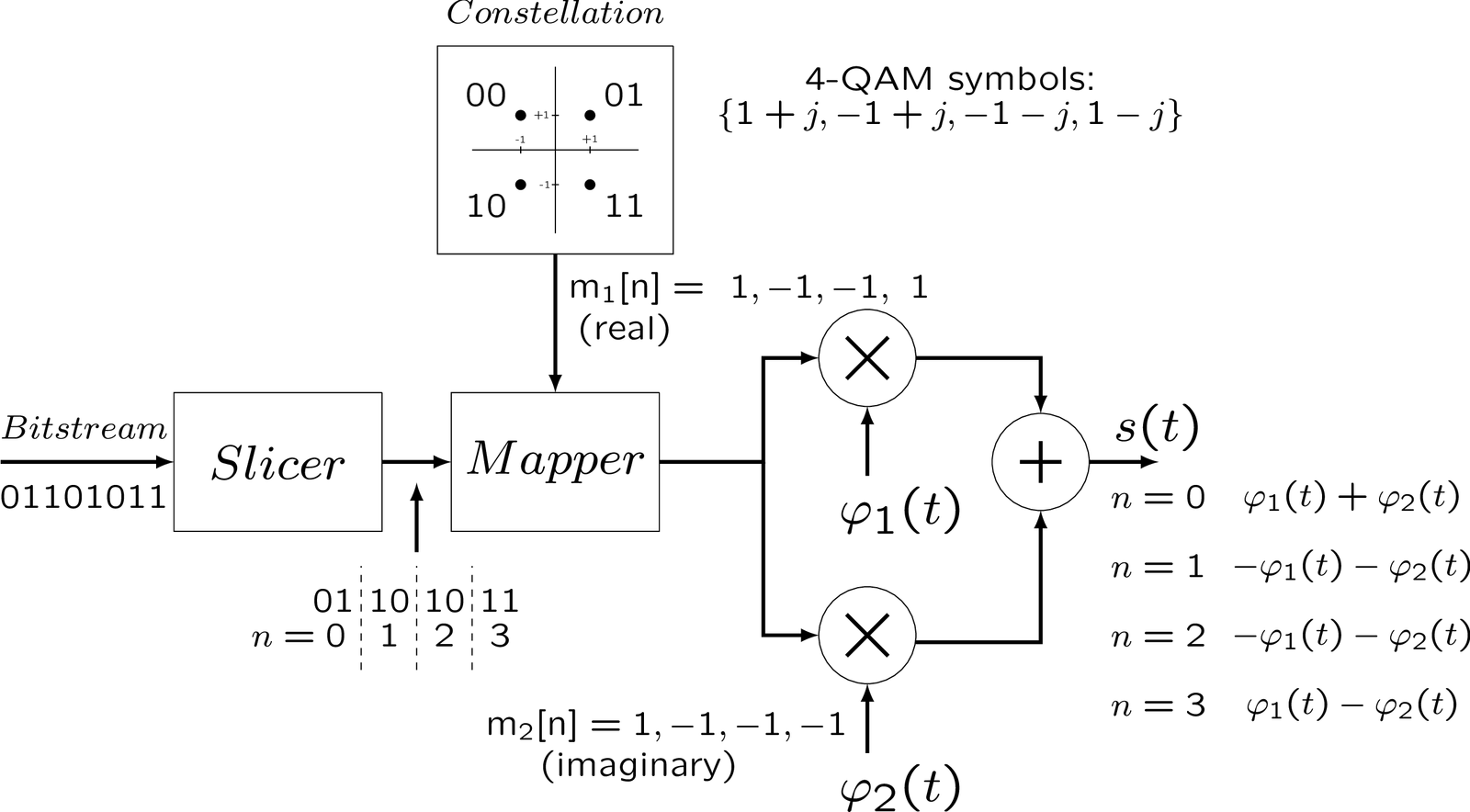

Figure 2.25 shows an example of generating a signal $s(t)$ using a 4-QAM constellation and orthonormal basis functions. When $D=2$, it is mathematically convenient to represent each symbol as a complex number $m = m_1 + j m_2$, where $j = \sqrt{-1}$.

As depicted in Figure 2.25, the slicer organizes the bitstream and feeds the mapper with 2 bits at each iteration $n$. The mapper uses a 4-QAM constellation to convert the binary input into symbols $m[n]$. For example, at one of the iterations the bits are “01”, which are mapped via the defined constellation to the symbol $1 + j$. The real part (1 in this case) of this symbol multiplies $\varphi_1(t)$ while the imaginary part (also 1) multiplies $\varphi_2(t)$. This way, the real and imaginary parts of the complex-valued symbols multiply the corresponding basis functions $\varphi_1(t)$ and $\varphi_2(t)$ to create $s(t)$. Note that $T_{\text{sym}}$ is the duration of each iteration $n$.
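The slicer, mapper and waveform generation steps can be sketched as follows. Only the mapping of “01” to the symbol with real and imaginary parts equal to 1 comes from the text. The remaining constellation entries, the bitstream and the sampled cosine and sine basis functions are assumptions made for illustration.

```matlab
bits = [0 1 1 1 0 0 1 0];            % hypothetical bitstream
pairs = reshape(bits, 2, [])';       % slicer: one row of 2 bits per iteration
% mapper: "01" -> 1+1j as in the text; the other three entries are assumed
m = (2*pairs(:,2) - 1) + 1j*(1 - 2*pairs(:,1));
S = 32; n = (0:S-1)';                % samples per symbol (assumed)
phi1 = sqrt(2/S) * cos(2*pi*n/8);    % assumed first basis function
phi2 = sqrt(2/S) * sin(2*pi*n/8);    % assumed second basis function
s = zeros(S * length(m), 1);         % transmit waveform samples
for k = 1:length(m)
    idx = (k-1)*S + (1:S);           % samples of the k-th iteration
    s(idx) = real(m(k))*phi1 + imag(m(k))*phi2;
end
disp(m.')                            % the mapped complex-valued symbols
```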

2.12.3 Recovering the symbols via correlative decoding

An orthogonal basis allows inner products to recover the symbols

Inner products are key elements to represent waveforms as vectors, as discussed in Section D.3 and Appendix A.12.3. Given that the set of orthonormal basis functions $\{\varphi_i(t)\}$ used to generate the transmit waveform $s(t)$ is known to the receiver, the symbol $\mathbf{m}$ can be obtained using correlative decoding as indicated by Eq. (D.9).

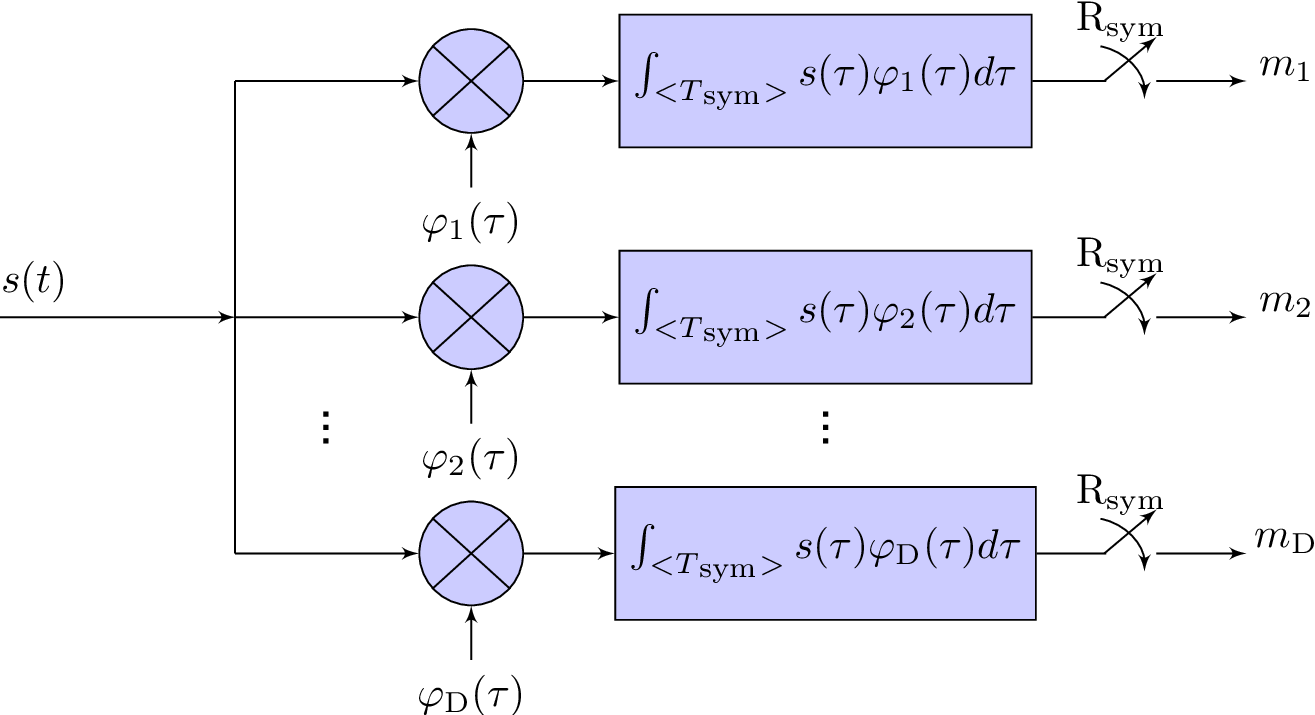

Figure 2.26 depicts the correlative decoding process, which is capable of recovering the constellation symbols used to create $s(t)$ in Eq. (2.30). A clock synchronized with the symbol rate $R_{\text{sym}}$ is required to calculate the $D$ inner products, each over a time interval $T_{\text{sym}} = 1/R_{\text{sym}}$. Considering a given symbol, the process can be written in a simplified form as

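$$ m_i = \langle s(t), \varphi_i(t) \rangle, \quad i = 1, \ldots, D, \tag{2.32} $$

where $m_i$ is the $i$-th element of the current symbol $\mathbf{m}$ and the inner product is calculated over one symbol interval. The use of an inner product between signals as in Eq. (2.32) is analogous to its use between vectors, as discussed in Example A.1.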

Example 2.7. Obtaining the symbols of a linear combination of basis functions. Using a procedure similar to Example A.1, consider that the transmitter uses $D$ basis functions $\varphi_i(t)$ to embed the $n$-th information symbol $\mathbf{m}$ into a segment of the transmit waveform $s(t)$ according to Eq. (2.29). Now the task is to recover the symbol via the correlative decoder of Figure 2.26.

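Assuming the received signal $r(t)$ coincides with the transmitted $s(t)$, to recover the first element $m_1$ of the $n$-th symbol, the inner product between $r(t)$ and $\varphi_1(t)$ can be used as follows:

$$ \langle r(t), \varphi_1(t) \rangle = \left\langle \sum_{i=1}^{D} m_i \varphi_i(t), \varphi_1(t) \right\rangle = \sum_{i=1}^{D} m_i \langle \varphi_i(t), \varphi_1(t) \rangle = m_1, $$

where the second step was obtained due to the linearity of an inner product and the third one due to the orthonormality of the basis functions. The other elements of $\mathbf{m}$ can be retrieved in the same way, via $\langle r(t), \varphi_i(t) \rangle$ for the remaining values of $i$, as suggested by Figure 2.26.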

The previous example assumed a single symbol, but a receiver has the more complicated task of dealing with a signal that represents a sequence of symbols. In practice, the receiver chooses a time instant (or a sample in discrete-time) called the cursor, which serves as a time reference to the start of a symbol, and periodically makes a decision at a rate $R_{\text{sym}}$. The cursor and baud rate may have to be continuously adjusted in a closed loop for tracking small variations.
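The decoding of a sequence of symbols can be sketched in discrete time as below. This is a simplified illustration that assumes a noiseless channel, a perfectly known cursor and no timing drift. The basis functions, the symbol values and all variable names are hypothetical.

```matlab
S = 32; n = (0:S-1)';                % samples per symbol (assumed)
Phi = [cos(2*pi*n/8) sin(2*pi*n/8)] * sqrt(2/S);  % assumed orthonormal basis
symbols = [3 -1; -1 1; 1 3];         % hypothetical symbols, one per row
s = reshape(Phi * symbols', [], 1);  % concatenated symbol waveforms
cursor = 1;                          % first sample of the first symbol (known)
numSymbols = floor((length(s) - cursor + 1) / S);
recovered = zeros(numSymbols, 2);
for k = 1:numSymbols
    segment = s(cursor + (k-1)*S + (0:S-1));   % samples of the k-th symbol
    recovered(k, :) = (Phi' * segment)';       % one inner product per element
end
disp(recovered)                      % should match the transmitted symbols
```

Setting `cursor` to a wrong value (2, for example) makes each segment straddle two symbols, so the inner products no longer return the transmitted values.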

Correlative decoding maximizes SNR when the channel is AWGN

When the channel is AWGN, correlative decoding achieves the optimum performance. A sketch of the proof is deferred to Section 4.3.8 and is based on the equivalence between correlative decoding and matched filtering.

In the sequel, the goal is simply to develop some intuition about how correlative decoding performs when the input signal is not the transmit signal $s(t)$ as in Figure 2.26, but a signal $r(t) = s(t) + \nu(t)$ contaminated by noise $\nu(t)$. For that, it suffices to consider the transmission of a single symbol $m$. For simplicity, PAM is assumed, with $D=1$ and $\varphi_1(t) = p(t)$ being a shaping pulse with unit energy.

As discussed in Section C.10, correlation is a measure of similarity. Because the receiver knows that the transmitted signal was composed by repeatedly scaling the shaping pulse by symbol values, it is sensible to perform the correlation between the received signal and the shaping pulse itself. This way, when there is no noise ($\nu(t) = 0$) and $r(t) = m \, p(t)$, the correlation is

$$ \langle r(t), p(t) \rangle = \langle m \, p(t), p(t) \rangle = m \, \langle p(t), p(t) \rangle = m. \tag{2.33} $$

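More importantly, when AWGN is present and $r(t) = m \, p(t) + \nu(t)$, the correlation is

$$ \langle r(t), p(t) \rangle = m + \langle \nu(t), p(t) \rangle = m + w, \tag{2.34} $$

where the random variable $w = \langle \nu(t), p(t) \rangle$ has variance $N_0/2$ (with $N_0/2$ denoting the two-sided power spectral density of the noise), as suggested by Eq. (F.48), which adopts a filtering notation instead of correlation. This is the minimum noise power that can be observed given the receiver architecture of Figure 2.26.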

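The noise term $w$, of variance $N_0/2$, is uncorrelated with $m$, such that the power of their sum in Eq. (2.34) is the sum of their individual powers. By definition, the power associated with the term $m$ in Eq. (2.34) is the average constellation energy $\overline{E}_c$. Hence, the SNR at the output of the correlative decoder is

$$ \text{SNR} = \frac{\overline{E}_c}{N_0/2} = \frac{2 \overline{E}_c}{N_0} \tag{2.35} $$

because $\overline{E}_c$ and $N_0/2$ are the powers of the discrete-time signals derived from the signal of interest $s(t)$ and the noise $\nu(t)$, respectively.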
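This SNR can be estimated with a small Monte Carlo simulation. The sketch below is a discrete-time approximation under stated assumptions: white Gaussian noise samples with variance `N0/2`, a rectangular unit-energy pulse and a hypothetical 4-PAM constellation. Under these assumptions the expected value is the average constellation energy divided by `N0/2`.

```matlab
N0 = 0.5;                        % assumed noise level (variance N0/2 per sample)
L = 16;                          % samples per symbol (assumed)
p = ones(L, 1) / sqrt(L);        % unit-energy shaping pulse
pam = [-3 -1 1 3];               % hypothetical 4-PAM constellation
numTrials = 20000;               % number of independent channel uses
m = pam(randi(4, 1, numTrials));             % random symbols (row vector)
noise = sqrt(N0/2) * randn(L, numTrials);    % white Gaussian noise samples
r = p * m + noise;               % each column is one received symbol
y = p' * r;                      % correlator outputs: m + w
w = y - m;                       % noise term at the correlator output
snrEstimated = mean(m.^2) / mean(w.^2)
snrTheory = mean(pam.^2) / (N0/2)
```

The two values should agree to within a few percent, with the difference shrinking as `numTrials` grows.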

If the transmit pulse $p(t)$ does not have unit energy, the correlative decoder can use a normalized version of $p(t)$ without any penalty in performance. In fact, any scaling at the receiver affects both the signal of interest and the noise, such that the SNR is not altered, as will be discussed in Section 4.3.4. In contrast, scaling at the transmitter can lead to a stronger signal of interest at the receiver and improve performance. Hence, to simplify the notation, it is convenient to assume unit-energy pulses and control the transmit power via the constellation energy $\overline{E}_c$.
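The invariance of the SNR to receiver scaling can be verified with a short calculation. The sketch below assumes a discrete-time model with white noise of variance `N0/2` per sample, for which the noise at the correlator output has variance `(N0/2)*(q'*q)` when the receiver correlates with a pulse `q`. The gains and numerical values are arbitrary choices for illustration.

```matlab
N0 = 0.5; Ec = 5;                % assumed noise level and constellation energy
L = 16;                          % samples per symbol (assumed)
p = ones(L, 1) / sqrt(L);        % unit-energy transmit pulse
gains = [0.1 1 7];               % arbitrary gains applied at the receiver
snr = zeros(size(gains));
for k = 1:length(gains)
    q = gains(k) * p;                    % scaled pulse used by the correlator
    signalPower = Ec * (q' * p)^2;       % power of the term m * <p, q>
    noisePower = (N0/2) * (q' * q);      % variance of <noise, q>
    snr(k) = signalPower / noisePower;   % the gain cancels in this ratio
end
disp(snr)                        % the same value for every receiver gain
```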

2.12.4 Interpreting digital modulation as transforms

Correlative decoding has this name because the cross-correlation between the input signal and each basis function is repeatedly calculated, as indicated in Figure 2.26. This operation is similar to recovering the coefficients that generated a signal via an inverse transform, as discussed in the sequel.

It is useful to make the connection between modulation / demodulation and transforms. The correlative decoding of Figure 2.26 uses inner products that can be made equivalent to a transform $\mathbf{X} = \mathbf{A}^H \mathbf{x}$. In digital communications, one can interpret the generated “time-domain” signals $x[n]$ (or $x(t)$ in the continuous-time case) as inverse transforms of a finite number of possible coefficient vectors $\mathbf{X}$.

When compared to other applications of transforms, the following characteristics of their use in digital communications are emphasized:

- The number of basis functions is finite and relatively small (two, for example).

- The transform matrix and its inverse are not square.

- While some applications of transforms do not impose restrictions on the coefficient values, in the context of digital communication systems the transform coefficients are restricted to the finite set of symbols called the constellation.

- The basis functions are often real, in which case $\mathbf{A}^H$ is simply the transpose of $\mathbf{A}$, such that the functions that appear in Figure 2.26 are used in both transmitter and receiver.

The following example uses QAM modulation to illustrate the analogy.

Example 2.8. QAM modulation interpreted as a transform. Assume a digital communication system simultaneously uses the amplitudes of a cosine and a sine to convey information to the receiver. Assume also that the possible amplitude values, for both cosine and sine, are $\{-3, -1, 1, 3\}$, corresponding to a 4-PAM for each of the two dimensions of the QAM. Assume also that, in a discrete-time implementation, each message (or symbol) is represented by $S=32$ samples and both sinusoids have a period of 8 samples. Hence, each symbol in time-domain is given by

$$ x[n] = \sqrt{\frac{2}{S}} \left( A_c \cos\left( \frac{2\pi}{8} n \right) + A_s \sin\left( \frac{2\pi}{8} n \right) \right), \quad n = 0, 1, \ldots, S-1, $$

where $A_c$ and $A_s$ are the amplitudes of the cosine and sine, respectively, and the scaling factor $\sqrt{2/S}$ normalizes the basis functions to have unit energy (as in the DCT). Note that for each block of $S$ samples there is a pair $(A_c, A_s)$. Because there are $4 \times 4 = 16$ possible pairs $(A_c, A_s)$, each symbol can carry $\log_2 16 = 4$ bits.

In an analogy to block transforms, the amplitudes $A_c$ and $A_s$ play the role of the coefficients, and the transform matrix $\mathbf{A}$ has dimension $S \times 2$, with columns consisting of the cosine and sine samples. In this case the transform matrix is not square and a pseudoinverse should be adopted for mathematical consistency when obtaining the inverse of $\mathbf{A}$. In practice, given that the columns are orthonormal, the pseudoinverse of $\mathbf{A}$ is $\mathbf{A}^H$. Hence, given a vector $\mathbf{x}$ representing the samples of $x[n]$, the coefficients can be obtained by $\mathbf{X} = \mathbf{A}^H \mathbf{x}$. Listing 2.15 illustrates the procedure.

    S=32; %number of samples per symbol
    Period=8; %period of sinusoids
    n=(0:S-1)'; %time index
    %inverse matrix:
    A=[cos(2*pi/Period*n) sin(2*pi/Period*n)]*sqrt(2/S);
    innerProduct=sum(A(:,1).*A(:,2)) %are columns orthogonal?
    if 1 %test two alternatives to obtain Ah from A
      Ah=A'; %the pseudoinverse is the Hermitian
    else
      Ah=pinv(A); %equivalently, use pseudoinverse function
    end
    Ac=3; As=-1;
    x=Ac*A(:,1)+As*A(:,2); %compose the signal in time domain
    X=Ah*x %demodulation at the receiver: recover amplitudes

Note that the period of 8 samples was chosen such that the $S=32$ samples of a symbol correspond to an integer number of periods and the sinusoids are orthogonal. Try a period of 9 in the previous script and note that the original amplitudes cannot be perfectly recovered when `Ah=A'` is used, because the columns of $\mathbf{A}$ are no longer orthonormal. Listing 2.22 provides another, and more complete, example of using transforms for correlative decoding.
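The experiment with a period of 9 can be sketched as follows, reusing the construction of Listing 2.15. The variables `errHermitian` and `errPinv` are names introduced here for illustration. The sketch also compares the Hermitian with the `pinv` alternative, which remains exact as long as the two columns are linearly independent.

```matlab
S = 32; n = (0:S-1)';            % samples per symbol and time index
Ac = 3; As = -1;                 % transmitted amplitudes
periods = [8 9];                 % integer and non-integer number of cycles
errHermitian = zeros(1, 2);      % recovery error when using A'
errPinv = zeros(1, 2);           % recovery error when using pinv(A)
for k = 1:2
    A = [cos(2*pi/periods(k)*n) sin(2*pi/periods(k)*n)] * sqrt(2/S);
    x = Ac*A(:,1) + As*A(:,2);   % compose the signal in time domain
    errHermitian(k) = max(abs(A' * x - [Ac; As]));
    errPinv(k) = max(abs(pinv(A) * x - [Ac; As]));
end
disp([periods; errHermitian; errPinv])
```

For a period of 8 both errors are negligible, while for a period of 9 only the pseudoinverse recovers the amplitudes up to numerical precision.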