5.2 Detection Theory and Probability of Error for AWGN Channels

One application of detection theory is choosing among hypotheses given a set of measurements. In our case, the problem is to choose a symbol given the received signal. Instead of dealing with waveforms, Listing 4.3 indicated that, for AWGN and matched filtering, it is appropriate to use a symbol-based simulation or, equivalently, to adopt a vector channel model.

Three basic aspects determine the symbol error probability:

1. The noise distribution (power, dynamic range, etc.) at the receiver.
2. The characteristics of the signal of interest at the receiver, given primarily by the transmitted constellation (position of symbols, constellation energy, probability of each symbol) and the energy of the shaping pulse.
3. The decision regions used by the receiver, which typically depend on the adopted criterion, such as maximum likelihood (ML) or maximum a posteriori (MAP).

Because the following discussion assumes AWGN, it is sensible to also assume that a matched filter (MF) is used and to adopt the notation of Section 4.3.4. To simplify the expressions, however, the pulses are assumed to have unit energy and the symbols are scalar or complex (not vectors with arbitrary elements). This way, the notation can rely on a symbol $x$ being transmitted and the receiver observing $y$. This abstracts the whole process of creating a waveform via pulse shaping at the transmitter and recovering the symbol at the receiver. The reader must be aware, though, that $y$ is obtained with an MF, so the receiver does not make its decision based on a single sample of the raw received signal.
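Below is a minimal sketch of this symbol-based abstraction. It never builds a waveform; instead, each MF output is modeled directly as $y = x + n$. The sketch makes three assumptions:

- The pulses have unit energy.
- The noise is circularly symmetric complex Gaussian.
- The noise's total variance `sigma2` is split equally between the real and imaginary parts.

The helper name `awgn_symbol_channel` and the QPSK symbols in the usage are illustrative choices, not part of the text's listings.

```python
import math
import random


def awgn_symbol_channel(symbols, sigma2, seed=None):
    """Hypothetical helper: return y = x + n for each complex symbol x.

    Assumes unit-energy pulses and matched filtering, so each MF output equals
    the transmitted symbol plus complex Gaussian noise with total variance
    sigma2 (sigma2 / 2 in each real dimension).
    """
    rng = random.Random(seed)
    std_per_dim = math.sqrt(sigma2 / 2)
    return [x + complex(rng.gauss(0.0, std_per_dim), rng.gauss(0.0, std_per_dim))
            for x in symbols]


# Usage: random QPSK symbols (an illustrative constellation) through the channel.
rng = random.Random(0)
qpsk = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]
tx = [rng.choice(qpsk) for _ in range(20000)]
rx = awgn_symbol_channel(tx, sigma2=0.5, seed=1)

# The empirical noise power should be close to sigma2.
noise_power = sum(abs(y - x) ** 2 for x, y in zip(tx, rx)) / len(tx)
print(f"estimated noise power: {noise_power:.3f}")
```

Because decisions only need $y$, the same pattern applies to any constellation by changing the list of transmitted symbols.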

The receiver must adopt a method for making decisions. In principle, this method could consist of arbitrarily chosen thresholds applied with if/else statements. In practice, however, the receiver follows a sound mathematical criterion, such as making the decisions that minimize the probability of symbol error $P_e$.

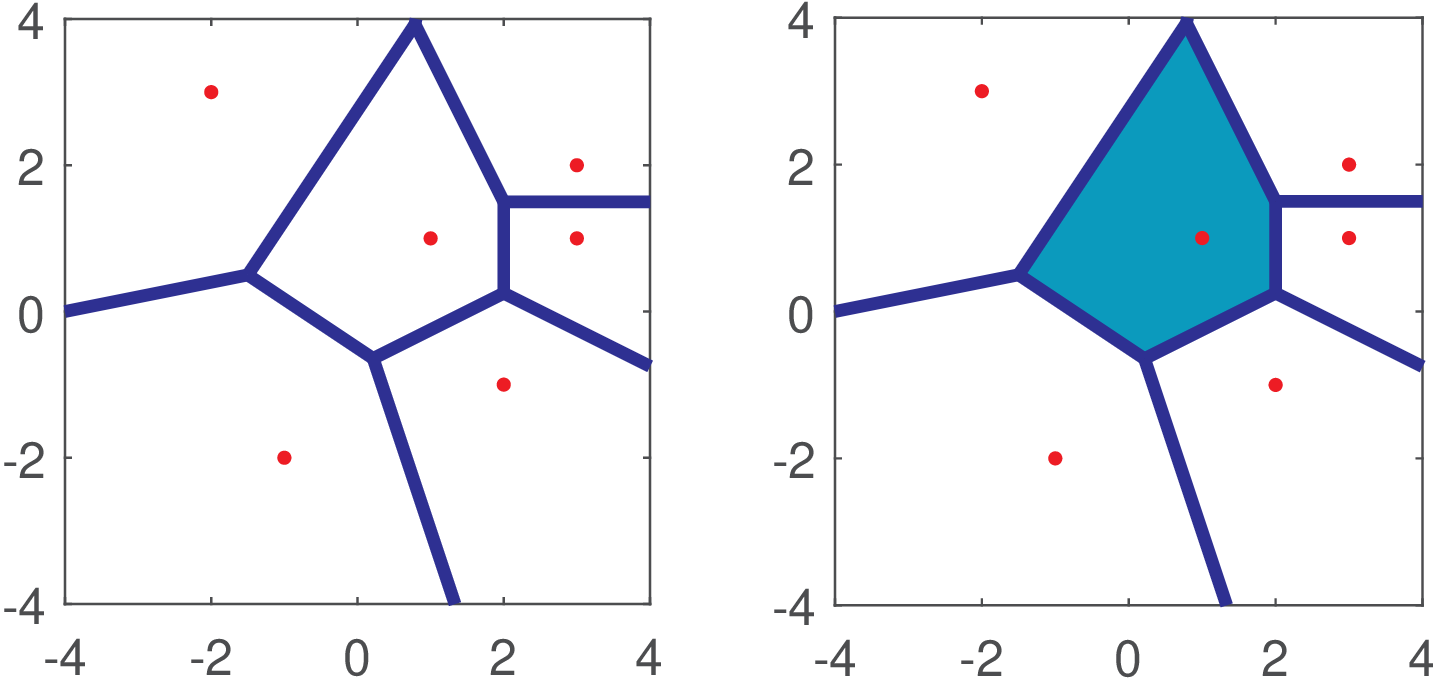

Given a decision method, the space where the transmitted (and received) symbols lie is partitioned, and each transmitted symbol $x_i$ becomes associated with a decision region $\mathcal{R}_i$. From the receiver's perspective, all points belonging to $\mathcal{R}_i$ are interpreted as $x_i$. As an example, let the symbols be two-dimensional vectors from the set depicted in Figure 5.3. For better visualization, the symbols were deliberately placed at arbitrary positions instead of following constellations adopted in practice. Assume the receiver makes decisions according to the minimum Euclidean distance criterion. This is equivalent to partitioning the space into decision regions called Voronoi regions, as depicted in Figure 5.3. For example, every received symbol that falls in the region associated with a given $x_i$ is interpreted as $x_i$.
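The minimum Euclidean distance rule can be sketched in a few lines. The Python example below represents each two-dimensional point as a complex number and returns the index of the closest symbol. That index is exactly the index of the Voronoi region containing the received point. The four symbol positions are arbitrary assumptions (not the ones in Figure 5.3), and `min_distance_decision` is a hypothetical name.

```python
def min_distance_decision(y, constellation):
    """Return the index i of the symbol closest to y (Euclidean distance).

    Points are complex numbers (real part = first coordinate, imaginary part =
    second coordinate). Ties go to the lowest index because min() keeps the
    first minimum it finds.
    """
    return min(range(len(constellation)), key=lambda i: abs(y - constellation[i]))


# Arbitrarily positioned symbols, in the spirit of Figure 5.3 (values assumed).
constellation = [0 + 0j, 2 + 1j, -1 + 2j, 1 - 2j]

for y in [1.8 + 0.7j, -0.2 + 0.1j, -0.8 + 1.5j, 0.9 - 1.5j]:
    i = min_distance_decision(y, constellation)
    print(f"received {y} -> decided symbol index {i} ({constellation[i]})")
```

The Voronoi regions never need to be stored explicitly: comparing distances to every symbol implicitly decides which region contains $y$.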

The error probability depends on the decision regions and also on how the noise affects each symbol. The conditional probability $p(y \mid x)$ of the output symbol $y$ given the input symbol $x$ completely describes the discrete-time AWGN channel (in fact, any vector channel). For AWGN, all conditional distributions are Gaussian, each centered at the corresponding transmitted symbol.
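For a real-valued (scalar) AWGN channel, the likelihood is $p(y \mid x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-(y-x)^2/(2\sigma^2)}$. The sketch below evaluates it for each symbol of a 4-PAM constellation. The symbol values $\{-3,-1,1,3\}$, the noise variance and the helper name `gaussian_likelihood` are assumptions for illustration. The sketch also checks numerically that $p(y \mid x)$ integrates to one. Finally, it shows that $p(y \mid x)$ is positive for every candidate symbol, not only the nearest one.

```python
import math


def gaussian_likelihood(y, x, sigma2):
    """p(y|x) for y = x + n with n ~ N(0, sigma2) (real-valued AWGN)."""
    return math.exp(-(y - x) ** 2 / (2 * sigma2)) / math.sqrt(2 * math.pi * sigma2)


symbols = [-3.0, -1.0, 1.0, 3.0]  # assumed 4-PAM symbols
sigma2 = 0.5                      # assumed noise variance
y = 0.7                           # an example received value

likelihoods = [gaussian_likelihood(y, x, sigma2) for x in symbols]
for x, p in zip(symbols, likelihoods):
    print(f"p(y={y} | x={x:+.0f}) = {p:.3e}")

# Riemann-sum check that p(y|x=1) integrates to one over a wide interval.
h = 1e-3
area = sum(gaussian_likelihood(-9.0 + k * h, 1.0, sigma2) * h for k in range(20000))
print(f"area under p(y|x=1): {area:.6f}")
```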

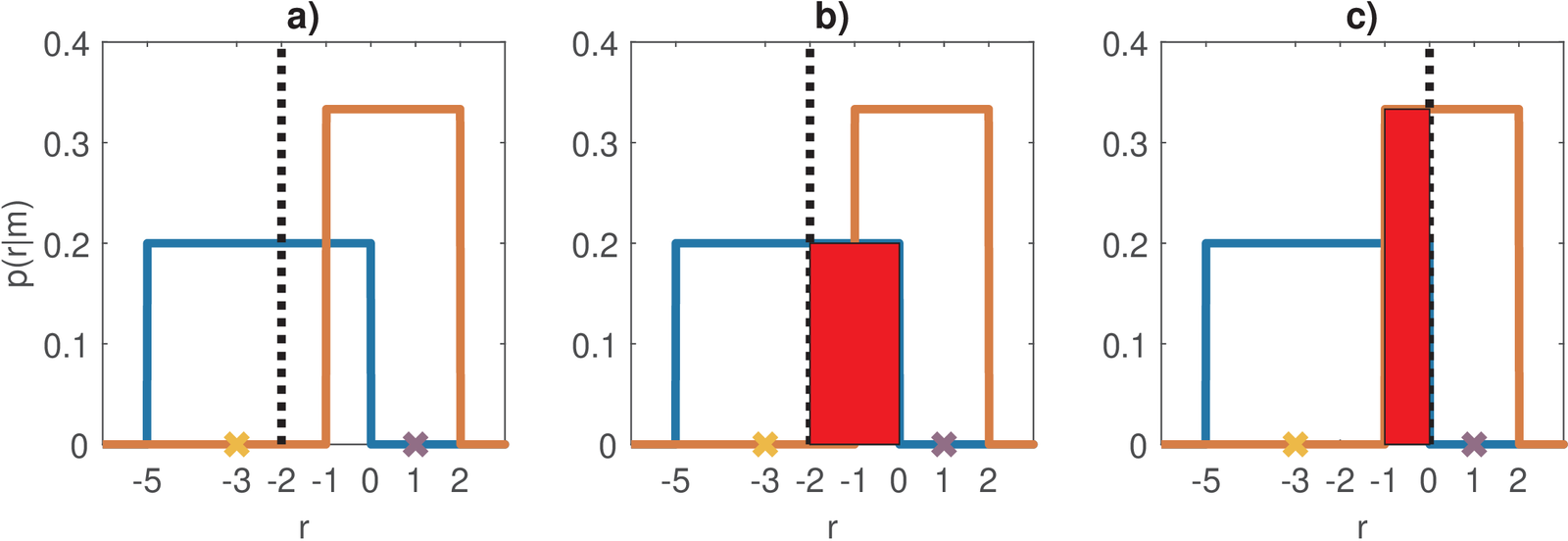

As an example, assume a binary transmission with symbols $x_1$ and $x_2$ with prior probabilities $p(x_1) = 0.9$ and $p(x_2) = 0.1$, respectively. The noise is such that $p(y \mid x_1)$ and $p(y \mid x_2)$ are uniform distributions. The conventional AWGN is not used here because it is simpler to calculate the probabilities with uniform rather than Gaussian PDFs. The receiver adopts a threshold to create two decision regions. Figure 5.4 illustrates the example. The SER is given by

$$P_e = \sum_{i=1}^{M} p(x_i)\, P(e \mid x_i), \tag{5.1}$$

where $M = 2$ here and $P(e \mid x_i)$ is the probability of error given that $x_i$ was transmitted. In this case, the noise that affects one of the two symbols is never strong enough to cause an error, so its conditional probability of error is zero. When the other symbol is transmitted, the received value may fall in the range indicated in Figure 5.4 b). That range belongs to the decision region of the error-free symbol, so these cases are wrongly interpreted. Hence, the conditional probability of error of the transmitted symbol is given by the indicated area in Figure 5.4 b). According to Eq. (5.1), $P_e$ equals this area multiplied by the prior of that symbol.
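Below is a minimal sketch of Eq. (5.1) for this uniform example, cross-checked by Monte Carlo simulation. The likelihood supports are an assumption inferred from the expressions in Listing 5.2:

- The symbol with prior 0.9 (`x1` in the comments) has a uniform $p(y \mid x_1)$ of height 0.2 ending at 0, taken here as $[-5, 0]$.
- The symbol with prior 0.1 has height $1/3$ starting at $-1$, taken here as $[-1, 2]$.

The receiver decides the second symbol when $y$ exceeds the threshold `t`. The usage evaluates the arbitrary value `t = -0.5`, which is not necessarily the threshold of Figure 5.4 b).

```python
import random

# Assumed model, inferred from Listing 5.2 (the full supports are an assumption):
# x1: prior 0.9, p(y|x1) uniform on [-5, 0] (height 0.2)
# x2: prior 0.1, p(y|x2) uniform on [-1, 2] (height 1/3)
PRIORS = (0.9, 0.1)
SUPPORTS = ((-5.0, 0.0), (-1.0, 2.0))


def conditional_errors(t):
    """Return (P(e|x1), P(e|x2)) for the rule: decide x2 if y > t, else x1."""
    (a1, b1), (a2, b2) = SUPPORTS
    pe1 = max(0.0, b1 - max(t, a1)) / (b1 - a1)  # mass of p(y|x1) above t
    pe2 = max(0.0, min(t, b2) - a2) / (b2 - a2)  # mass of p(y|x2) below t
    return pe1, pe2


def ser(t):
    """Eq. (5.1): P_e = sum_i p(x_i) P(e|x_i)."""
    return sum(p * pe for p, pe in zip(PRIORS, conditional_errors(t)))


def ser_monte_carlo(t, n=100000, seed=0):
    """Estimate P_e by simulating symbols, uniform likelihoods and decisions."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(n):
        i = 0 if rng.random() < PRIORS[0] else 1  # 0 -> x1, 1 -> x2
        y = rng.uniform(*SUPPORTS[i])
        decided = 1 if y > t else 0
        errors += int(decided != i)
    return errors / n


t = -0.5  # arbitrary threshold for illustration
print(f"P(e|x1), P(e|x2) = {conditional_errors(t)}")
print(f"Eq. (5.1): {ser(t):.4f}  Monte Carlo: {ser_monte_carlo(t):.4f}")
```

Computing the conditional errors as interval overlaps keeps the probabilities automatically clipped to $[0, 1]$, which Listing 5.2 handles with explicit `if` statements.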

The previous calculation assumed the receiver had already established the decision regions based on a given threshold. A pertinent question is: what threshold minimizes $P_e$? Listing 5.2 helps find the optimal threshold in this very specific case by evaluating $P_e$ over a grid of candidate thresholds.

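```matlab
N = 1000;
thresholds = linspace(-2, 2, N); % grid of candidate thresholds
Pe = zeros(1, N);
for i = 1:N % loop over the defined grid of thresholds
    Pe1 = 0.2*(-thresholds(i));  % P(e|symbol with prior 0.9)
    Pe2 = 1/3*(thresholds(i)+1); % P(e|symbol with prior 0.1)
    if Pe1 < 0, Pe1 = 0; end % a probability cannot be negative
    if Pe2 < 0, Pe2 = 0; end % a probability cannot be negative
    Pe(i) = 0.9*Pe1 + 0.1*Pe2; % prob. of error for thresholds(i), Eq. (5.1)
end
plot(thresholds, Pe); % visualize prob. of error for each threshold
xlabel('Threshold'); ylabel('P_e');
[minPe, iMin] = min(Pe); % best threshold on the grid
disp(['Optimal threshold: ' num2str(thresholds(iMin)) ', Pe = ' num2str(minPe)])
```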

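Because the prior of the first symbol (0.9) is much higher, the optimal threshold for this example is 0. With this threshold, all transmitted symbols with prior 0.9 are correctly interpreted, and their conditional probability of error is zero. The new situation, with the threshold at 0, is indicated in Figure 5.4 c). The highlighted area corresponds to the conditional probability of error of the symbol with prior 0.1, which Listing 5.2 gives as $\frac{1}{3}(0+1) = \frac{1}{3}$. In this case,

$$P_e = 0.9 \times 0 + 0.1 \times \frac{1}{3} = \frac{1}{30} \approx 0.033.$$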
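The grid search of Listing 5.2 can be confirmed analytically. Denote the threshold by $\gamma$, a symbol introduced only for this derivation. From Listing 5.2, the symbol with prior 0.9 has $P(e \mid x_1) = \max(0, -0.2\,\gamma)$ and the symbol with prior 0.1 has $P(e \mid x_2) = \max\!\big(0, (\gamma+1)/3\big)$. Over the grid $-2 \le \gamma \le 2$, the SER is then piecewise linear:

$$
P_e(\gamma) = 0.9\,P(e \mid x_1) + 0.1\,P(e \mid x_2) =
\begin{cases}
-0.18\,\gamma, & -2 \le \gamma < -1,\\[2pt]
\dfrac{1}{30} - \dfrac{11}{75}\,\gamma, & -1 \le \gamma < 0,\\[4pt]
\dfrac{\gamma + 1}{30}, & 0 \le \gamma \le 2.
\end{cases}
$$

The slope is negative for $\gamma < 0$ and positive for $\gamma > 0$, so the minimum is at $\gamma = 0$ with $P_e(0) = 1/30$. Intuitively, moving the threshold below zero removes errors of the rare symbol at a rate of $0.1 \times 1/3 \approx 0.033$ per unit of threshold. At the same time, it creates errors of the frequent symbol at the larger rate of $0.9 \times 0.2 = 0.18$.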

In decision theory, the minimum achievable error (obtained with optimal decisions) is called the Bayes error. In digital communications, the Bayes error is zero only when the conditional distributions $p(y \mid x_i)$ of different symbols do not overlap. This never happens for Gaussians because of their infinite support.
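The sketch below illustrates why the Bayes error is not zero with Gaussian noise. For two hypotheses, it numerically integrates $\int \min_i p(x_i)\,p(y \mid x_i)\,dy$, which is the error of the optimal (MAP) decision. It assumes equiprobable antipodal symbols $\pm 1$ on a real-valued AWGN channel, an illustrative choice. In that case the result should match the well-known $Q(1/\sigma)$, with $Q(z) = \tfrac{1}{2}\operatorname{erfc}(z/\sqrt{2})$. The helper names are hypothetical.

```python
import math


def gauss_pdf(y, mean, sigma2):
    """Gaussian PDF N(y; mean, sigma2)."""
    return math.exp(-(y - mean) ** 2 / (2 * sigma2)) / math.sqrt(2 * math.pi * sigma2)


def bayes_error_binary(priors, means, sigma2, lo=-20.0, hi=20.0, n=100000):
    """Midpoint-rule integral of min_i p(x_i) p(y|x_i) over y (two hypotheses)."""
    h = (hi - lo) / n
    total = 0.0
    for k in range(n):
        y = lo + (k + 0.5) * h
        total += min(priors[0] * gauss_pdf(y, means[0], sigma2),
                     priors[1] * gauss_pdf(y, means[1], sigma2))
    return total * h


def q_function(z):
    """Gaussian tail probability Q(z) = P(N(0, 1) > z)."""
    return 0.5 * math.erfc(z / math.sqrt(2))


for sigma2 in [1.0, 0.25, 0.04]:
    numeric = bayes_error_binary((0.5, 0.5), (-1.0, 1.0), sigma2)
    closed_form = q_function(1.0 / math.sqrt(sigma2))
    print(f"sigma2={sigma2}: Bayes error {numeric:.3e} (Q(1/sigma) = {closed_form:.3e})")
```

As the noise variance shrinks, the Bayes error drops quickly. It stays positive, however, because the two Gaussians always overlap.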

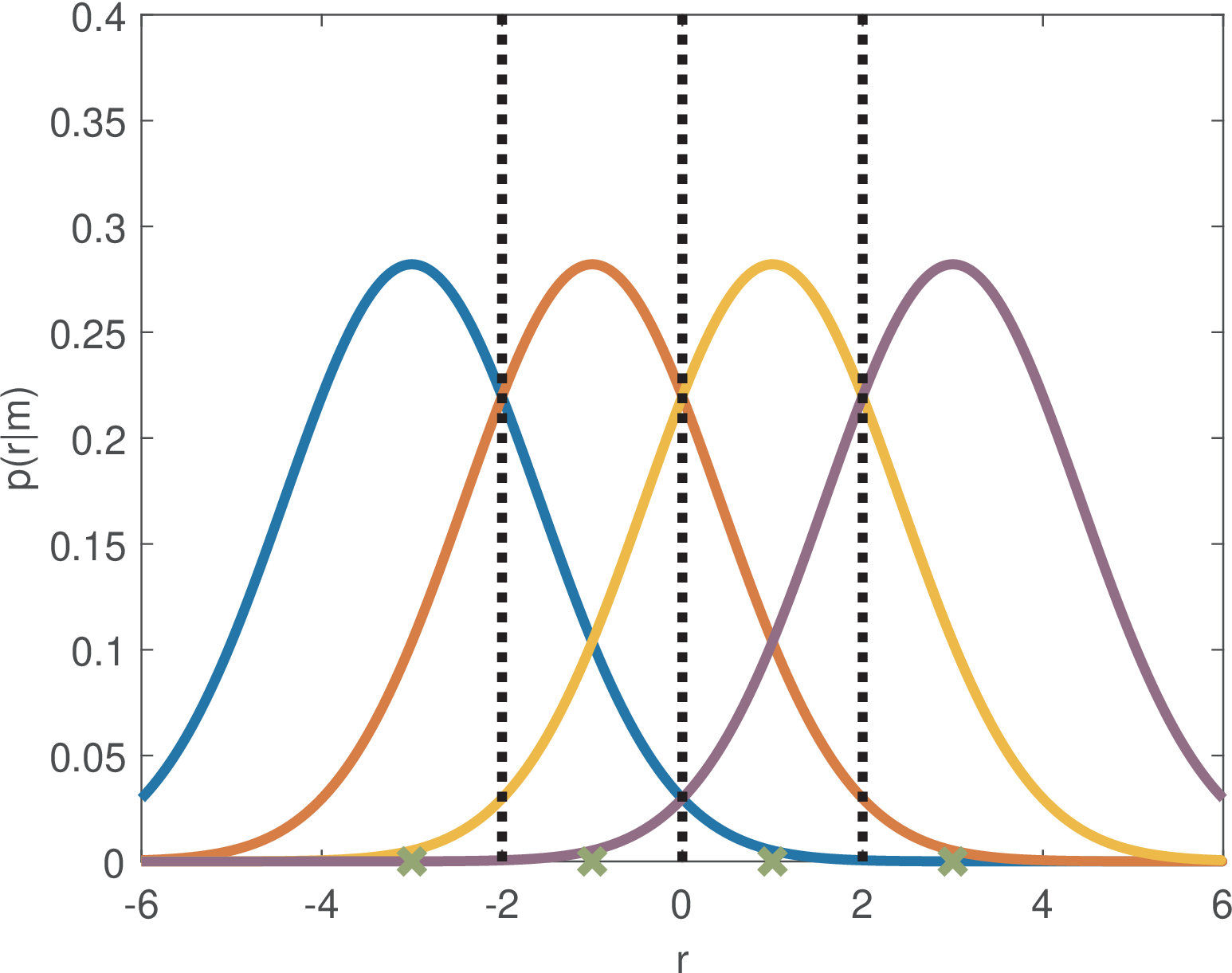

For the AWGN case, and because in most cases the symbols are equiprobable, calculating $P_e$ via Eq. (5.1) can benefit from the symmetry of the problem. Figure 5.5 depicts this scenario for a 4-PAM constellation, assuming Gaussian noise with variance $\sigma^2$. In the discussion associated with Figure 5.2, a result for this 4-PAM case with AWGN was already obtained. The next paragraphs seek a general result, valid for any PAM. In some situations, such as this one, it is useful to work with the probability of making correct decisions $P_c$, given by $P_c = 1 - P_e$. The reason is that the conditional probabilities $P(c \mid x_i)$ of a correct decision are sometimes easier to calculate, and $P_e$ can then be derived as

$$P_e = 1 - P_c = 1 - \sum_{i=1}^{M} p(x_i)\, P(c \mid x_i)$$

instead of using Eq. (5.1).
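The $P_c$ route can be sketched for a PAM constellation with equiprobable symbols and minimum-distance decisions, using thresholds halfway between neighbors. Each $P(c \mid x_i)$ is the Gaussian probability that $x_i + n$ stays inside its own interval, so it is a difference of two CDF values. The two outer symbols have one-sided intervals. The symbol set $\{-3,-1,1,3\}$, the noise standard deviation and the helper names are assumptions for illustration. A Monte Carlo simulation cross-checks the result.

```python
import math
import random


def gauss_cdf(z):
    """Standard Gaussian CDF, valid also for z = +/- infinity."""
    return 0.5 * math.erfc(-z / math.sqrt(2))


def pam_ser_via_pc(symbols, sigma):
    """P_e = 1 - sum_i p(x_i) P(c|x_i) for equiprobable PAM symbols."""
    s = sorted(symbols)
    M = len(s)
    # Decision boundaries halfway between neighbors; outer regions are unbounded.
    bounds = [-math.inf] + [(s[i] + s[i + 1]) / 2 for i in range(M - 1)] + [math.inf]
    pc = 0.0
    for i, x in enumerate(s):
        lo, hi = bounds[i], bounds[i + 1]
        # P(c|x) = P(lo < x + n <= hi) with n ~ N(0, sigma^2), weighted by 1/M
        pc += (gauss_cdf((hi - x) / sigma) - gauss_cdf((lo - x) / sigma)) / M
    return 1.0 - pc


def pam_ser_monte_carlo(symbols, sigma, n=100000, seed=0):
    """Estimate P_e by simulation with minimum-distance decisions."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(n):
        x = rng.choice(symbols)
        y = x + rng.gauss(0.0, sigma)
        x_hat = min(symbols, key=lambda s: abs(y - s))
        errors += int(x_hat != x)
    return errors / n


symbols = [-3.0, -1.0, 1.0, 3.0]  # assumed 4-PAM symbols
sigma = 0.8                       # assumed noise standard deviation
pe_analytic = pam_ser_via_pc(symbols, sigma)
pe_simulated = pam_ser_monte_carlo(symbols, sigma)
print(f"P_e via P_c: {pe_analytic:.4f}  Monte Carlo: {pe_simulated:.4f}")
```

With these symbols, the inner symbols have $P(c \mid x_i) = 1 - 2Q(1/\sigma)$ and the outer ones have $1 - Q(1/\sigma)$. The sketch should therefore reproduce $P_e = \tfrac{3}{2}Q(1/\sigma)$ for this case.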

In the sequel, two criteria for defining the decision regions are presented.